Intel has been researching a newer area of compute called neuromorphic computing. The approach is perhaps not quite as dramatic a departure as quantum computing, but it is still a major shift. The basic idea is to design a compute platform informed by neuroscience and how brains work, then use that framework to complete tasks in a more energy-efficient manner.

Intel Loihi 2 Neuromorphic Compute Tile on Intel 4

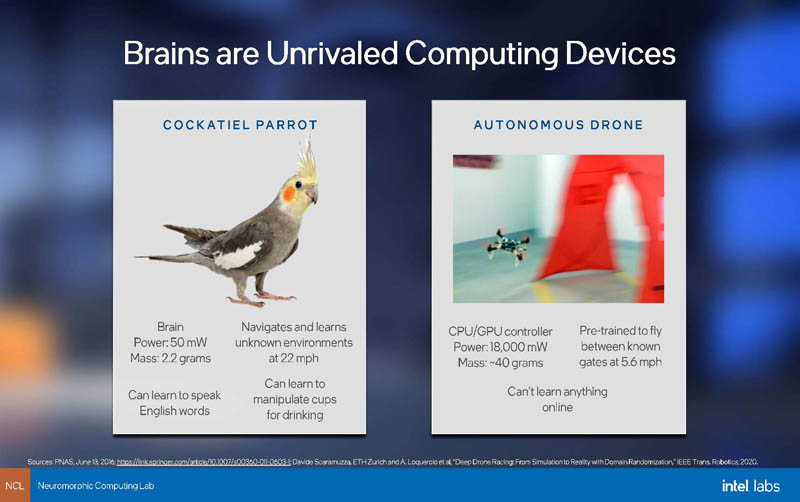

Normally we skip these examples, but this one hit home. People often talk about making machines that resemble the human brain, but Intel here is using a parrot. The parrot has a small, lightweight, low-power brain, yet it is autonomous and can learn new tasks and adapt to environments in real time. Compared to a drone, the parrot has some unique capabilities (although it cannot, for example, organically record and stream video), and it operates at much lower power consumption.

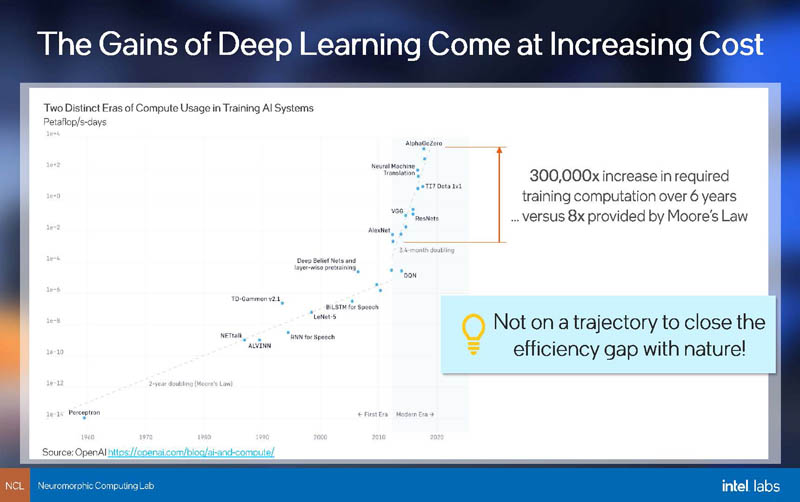

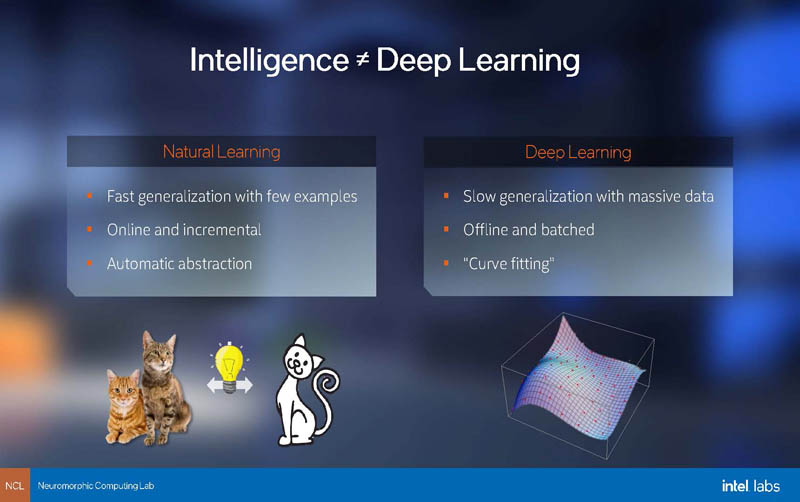

Intel is undertaking this research because brains can train on very small data sets, and do so relatively quickly. Traditional deep learning models are being deployed today, but their cost and power consumption keep climbing.

So the goal of neuromorphic computing is not necessarily to get the correct answer 100% of the time. Instead, the goal is to quickly and efficiently learn and apply what is learned.

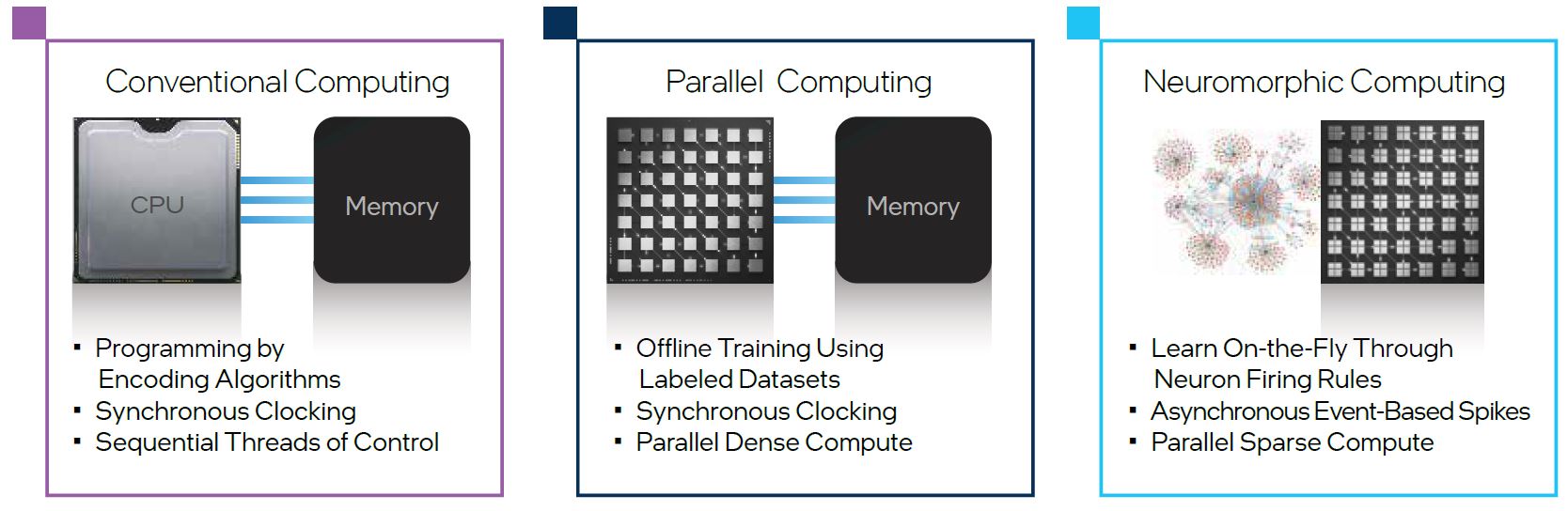

Here is a quick comparison of CPU, parallel (GPU), and neuromorphic compute. One thing being called out here is the lack of off-chip memory.

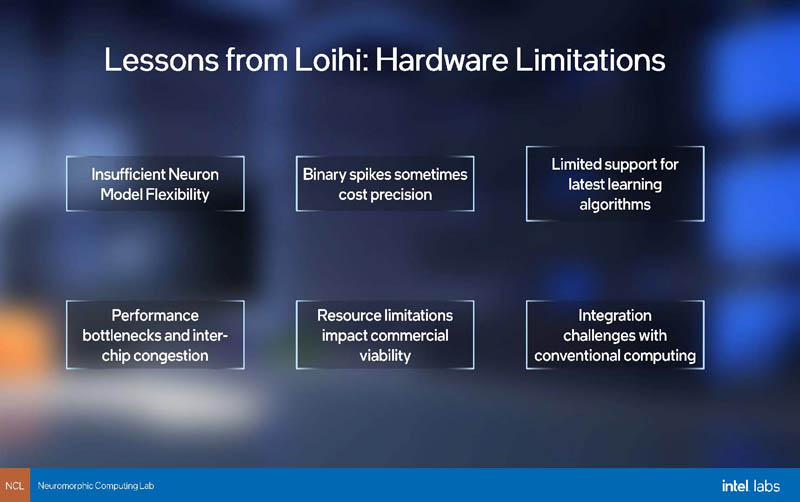

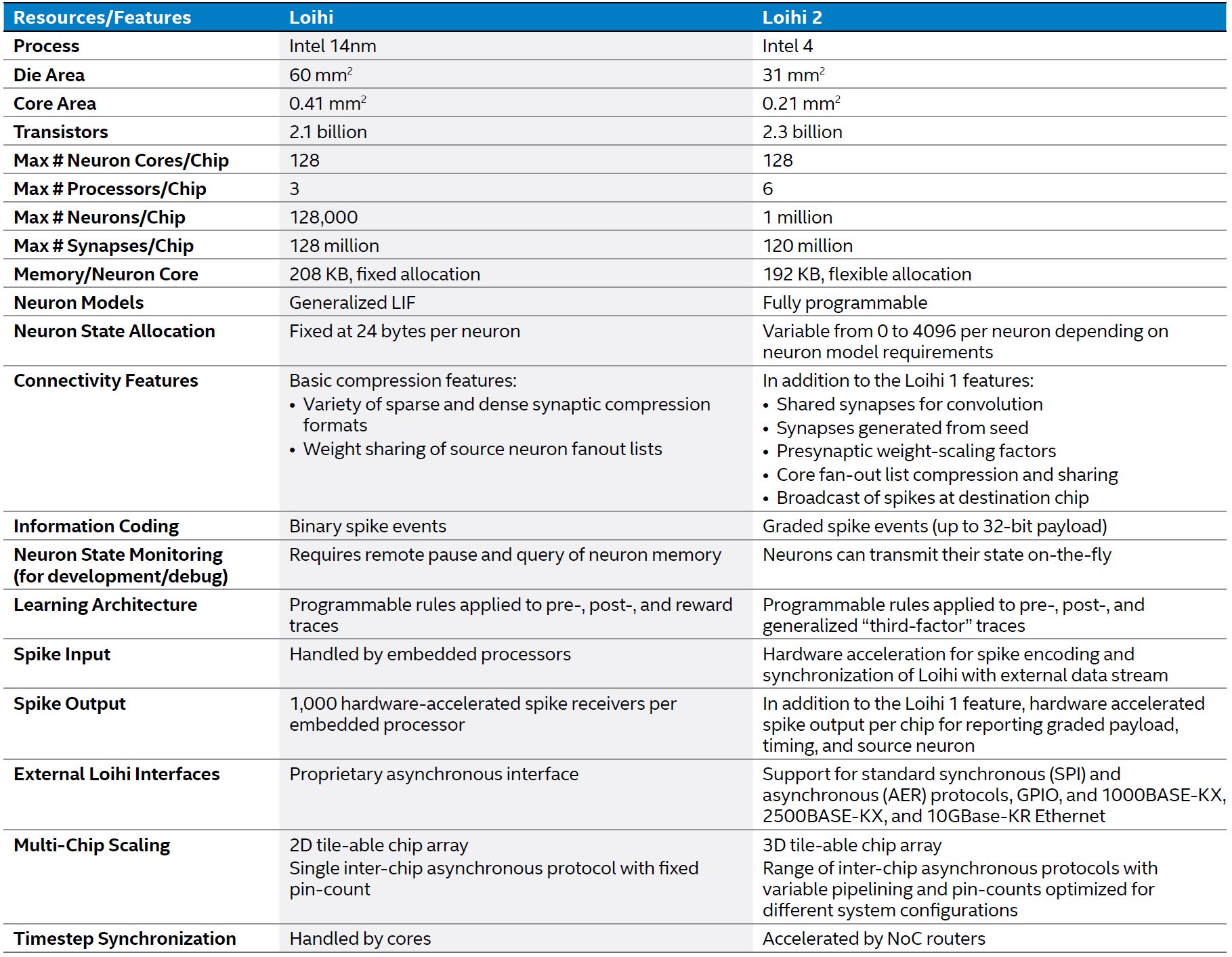

Since today’s announcement is Loihi 2, the “2” means this is a second-generation chip. Here are the key lessons learned from the original Loihi. Keep in mind that this is a relatively new area of chip design where the software frameworks are still changing, so we can expect big changes based on those lessons.

Those bigger changes are certainly implemented. One we will highlight is that in nature, neurons generally fire in binary spikes: either the spike happened or it did not. Intel Labs realized that carrying a magnitude with each spike could convey more information, and that the benefit outweighs the cost of adding magnitude as a dimension. This is an example where nature informs the design, and humans then evolve it based on math.
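To make the binary versus magnitude distinction concrete, here is a minimal Python sketch of a toy leaky integrate-and-fire neuron. This is not Intel's neuron model or any Loihi 2 API. The class name `LIFNeuron`, the `graded` flag, and the threshold and decay values are all assumptions made up for illustration.

```python
from dataclasses import dataclass


@dataclass
class LIFNeuron:
    """Toy leaky integrate-and-fire neuron (illustrative only, not Loihi 2's model)."""
    threshold: float = 1.0   # assumed firing threshold
    decay: float = 0.5       # assumed fraction of potential kept each timestep
    graded: bool = False     # False: binary spikes, True: spikes carry a magnitude
    potential: float = 0.0

    def step(self, input_current: float) -> float:
        """Advance one timestep and return the spike payload (0.0 means no spike)."""
        self.potential = self.potential * self.decay + input_current
        if self.potential < self.threshold:
            return 0.0
        # A binary spike only says "it happened"; a graded spike also says "how much".
        payload = self.potential if self.graded else 1.0
        self.potential = 0.0  # reset after firing
        return payload


def run(neuron: LIFNeuron, inputs: list[float]) -> list[float]:
    """Feed a sequence of input currents and collect the spike payloads."""
    return [neuron.step(x) for x in inputs]


inputs = [0.5, 0.75, 0.0, 2.0, 0.25]
binary_out = run(LIFNeuron(graded=False), inputs)
graded_out = run(LIFNeuron(graded=True), inputs)
print(binary_out)  # [0.0, 1.0, 0.0, 1.0, 0.0]
print(graded_out)  # [0.0, 1.0, 0.0, 2.0, 0.0]
```

Both neurons fire at the same timesteps, but only the graded version tells downstream neurons that the fourth input was twice as strong as what triggered the first spike.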

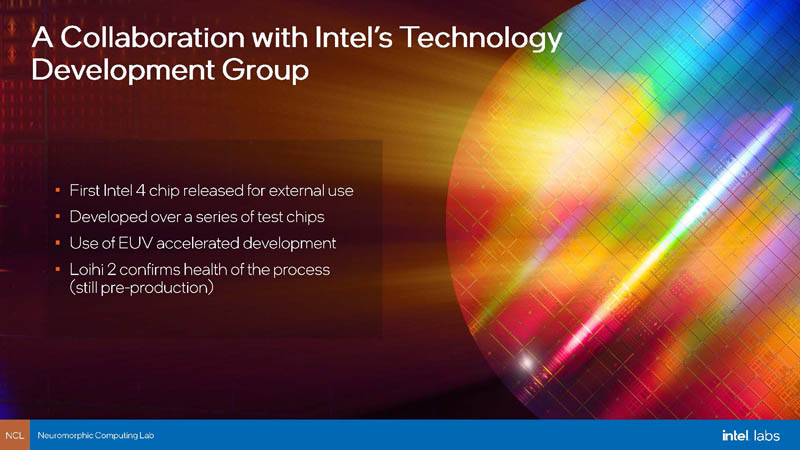

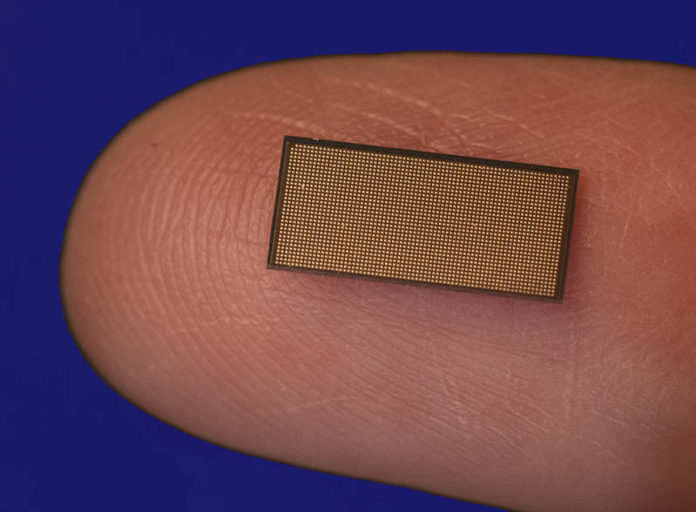

Of course, the chip is getting a huge jump in resources, and it is not doing so by getting bigger. Instead, this is a very small chip. The cover image shows the Loihi 2 chip on a pinky finger. Intel gets there by using its Intel 4 process. This is the first chip designed and fabbed on Intel 4 that is meant to be used by folks outside Intel.

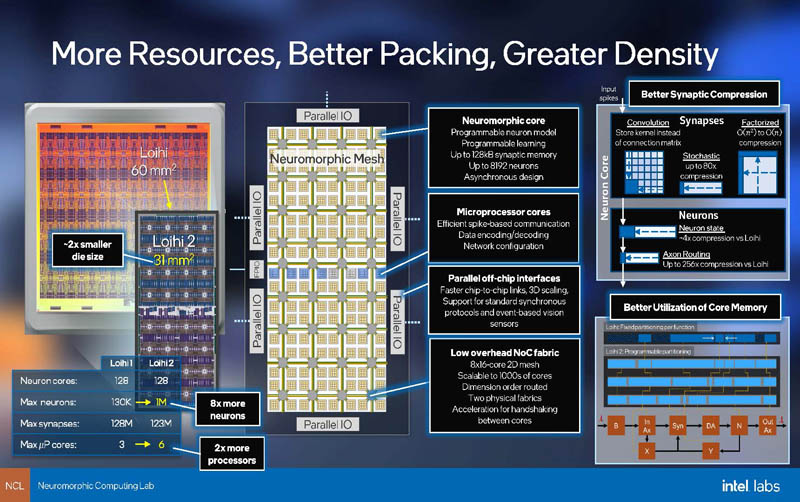

Intel added twice the cores and around 8x the number of neurons while also making more efficient use of memory and shrinking the die size by almost half. Moving forward a decade or so in process technology helps get some amazing gains.

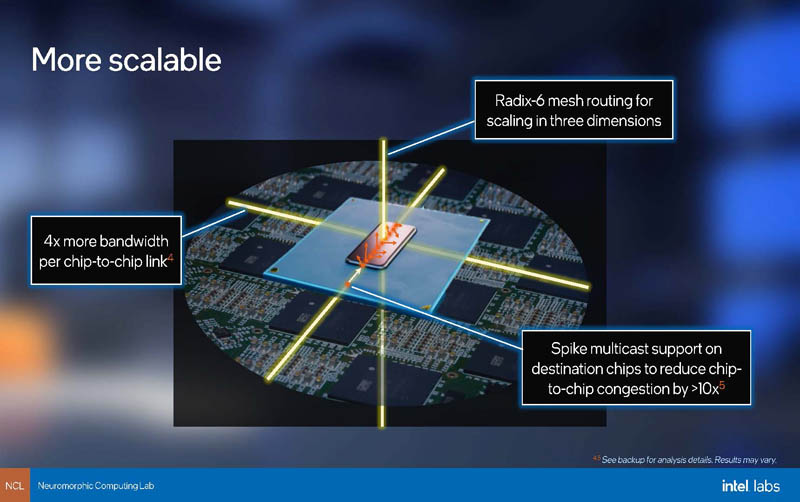

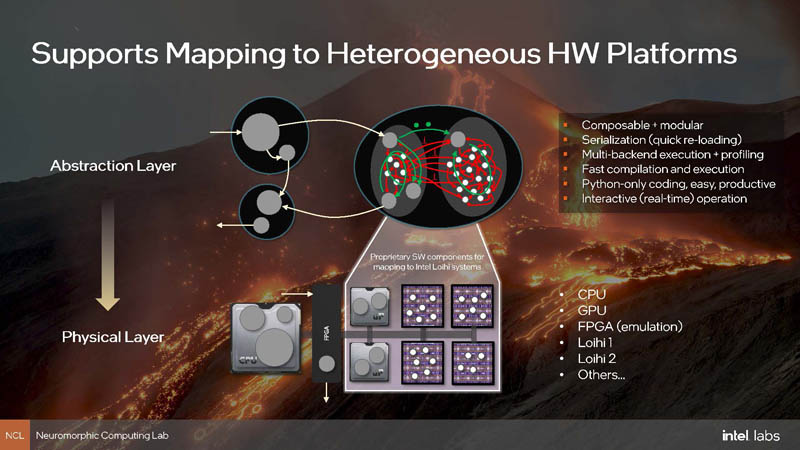

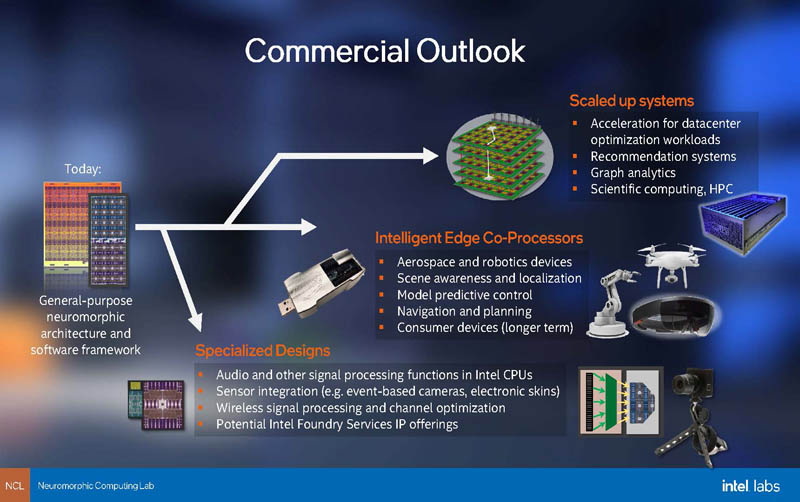

Intel Labs is also supporting larger-scale systems. These chips are relatively small, almost small enough that they could be tiles packaged alongside other forms of Intel compute, but the company’s approach to neuromorphic compute is to scale by interconnecting more chips instead of making larger chips.

Here is the Loihi vs. Loihi 2 spec comparison:

We mentioned that Intel is making Loihi 2 more available. The first solution is Oheo Gulch, which is a development card. The next hardware that Intel is announcing is Kapoho Point, which is an 8-chip solution with an Ethernet interface.

Here is a picture of the Intel Loihi 2 Oheo Gulch development board. We can see the Loihi 2 here along with a big heatsink for the Intel Arria 10 FPGA interfacing with the new chip.

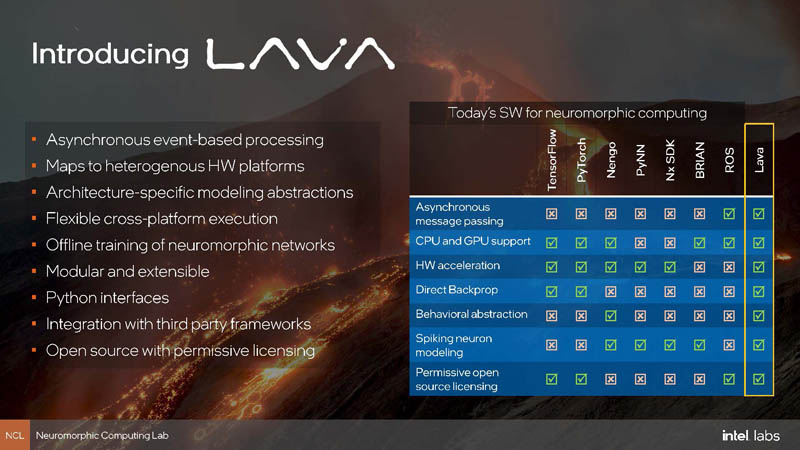

On the software side, neuromorphic computing is still a relatively immature field compared to traditional deep learning. Intel is making its LAVA framework to help move the industry forward.

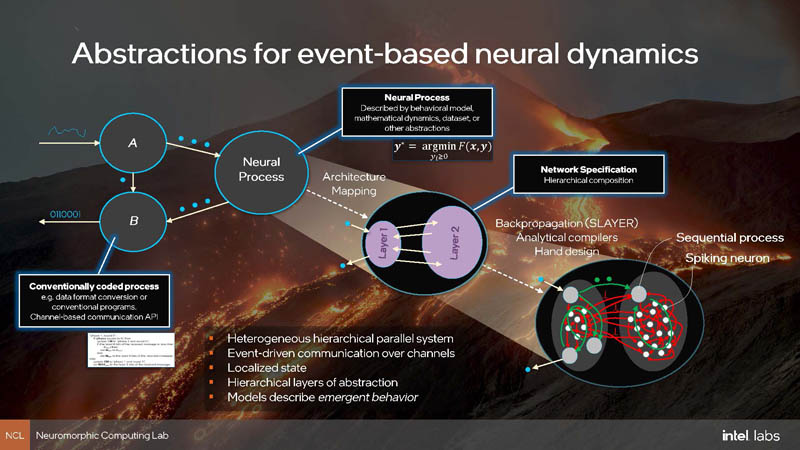

One example is event-based neural dynamics, where communication is triggered by events. These processors, and the frameworks that support them, need to know not just that something happened, but also when it happened.
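As a rough illustration of why the timestamp matters, the Python sketch below models spikes as timestamped events and recovers timing information from them. It does not use LAVA or any Intel API. `SpikeEvent`, `deliver_in_order`, `inter_spike_intervals`, and the neuron names are hypothetical and exist only for this example.

```python
import heapq
from typing import Iterable, Iterator, NamedTuple


class SpikeEvent(NamedTuple):
    time: int      # timestep at which the spike occurred
    source: str    # hypothetical neuron identifier
    payload: int   # spike magnitude (1 for a plain binary spike)


def deliver_in_order(events: Iterable[SpikeEvent]) -> Iterator[SpikeEvent]:
    """Yield events in timestamp order, as an event-driven runtime might."""
    queue = list(events)
    heapq.heapify(queue)  # tuples compare by time first
    while queue:
        yield heapq.heappop(queue)


def inter_spike_intervals(events: Iterable[SpikeEvent], source: str) -> list[int]:
    """Gaps between consecutive spikes from one source; only computable with timestamps."""
    times = [e.time for e in deliver_in_order(events) if e.source == source]
    return [later - earlier for earlier, later in zip(times, times[1:])]


# Events may arrive out of order; nothing is computed for timesteps with no events.
events = [
    SpikeEvent(7, "n0", 1),
    SpikeEvent(2, "n0", 1),
    SpikeEvent(3, "n1", 4),
    SpikeEvent(4, "n0", 1),
]
ordered = list(deliver_in_order(events))
intervals = inter_spike_intervals(events, "n0")
print([e.time for e in ordered])  # [2, 3, 4, 7]
print(intervals)                  # [2, 3]
```

Without the `time` field, the three spikes from `n0` would be indistinguishable from one another, and the spacing between them would be lost.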

Intel, as an xPU company, intends for LAVA to map work to the appropriate xPU. This is a theme across Intel that we also see in the company’s vision for oneAPI. At this point, LAVA is more of a project with cool background slides than the TensorFlow of the neuromorphic world.

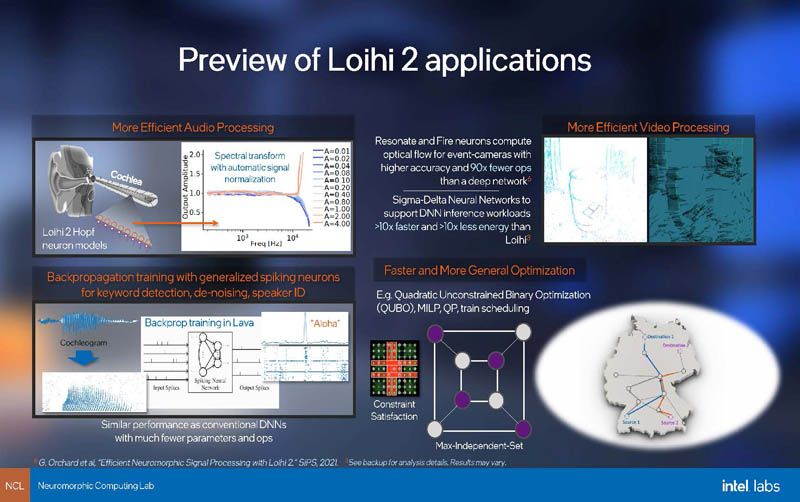

Since this is a labs part, Intel Labs has some potential applications for the new chips that we will let our readers browse.

Today’s Loihi 2 chips are not necessarily designed to be commercialized. This is still an Intel Labs project instead of a product line at this point.

Final Words

In a stroke of luck (we hope) Intel is releasing its new Hawaiian-themed Loihi 2 chips and LAVA framework on the same day that Hawaii’s Kilauea Volcano on the Big Island started to erupt. The technology is cool, but if you are the type of person that does not believe in coincidence, then that is great timing on Intel’s part, especially since STH was pre-briefed on the launch before the eruption.

BrainChip’s Akida, Esperanto’s ET-S1, Intel Loihi… I understand these are still not mature enough tech, but wouldn’t it be interesting to have them integrated in the design/thinking of the most powerful GPGPUs? Just like what was done with NVidia’s converged accelerators?

With the upcoming MI200s, and their x4.9 FP64 perf increase versus A100, AMD has just shown (I might be wrong) a leaning towards HPC perf, while NVidia is more on a common ground between AI and HPC, as reinforced in their papers on “The convergence of AI and HPC”. Who am I to judge: the FP64 specs at the announcement of the Ampere architecture were rather disappointing, even though one can be happy with the tensor cores’ aid… and a x4.9 perf stride is HUGE for a 2nm decrease.

Now, even though modern HPC scientific workflows can benefit from some onboard AI acceleration, I can’t help but ask myself if a more specialized AI approach (integrated or onboard neuromorphic/RISC logic) would not be more efficient in those fields, regarding problem formulation, simulation and cluster setups, real-time simulation and data analytics (right at the start of a simulation), energy consumption, and time-to-solution. What do you think?