Google has (slowly) been opening up about some of its custom-designed hardware. The newest disclosure this week, something we have hinted at since the Xilinx Live Video Transcoding Product Line Launch last year, is that Google has its own video transcoding hardware. Specifically, the Google/ YouTube VCU, or Video Coding Unit, is designed to accelerate the company's video workloads across multiple data centers. YouTube has many hundreds of hours of video uploaded every minute (a shameless plug: subscribe to the STH YouTube Channel here so you can see our content). YouTube Live has hundreds of thousands of streams. Google Photos and Drive also handle video. Google also has Stadia, its cloud gaming service, which can use VCU-based VP9 encoding to deliver 4K 60fps gaming on 35Mbps connections. Most in the industry would agree that Google/ YouTube does a lot of work with video, and so there is an accelerator for that: the VCU.

Google YouTube VCU For Warehouse-scale Video Acceleration

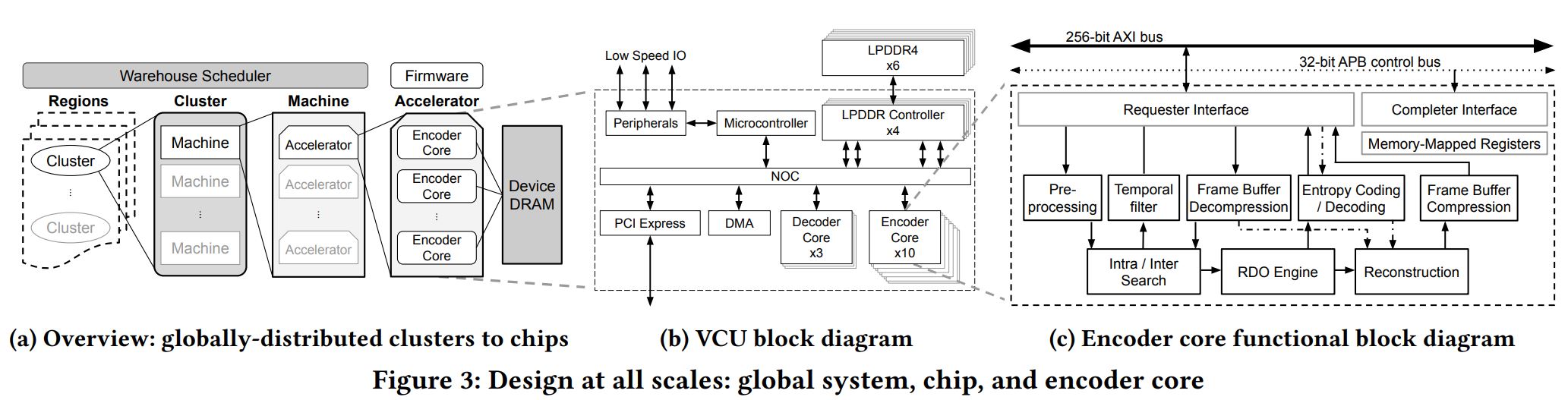

One of the really interesting parts of Google's disclosure is that the company did more than just say it has a new card. Instead, in its paper (certainly worth a read if you are interested in this), Google laid out how the VCU is used in a warehouse-scale workflow. We often see details on how an accelerator works, and sometimes additional detail on a specific aspect. Given its scale, however, Google is solving a big problem, so much of the work is in managing the workload, not just in the accelerator itself.

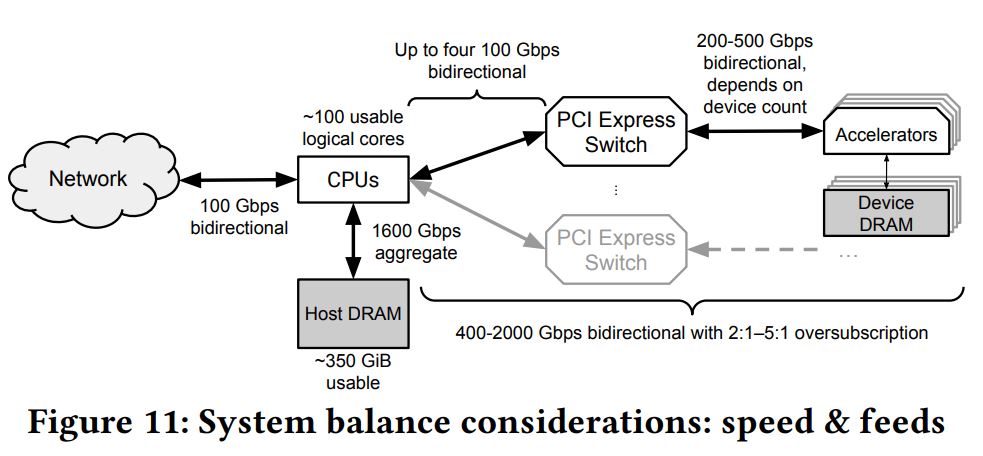

Google also spent time optimizing the balance of speeds in its dedicated VCU machine infrastructure. Specifically, one can see that the balance centers on how bandwidth inside a system should be provisioned for the given network throughput. That is different from some other designs we have seen that focus more on raw accelerator throughput, and it is an example of how Google breaks a problem down at scale.
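To make the balancing idea concrete, here is a toy model that is not taken from Google's paper. Each component has a capacity and an assumed amplification factor: how many bits it must move per bit arriving over the network (decoded frames are far larger than compressed streams, so internal traffic can exceed network traffic). The network throughput a machine can sustain is then the minimum, over components, of capacity divided by amplification. The function name `max_network_gbps` and every number in the example are hypothetical placeholders, not Google's figures.

```python
# Toy balance model: which component caps the network throughput a
# transcoding machine can sustain? All numbers below are hypothetical
# placeholders, not figures from Google's paper.

def max_network_gbps(capacity_gbps: dict[str, float],
                     amplification: dict[str, float]) -> tuple[float, str]:
    """Return (sustainable network Gbps, limiting component).

    capacity_gbps[c] is how many Gbps component c can move;
    amplification[c] is how many bits c must move per bit of network
    traffic (an assumed, workload-dependent factor).
    """
    limits = {c: capacity_gbps[c] / amplification[c] for c in capacity_gbps}
    limiting = min(limits, key=limits.get)
    return limits[limiting], limiting

# Hypothetical machine: a NIC, host memory, and PCIe links to the cards.
capacity = {"network": 100.0, "host_memory": 800.0, "pcie": 1260.0}
amplification = {"network": 1.0, "host_memory": 4.0, "pcie": 2.0}
print(max_network_gbps(capacity, amplification))  # (100.0, 'network')
```

In this model, a balanced design sizes the internal stages so their limits sit at or just above the network's limit, instead of overprovisioning any one of them.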

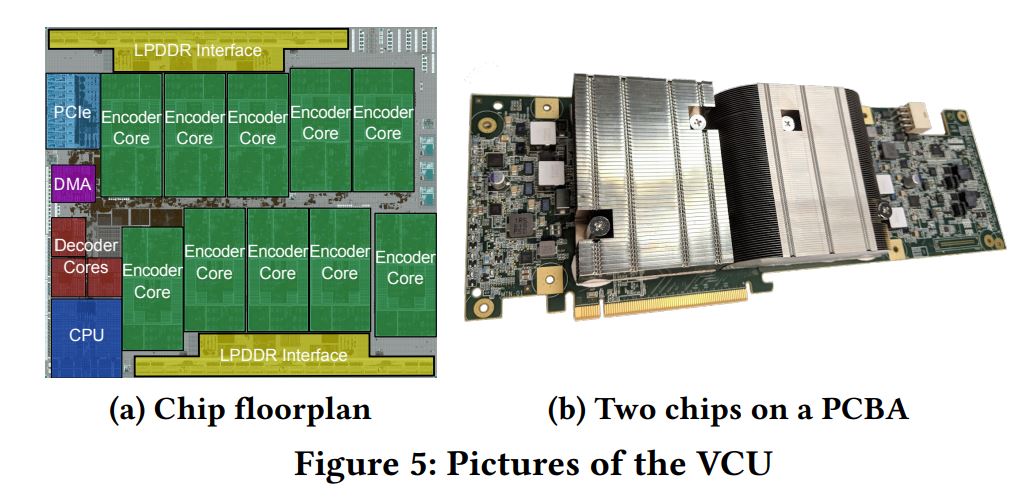

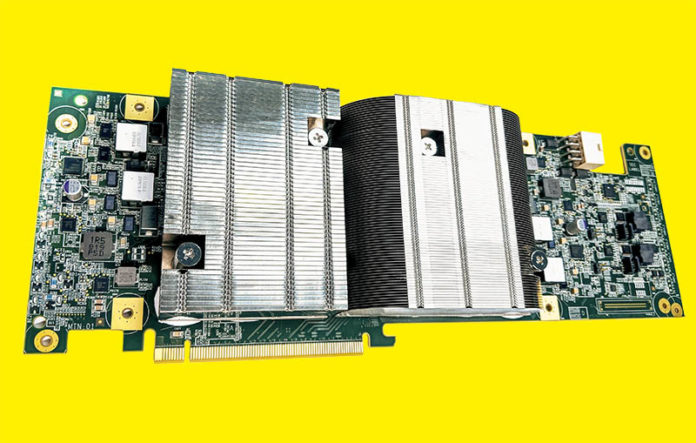

Google says that its production systems use ten cards, each with two VCU accelerators, for a total of 20 VCUs. Each card puts its two VCUs on a PCIe Gen3 x16 bus.
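For a sense of scale, the host link arithmetic per card is sketched below. It assumes the standard PCIe Gen3 signalling rate (8 GT/s per lane with 128b/130b line encoding) and ignores packet and protocol overhead, so real throughput will be somewhat lower. Splitting the link evenly between the two VCUs is also an assumption made for illustration.

```python
# Back-of-the-envelope PCIe math for one VCU card, assuming the standard
# PCIe Gen3 signalling rate (8 GT/s per lane, 128b/130b line encoding).
# Protocol overhead (packet headers, flow control) is ignored, so real
# throughput will be somewhat lower than these figures.

GEN3_TRANSFERS_PER_S = 8e9   # transfers per second per lane
GEN3_ENCODING = 128 / 130    # 128b/130b line encoding efficiency
LANES = 16                   # each card sits on a x16 link
VCUS_PER_CARD = 2
CARDS_PER_SYSTEM = 10

def pcie_gen3_bytes_per_s(lanes: int) -> float:
    """Raw one-direction bandwidth of a Gen3 link, in bytes/s."""
    return GEN3_TRANSFERS_PER_S * GEN3_ENCODING * lanes / 8

card_bw = pcie_gen3_bytes_per_s(LANES)
per_vcu_bw = card_bw / VCUS_PER_CARD   # assumes an even split between VCUs
total_vcus = CARDS_PER_SYSTEM * VCUS_PER_CARD

print(f"VCUs per system:         {total_vcus}")
print(f"x16 link, per direction: {card_bw / 1e9:.2f} GB/s")
print(f"share per VCU:           {per_vcu_bw / 1e9:.2f} GB/s")
```

This prints 20 VCUs, roughly 15.75 GB/s per direction for the x16 link, and about 7.88 GB/s per VCU if the link is shared evenly.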

Each of the accelerators has ten encoder cores, four 32b LPDDR4-3200 memory channels (8GB of memory usable after ECC), smaller decoder cores, and a CPU, PCIe Gen3, and DMA for connecting and controlling the accelerator. Note the emphasis on encoding: many GPU implementations instead emphasize decoder cores, since those devices are meant to accelerate playback rather than encoding of video. Google says that memory was a major challenge, as each VCU requires somewhere in the range of 27-37GiB/s of bandwidth.
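The memory figures help explain why bandwidth was a challenge. Four 32-bit channels make a 128-bit-wide interface; the sketch below computes its theoretical peak at 3200 MT/s and compares that with the quoted 27-37GiB/s requirement. It ignores refresh, bank conflicts, and any ECC overhead, so sustained bandwidth would be lower than this peak. It also totals the encoder cores in a 20-VCU production system.

```python
# Theoretical peak DRAM bandwidth of one VCU: four 32-bit LPDDR4 channels
# at 3200 MT/s. Refresh, bank conflicts and ECC overhead are ignored, so
# sustained bandwidth will be lower than this peak.
CHANNELS = 4
BITS_PER_CHANNEL = 32
TRANSFERS_PER_S = 3200e6
GIB = 2**30

bus_width_bits = CHANNELS * BITS_PER_CHANNEL
peak_bytes_per_s = bus_width_bits / 8 * TRANSFERS_PER_S
peak_gib_per_s = peak_bytes_per_s / GIB

# Per-VCU bandwidth requirement quoted in the article, in GiB/s.
NEED_LOW, NEED_HIGH = 27.0, 37.0

# Encoder cores in a production system: 20 VCUs x 10 encoder cores each.
encoder_cores = 20 * 10

print(f"bus width: {bus_width_bits} bits")
print(f"peak:      {peak_bytes_per_s / 1e9:.1f} GB/s ({peak_gib_per_s:.1f} GiB/s)")
print(f"required:  {NEED_LOW / peak_gib_per_s:.0%}-{NEED_HIGH / peak_gib_per_s:.0%} of peak")
print(f"encoder cores per system: {encoder_cores}")
```

That works out to about 51.2 GB/s (roughly 47.7GiB/s) of theoretical peak per VCU, so the stated requirement is around 57-78% of peak, which leaves relatively little headroom once real-world DRAM inefficiencies are counted.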

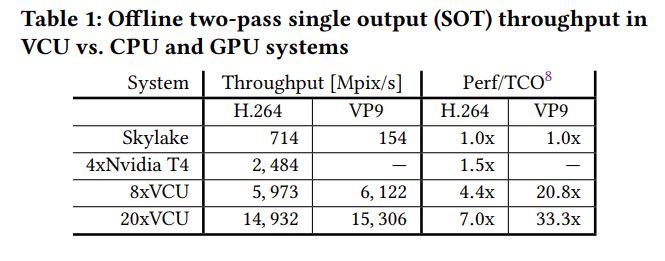

For its comparison, Google uses a dual-socket Intel Xeon Skylake-based server with 384GB of memory and another server with four NVIDIA T4s to show its performance speedups.

On the speedup side, Google shows both H.264 and VP9. Given where we are in the hardware/ software ecosystem, H.264 can be seen as the most commonly supported codec. VP9 is the newer codec; it can offer higher quality at lower bitrates, but the trade-off is compute. It is fairly clear that part of the VCU's charter was finding a way to deliver both VP9 and H.264 content to maximize quality and minimize bandwidth. On CPUs, VP9 was too costly to run at scale, so that is where the VCU offered a tremendous performance uplift.

The Google team had some very interesting insights around deploying the accelerator. For example, it observed around 40Gbps of inter-socket traffic, then increased performance by 16-25% by adding NUMA-aware scheduling for the accelerator jobs. It also found that doing some decoding on the CPUs helps avoid stranding encoder cores.
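Both scheduling ideas can be illustrated with a toy placement function. This is not Google's scheduler: the classes `Vcu` and `Job` and the function `place` are hypothetical, and the policy is a simplified assumption based on the description above. It prefers a VCU attached to the same NUMA node as the job's input, then the least-loaded one, and it falls back to decoding on the host CPU when a VCU's decoder cores are busy but an encoder core is still free.

```python
from dataclasses import dataclass

# Hypothetical model of accelerator placement; not Google's actual scheduler.

@dataclass
class Vcu:
    name: str
    socket: int          # NUMA node whose PCIe root the card is attached to
    free_encoders: int   # idle encoder cores
    free_decoders: int   # idle decoder cores

@dataclass
class Job:
    name: str
    input_socket: int    # NUMA node holding the job's input buffers

def place(job: Job, vcus: list[Vcu]) -> tuple[str, str]:
    """Reserve one encoder core for `job` and decide where to decode it.

    Returns (vcu_name, decode_on), where decode_on is "vcu" or "cpu".
    """
    candidates = [v for v in vcus if v.free_encoders > 0]
    if not candidates:
        raise RuntimeError(f"no free encoder core for {job.name}")
    # NUMA-aware choice: local VCUs sort first (False < True), then the
    # VCU with the most idle encoder cores.
    best = min(candidates,
               key=lambda v: (v.socket != job.input_socket, -v.free_encoders))
    best.free_encoders -= 1
    if best.free_decoders > 0:
        best.free_decoders -= 1
        return best.name, "vcu"
    # Decoders are busy but an encoder core is free: decode on the host CPU
    # rather than leave that encoder core stranded.
    return best.name, "cpu"

vcus = [Vcu("vcu0", socket=0, free_encoders=1, free_decoders=0),
        Vcu("vcu1", socket=1, free_encoders=2, free_decoders=1)]
print(place(Job("a", input_socket=0), vcus))  # ('vcu0', 'cpu')
print(place(Job("b", input_socket=0), vcus))  # ('vcu1', 'vcu')
```

A production scheduler would also weigh memory bandwidth, job sizes, and the actual cost of crossing sockets, all of which this sketch ignores.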

Final Words

The Google/ YouTube team says that it is achieving 20-33x better performance per cost than running the workloads on CPUs. Scale plus an order-of-magnitude speedup means that it makes a lot of sense for Google to develop this solution.

The ACM paper is excellent. Still, given that we had heard rumors of this card in the industry for some time, that it offers a TCO benefit, and that Google often does not disclose its newest hardware, there is a non-zero chance that Google already has a next-generation VCU at some stage of development. Either way, it is always inspiring to learn how smart people solved big challenges. Many of our readers do not focus on video encoding/ decoding, especially at this scale, but we simply suggest that our readers download and tag the paper for some inspirational weekend reading.

Also, if anyone from Google sees this, we would be happy to help take better photos of hardware to support this type of work. We can use our studio in Mountain View, CA if it helps folks learn and get inspired by this type of work.

Google employee here :) Anon as I’m not authorized to speak publicly.

Like you mentioned, there is a non-zero chance there is a newer version of this card; there is also a non-zero chance this photo was intentionally left ‘bland’ to protect trade secrets. We have plenty of audio/video facilities in-house :)

That’s one HECK of an MPEG card. Not surprised that YouTube would have these. Very cool.

Now, all we need is NVENC on GeForce capable of AV1 encoding.

Patrick, I’m not clear on this bit:

“Each of the accelerators have ten encoder cores, four 32b LPDDR4-3200 memory channels (8GB of memory usable after ECC)…”

What do “four 32b” amount to?

I wasn’t aware that ECC had a subtractive effect on usable RAM – is this inherent and inescapable? I’ve never seen any articles mention a reduced “usable” RAM figure due to ECC in the context of normal server DDR4 modules.

What’s funny is that there were some pics circulating about it before the announcement: https://www.techpowerup.com/forums/threads/is-this-a-video-card.279701/

Cool. Can’t wait to get one of these off ebay for my home Plex server :D

jk

I have 6 of these cards :-)