One of the strangest slices you can take of the November 2017 Top500 list is by the interconnect used. We see a number of Infiniband (Mellanox) and Omni-Path (Intel) installations, as one expects. There is a 100GbE system, but in the HPC space, low-latency interconnects are a major differentiator. As workloads are distributed across nodes, passing information via MPI or another system can become a major bottleneck and limit the amount of work a system can get done. Indeed, the rhetoric between the Intel Omni-Path and Mellanox Infiniband camps is palpable at this point when talking to individuals aligned with either camp. In the November 2017 list there is an oddball: 25GbE.

We do not mean this piece to disparage 25GbE in any way. It is a great technology and one we expect will rapidly supplant 10GbE in the data center space, especially in public and private cloud deployments. At the same time, Ethernet is a relatively slow stack compared with the HPC-optimized interconnects, which is a drawback for HPC. Likewise, 25GbE offers a quarter of the per-link speed of 100Gbps connections such as 100G Omni-Path and EDR Infiniband, and an eighth of the 200Gbps HDR Infiniband speeds we are seeing at the show. If you were building a supercomputer, we would not expect 25GbE to be on the list. With that said, there are 19 systems on the November 2017 list that are using the technology.
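To put the per-link speeds in perspective, here is a minimal sketch of how long a fixed payload would take to cross each link at its nominal line rate. The 1 GB payload and the `transfer_seconds` helper are our own assumptions for illustration, and the calculation ignores latency, protocol overhead, and congestion, so real transfers would take longer.

```python
def transfer_seconds(payload_bytes: float, link_gbps: float) -> float:
    """Idealized time to move a payload at the nominal line rate.

    Assumes no latency or protocol overhead (a deliberate simplification).
    """
    bits = payload_bytes * 8
    return bits / (link_gbps * 1e9)


# Hypothetical 1 GB (10^9 bytes) payload, chosen only for illustration.
PAYLOAD_BYTES = 1e9

# Nominal per-link speeds named in the article, in Gbps.
links = [
    ("25GbE", 25),
    ("100G Omni-Path / EDR Infiniband", 100),
    ("HDR Infiniband", 200),
]

for label, gbps in links:
    print(f"{label}: {transfer_seconds(PAYLOAD_BYTES, gbps):.3f} s")
```

Under these idealized assumptions the same payload takes about 0.32 s on 25GbE, 0.08 s on a 100Gbps link, and 0.04 s on a 200Gbps link.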

November 2017 Top500 List Interconnects

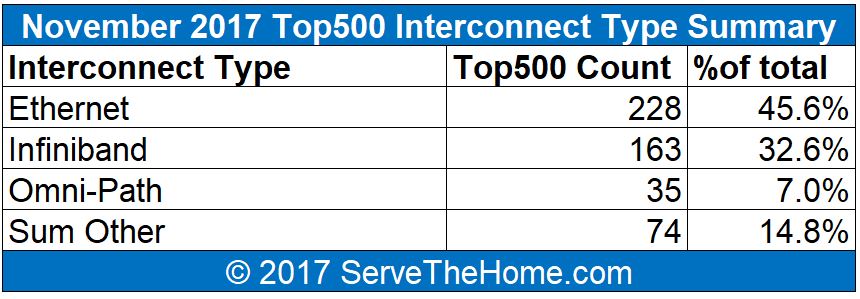

The first question many of our readers will ask is what types of interconnects are being used. We lumped the numbers from the latest Top500 list into four categories: Ethernet, Infiniband, Omni-Path, and Other. Here is what that view looks like:

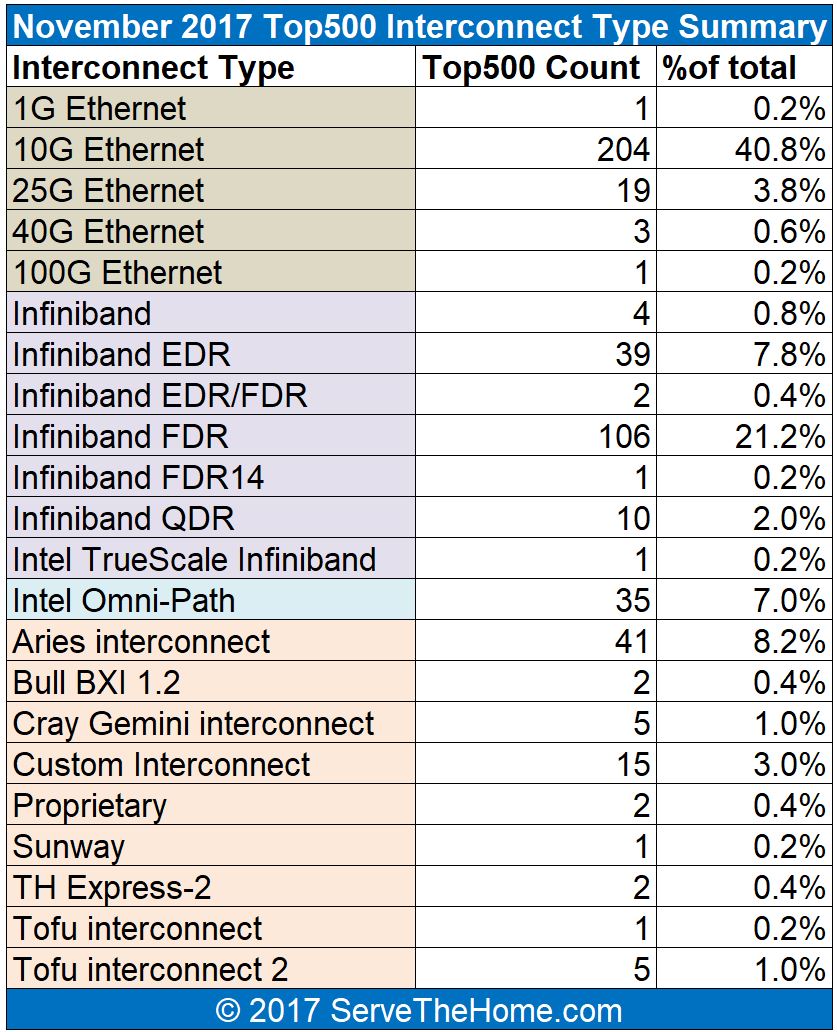

Other encompasses Aries and other types of interconnects that we do not cover widely on STH. When we drill down into these figures, here is what we see in terms of distribution:

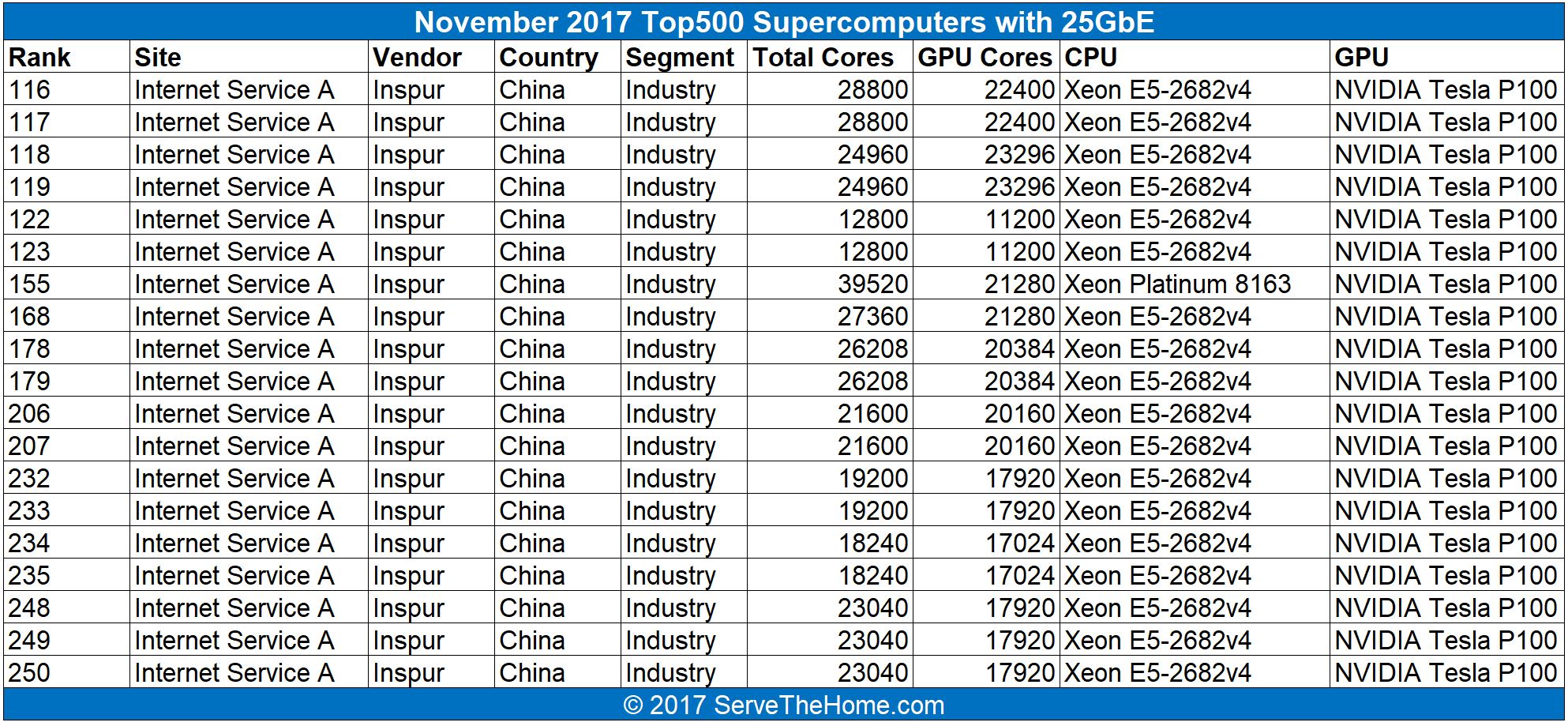
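For readers who want to reproduce this kind of grouping, here is a rough sketch of how free-form interconnect descriptions could be bucketed into the four categories used above. The `categorize` function, its keyword rules, and the sample strings are our own assumptions for illustration; the sample strings are not rows copied from the Top500 list, and the real list may describe interconnects differently.

```python
from collections import Counter


def categorize(interconnect: str) -> str:
    """Map a free-form interconnect description to one of four buckets."""
    name = interconnect.lower()
    if "omni-path" in name or "omnipath" in name:
        return "Omni-Path"
    if "infiniband" in name:
        return "Infiniband"
    if "ethernet" in name or "gbe" in name:
        return "Ethernet"
    # Anything unrecognized (for example Aries) falls into Other.
    return "Other"


# Illustrative strings only; these are NOT actual entries from the list.
samples = [
    "25G Ethernet",
    "10GbE",
    "Infiniband EDR",
    "Intel Omni-Path",
    "Aries interconnect",
]

counts = Counter(categorize(s) for s in samples)
for category, count in counts.items():
    print(f"{category}: {count}")
```

Simple keyword matching like this is fragile, so it is worth spot-checking anything that lands in Other before trusting the totals.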

The 10GbE systems were deployed years ago, and you can see that most systems on the list are running 40Gbps or faster interconnects. The 19 entries for 25GbE are interesting for a few reasons. 25GbE is relatively slow for a supercomputer interconnect these days. On the other hand, it is popular in web and cloud infrastructures. When we looked into the details of the 19 entries using 25GbE, here is what we saw:

All 19 systems are new on this latest list; no 25GbE systems appeared before November 2017. As you can see, all of them are Inspur systems using Intel CPUs and NVIDIA Tesla P100 GPUs. Some of them have nearly identical specs.

Chatter around the SC17 show was that these are not running traditional HPC workloads and instead are web providers benchmarking web / cloud clusters using Linpack just to fill the list. That narrative helps explain the choice of a 25GbE (Mellanox, from what we are told) interconnect. At SC17 it was certainly a heated topic of discussion that could be heard all over. It certainly is a move that shifted some commonly watched slices of the list and created attention-grabbing headlines.
