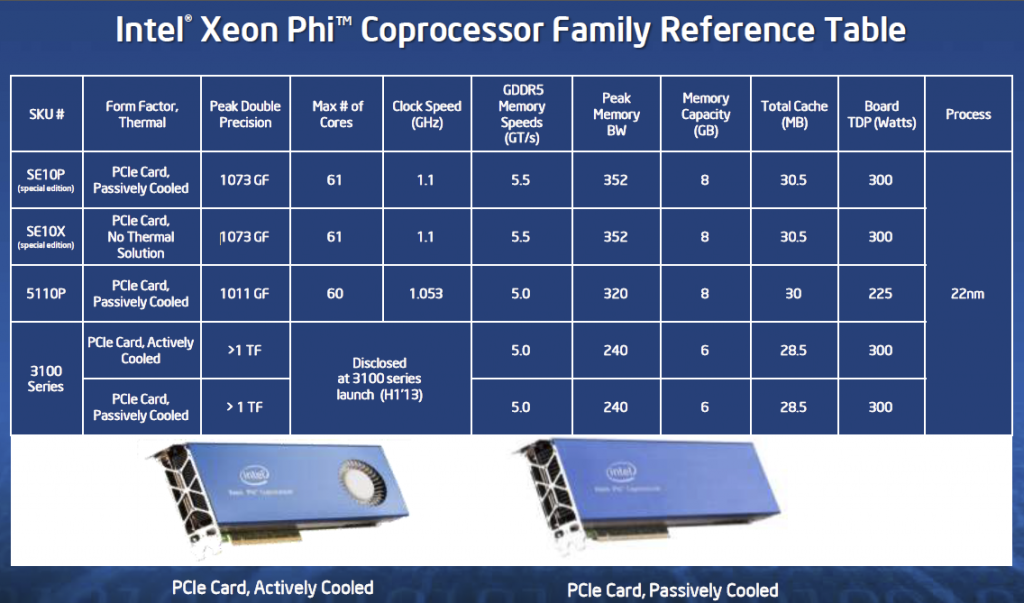

Intel finally released its first commercially available Larrabee derivative, the Intel Xeon Phi coprocessor 5110P. It has 60 four-thread, 64-bit x86 cores with enhanced vector units. The 30MB of L2 cache on each chip works out to 512KB per core. The Intel Xeon Phi 5110P coprocessor is a passively cooled card with a 225W TDP, meant for HPC applications. The special edition parts used in TACC’s Stampede supercomputer had 61 cores and a 300W TDP. The cards are manufactured on Intel’s 22nm 3D tri-gate process and still have roughly twice the TDP of high-end Intel Xeon E5 chips on the 32nm process. Clearly, these are big parts! Here is a list of the Intel Xeon Phi coprocessor family.
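The per-core cache figure follows directly from the chip totals. As a quick worked check (treating 1MB as 1024KB):

$$
\frac{30\,\text{MB}}{60\ \text{cores}} = \frac{30 \times 1024\,\text{KB}}{60} = 512\,\text{KB per core},
\qquad
60\ \text{cores} \times 4\ \text{threads/core} = 240\ \text{hardware threads}.
$$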

Interestingly enough, the Xeon Phi 3100 series may find its way into high-end workstations in 2013. As the list shows, they are 57-core parts with 6GB of GDDR5, compared to 8GB on the 5110 series. I covered the Intel Xeon Phi family and TACC’s Stampede on Tom’s Hardware. That is a cool piece if you have never seen a supercomputer being built.

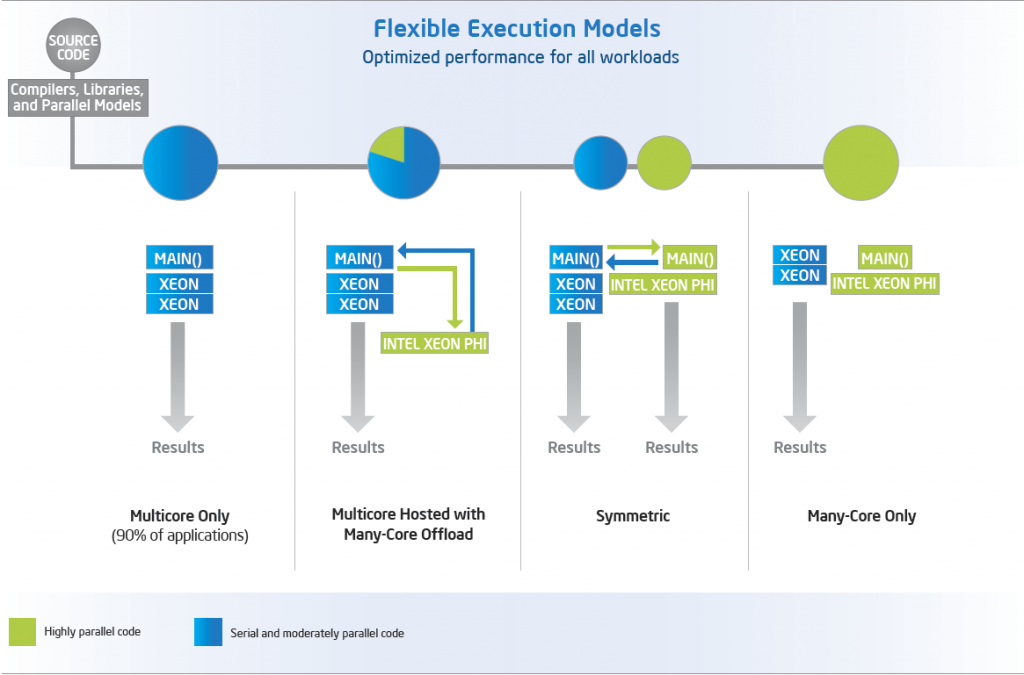

The breakthrough with the Intel Xeon Phi is that it is not GPU-based. Instead, it looks more like a Linux cluster node. It runs Linux on the card, which occupies one core, and the card can execute code itself. Here is Intel’s view on execution models with Intel Xeon E5s:

As one can see, the Intel Xeon Phi can be used fairly flexibly. One other major point Intel stressed was the ability to use OpenMP directives to optimize not only for current-generation Intel Xeon E5 and Xeon E3 series CPUs but also for the Intel Xeon Phi. That means the same framework is used for the Intel Xeon E5-2690 as for the Intel Xeon Phi. Sure, the Xeon Phi costs a few thousand dollars, much like two Xeon E5-2690s, but it offers a 2x or greater improvement in highly parallel tasks that can take advantage of the architecture.
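To make the "same framework" point concrete, here is a minimal sketch of OpenMP-annotated C. It is our own illustration, not Intel's sample code: `saxpy` and `dot` are hypothetical helpers, and the directives are standard OpenMP 4.0 (`parallel for simd`, `reduction`). Built with an OpenMP compiler (for example `gcc -fopenmp`), the loops spread across host cores and vector units. As we understand it, Intel's compiler of the time could build the same file to run natively on the card via its `-mmic` option. Without OpenMP enabled, the pragmas are simply ignored and the code runs serially.

```c
#include <stddef.h>

/* SAXPY: y[i] = a * x[i] + y[i].
 * "parallel for" splits the iterations across threads; "simd" asks the
 * compiler to vectorize each thread's chunk. Without OpenMP the loop runs
 * serially and gives the same result. */
void saxpy(size_t n, float a, const float *x, float *y)
{
    #pragma omp parallel for simd
    for (size_t i = 0; i < n; ++i)
        y[i] = a * x[i] + y[i];
}

/* Dot product: reduction(+:sum) gives each thread a private partial sum,
 * and OpenMP adds the partial sums together when the loop finishes. */
double dot(size_t n, const double *x, const double *y)
{
    double sum = 0.0;
    #pragma omp parallel for simd reduction(+:sum)
    for (size_t i = 0; i < n; ++i)
        sum += x[i] * y[i];
    return sum;
}
```

The point is that nothing in the source names a particular chip. The same directives describe the parallelism, and the compiler and runtime decide how many threads and how wide a vector to use.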

Of course, here is an obligatory die shot:

Intel Xeon Phi 5110P Pricing

The Intel Xeon Phi Coprocessor 5110P is already shipping to OEMs, with general availability on January 28, 2013. The Xeon Phi 5110P has an MSRP of $2,649. The Intel Xeon Phi coprocessor 3100 series will be available in 1H 2013 with a sub-$2,000 MSRP.

Closing Thoughts

Ten years ago, who would have thought something like this would be on the market? It does make me salivate at the upcoming Haswell, Atom and ARM war, though. These are signs of things to come, not just in the x86 and supercomputing space; these architectures are going to migrate into cloud applications soon enough.

I also have a bunch of pictures from the trip to TACC and of Stampede being assembled. Let me know if any of that is of interest to you.

Pardon my ignorance here… I know that this card is essentially a GPU, but what’s throwing me off is the x86 instructions.

Can this card be put to use right now, today, on tasks that something like a dual 2687W would crunch?

Or will it need software written specifically for it, with x86 just making things easier for coders who are used to it, instead of CUDA/OpenCL/etc.?

That’s some neat tech. I really liked your article on Tom’s, Patrick. How did you like Texas? Ready to move here yet?

For me at least, this has some real promise. My biggest PC chokers are 2D flood modeling and some of my spatial analyst tools in GIS. If we as a community can get these guys to write some multi-core x86 updates to their code, and I could actually buy one of these Phi cards, I would be off to the races!

Lorne – It is not a GPU, despite how it may look. A better way to think of it is as a Linux cluster compute node on a PCIe 2.0 bus. You can SSH into these cards. The node has ~60 x86 cores similar to Pentium cores, but with huge vector units, and each core can handle 4 threads. You also get 8GB on the 5110 family and 6GB on the 3100 family. The big thing is that these look like compute nodes rather than GPUs.
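Because the card runs its own Linux, ordinary POSIX code behaves much as it would on any cluster node. Here is a minimal sketch, assuming a Linux/glibc-style system: the hypothetical helper `online_cpus` asks the kernel how many logical CPUs are online. On a host, this is cores × hardware threads. On the card's own Linux, we would expect it to count each hardware thread (on the order of 60 × 4 on a 5110P), though we have not checked the exact figure on real hardware. `_SC_NPROCESSORS_ONLN` is a widely supported extension rather than a strict POSIX requirement.

```c
#include <unistd.h>

/* Hypothetical helper: number of logical CPUs (hardware threads) the running
 * kernel reports as online. Uses the _SC_NPROCESSORS_ONLN extension. */
long online_cpus(void)
{
    long n = sysconf(_SC_NPROCESSORS_ONLN);
    return n > 0 ? n : 1; /* fall back to 1 if the query is unsupported or fails */
}
```

A program could use this count to size its worker pool, for example one thread per logical CPU, without knowing whether it landed on a Xeon host or on the card.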

worldskipper – Thanks! Not sure if I’m ready for the move just yet, but I did like Austin. Having the Formula 1 race there is also very cool! It will be interesting to see how these get adopted. The actively cooled 3100-series Intel Xeon Phi parts would be interesting for applications like yours.

Well, we are always looking for hard-working people here in the Lone Star State, and NO INCOME TAX!

Do you know if these Intel cards will be available in the retail channels (provantage, newegg, amazon etc)?

From what I understand, the 5110P is not going to be a channel part (maybe on eBay in a year or two). The 3110A (active cooling) has a better chance.

Also, you *might* be able to call up a Supermicro partner and order one through them. Have not confirmed this yet.

You need to benchmark these Patrick. We expect you to buy one.

Let’s also get more pics of the supercomputer install.

Show us everything. The more pictures the better.

Can I use this as a GPU? For $3,000 USD with tax, I should at least be able to play games on it.

Hi folks! I’m new here, but I’ve been lurking for a while. This is a *great* site and has become my fave website; I use others only for sanity checks.

Guys, I’m really late to the races on this Phi thing. My intuition is that it does not undercut CUDA; rather, the two (Intel’s Phi and CUDA) address different code spaces.

My exposure to CUDA has been limited to financial quants [1]. In those cases they were taking massive C programs and briefly offloading/round-tripping the math routines (say 60% of the code) to CUDA. They were doing this either on high-end individual servers used as shared workstations or on very small clusters of, say, 5 shockingly low-powered systems. Yes, you too could build a cluster for all that. The tech on Wall Street would blow you away!!

The Intel Phi product seems aimed at the whole code base. There’s always a but: it’s not plug and play. You would pick through the whole code base in an optimization project, looking for areas of code that would round-trip well into this thing: code with super-high thread counts or (I’m not sure about this) parts of the code that would benefit from the extra cores. The slide on possible utilization scenarios is telling.
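The round-trip pattern described here (ship a hot routine to the card, run it there, bring the results back) can be sketched with the standard OpenMP 4.0 `target` construct. This is an illustration under assumptions: Intel's compilers of this era also offered their own `offload` pragma for the Phi, and the hypothetical `scale_offload` below uses the later standard syntax instead. If no accelerator is configured, OpenMP runs the target region on the host, so the sketch still works on an ordinary machine.

```c
#include <stddef.h>

/* Offload only the hot loop: copy x to the device, run the loop there,
 * and copy y back. map(to:) is input only; map(from:) is output only,
 * so y's initial contents are not sent to the device. With no device
 * available (or OpenMP disabled), the same loop runs on the host. */
void scale_offload(size_t n, double a, const double *x, double *y)
{
    #pragma omp target map(to: x[0:n]) map(from: y[0:n])
    #pragma omp parallel for
    for (size_t i = 0; i < n; ++i)
        y[i] = a * x[i];
}
```

The data movement is explicit in the `map` clauses, which is why picking routines that do a lot of math per byte transferred matters when deciding what to round-trip.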

###

Are you one of those horrifically anal people who make lists of top-level stuff you want to do in the coming year? I have to admit that I do this. The only item I missed in 2012 was “learn CUDA.” Now it might be “learn to use the Phi AND learn CUDA!!” Here’s why:

I had a high-flying career, first as a consultant with McKinsey and Co., then with a computer industry giant in Palo Alto (hint). One day I was at my desk (I traveled 70%, so thank God I was home). I stood up to stretch and had a massive stroke at 40! Watch your cholesterol, boys. Anyway, I recovered completely thanks to a great medical center and a somewhat patient employer, but my high-flying career was over.

I’m on my own now, working from home. Half of my work is “quantish” support of fast-growing or fast-failing companies, negotiator support, and litigation work. The other half is whatever I want it to be. If income is down, I pick up some slack or troubleshoot development projects. Otherwise I code my own Dog Food. My “quant” work is still mostly done with spreadsheets, really pushing Excel to its limits, plus a paid license for SAS (the license fee was more than I pay annually for my 3’000 sq. ft. house!!). My other “quant” tool is @RISK.

2013 Resolution #n (make and eat your own Dog Food):

1a. The open source R language has saved me at least $60’000 (no more SAS!!). I want to pay back by contributing my time to clean up some of the primary code and speed it up a tad. I also want to take a portion of the code and branch it for use under CUDA.

1b. My guess is that you’ll see CUDA running next to Phi boxes real soon. Since I’m already into cleaning up code, learning to use a Phi box makes sense. This would fit well with some of R, and would be killer on my fave open source BI and ERP systems, as they are already very well written.

1c. I will never really get free of using Excel, but I sure can improve upon it. I built a really nice BI stack for my own use in 2012 using open source. The problem is that it lacks front-end analytics, or at least home-grown analytics.

So for 2013/4: At a high level, I need strong BI and ERP analytics (for paying customers) and risk apps that give me everything I get out of @Risk now (customers love their graphics, and I’ve got one attorney who actually understands the risk material I feed him… calling me at 3AM with questions). So I need some risk APIs or applets; my calculus is good, but not that good. I’ll probably rent @Risk’s code for now. I do some optimization work as well, and I know just where to find that code. All of that needs a nice interface and easy-to-use calls in Excel.

It’s a matter of stitching together mostly existing code with data interfaces to both the BI system and Excel. Not difficult, but challenging, and a perfect use for CUDA and the Phi, as I now understand it.

My at home hardware is embarrassing – once high end HP and IBM servers stitched together with some more modern Dell stuff and a very high end HP workstation. It’s on old fibre with an ancient HP switch. Sooo…

Currently I’ve got HP-UX and AIX, with some of those boxes running God only knows what Linux. Only my workstation is clean, with VMware Workstation. First thing: change everything to Solaris 10 or later, or FreeBSD, with ZFS. I really want to commit to ZFS.

I have to figure out if the Phi cards are useful and if they will run on Opteron systems. That may change the architecture if the cards are truly useful. Learning CUDA is a given; the Phi is up in the air:

2. Production: A new 4-way Opteron 6300 system, or something like it in the Intel world. My enhanced code will need to be recompiled anyway if I change chipsets. This would hold the entire BI system, including the usually small data sets, with most of the data in the cloud. For almost every app I have data on Amazon’s paid cloud. Each big server deserves its own 1U cloud server/front and back end. That’s an easy build, or I can buy one reconditioned.

Your opinion here: I like the idea of housing the compute system in the 4U form factor, but I’m unsure whether that is doable. This would be for existing work plus truly tested, ready-to-go code, and would eventually take a CUDA card and possibly a Phi card as well.

Dev/Test: Same as above but with dev tools. No need for really big data sets; I’d pick one, maybe 3-4GB, to work with the code. But this box still needs to run the BI solution, with data in the cloud, which is expensive for dev work. As for tools, I’m an old Emacs user, set up as an IDE for C/C++ and (drumroll) Smalltalk! For everything new I’m a born slave to UML, even state models for math, and everything already written I profile. I sometimes model what I’m going to write in Smalltalk. This provides a nice roadmap, and then I’m off to the races in C/C++. These tools are not small to my way of thinking, but they are only about 2GB. I think I could get away with a 3U machine. Again, I’d like to have the processor in the box with n-storage. Your thoughts?

3. Edgar/Crawler: Often a job requires econometric analysis, usually comparative. I’m also a Chartered Business and Municipal Valuator for negotiation and litigation, which is very lucrative work when it comes along, often because I’m the only calm guy in the room! I do scripted nightly downloads of selected data, all freely available but in many different places. Perfect use for an almost pure storage machine. Currently I scrub the data and move it to its own BI instance. Frankly, I need to change that process: a new BI system, better data handling, a columnar database, etc. I keep everything for three years. Anything older goes to the cloud, but it would be cheaper just backed up. This would be a 4U box, fully populated, about 18GB compressed, probably 24GB uncompressed. I don’t need a lot of processing, unless I want to turn this into a free-standing BI server. That would probably require a “head” system. Your thoughts?

Other than backup and network, that’s about it. I’ll get rid of this existing ancient fibre loop and replace it with (a) 10GbE if I can find used gear cheap, or (b) just plain 1GbE, with iSCSI in any case.

Sorry for the long post. It won’t happen again!

Thanks and cheers,

CodeToadPhD (econ)

[1] Wall Street quants: the boys who brought us the current recession using vastly updated (i.e., perverted) Black-Scholes math instead of blackjack, using debt vehicles as “assets”. And they gave Scholes and Merton the Nobel Prize just before the crash!! They should be shot.

worldskipper, if you-all need (a) some development help, or (b) a business-side quantitative guy, give me a shout! Like a good hooker, I’m very good and work cheap!!!

Greetings in any case,

CodeToadPhD