At HPE Discover 2025, we got a neat look at an OCP DC-MHS platform that we thought was worth sharing. The Data Center Modular Hardware System (DC-MHS) is designed to lower the cost of producing servers, largely by standardizing the I/O and board layouts. Let us take a quick look at the demo.

OCP DC-MHS Demo at HPE Discover 2025

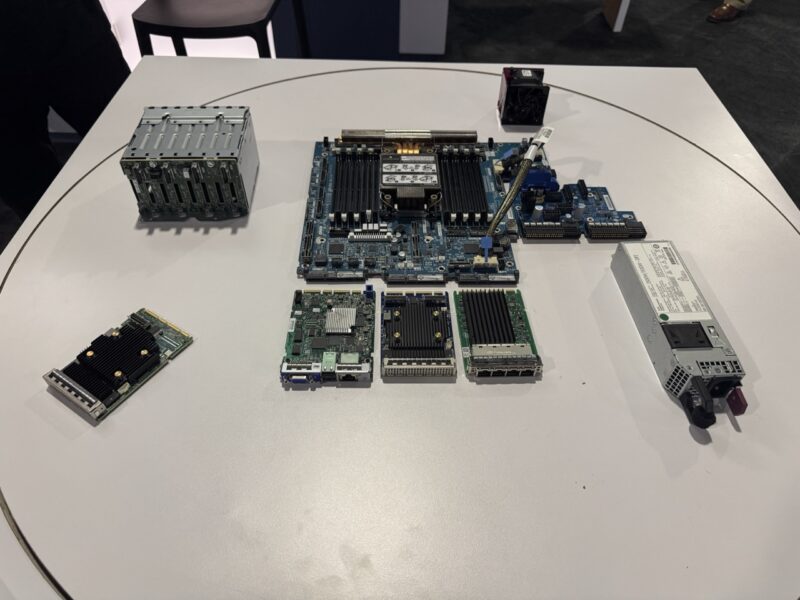

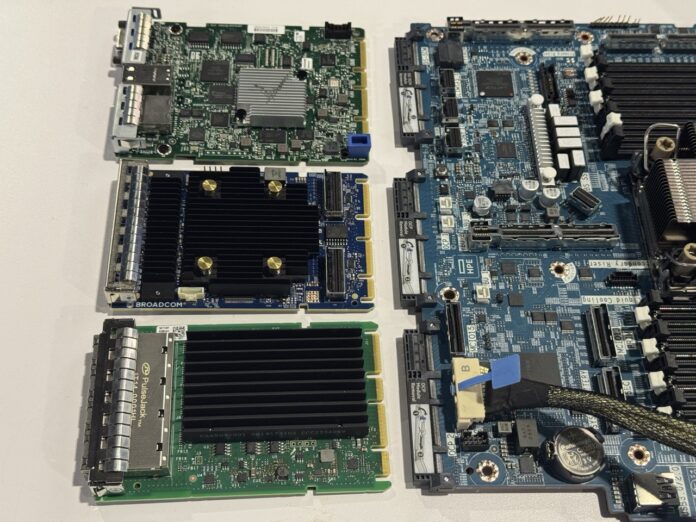

On the table, HPE had a number of different components centered around the Intel Xeon motherboard.

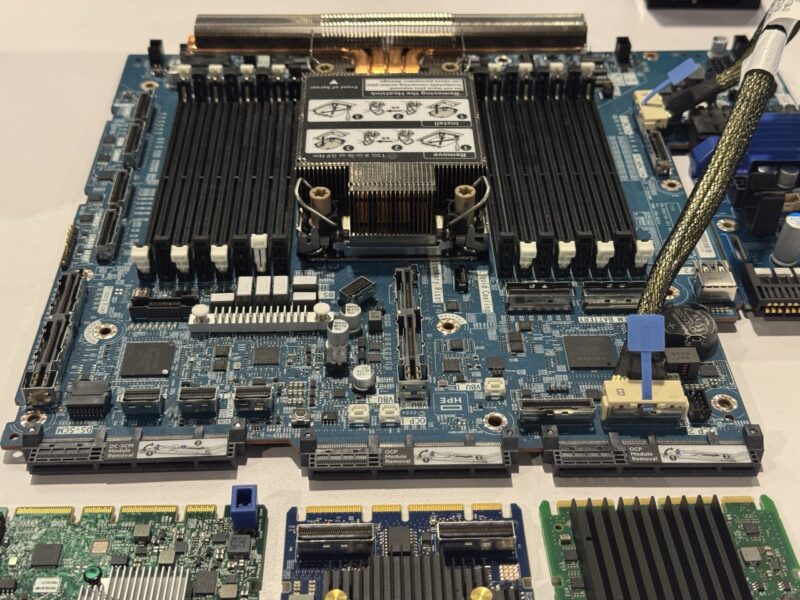

First, this is a single-socket Xeon platform. The main point to notice is that there are no traditional riser card slots other than the two x16 slots. Instead, almost everything is cabled to additional risers, front panel storage, or other locations.

HPE showed off a DC-SCM (Datacenter-ready Secure Control Module) that houses everything from the rear video and USB ports to the firmware images and the control processor. Then there are two OCP NIC 3.0 slots. This allows the configuration to be flexible, offering different service processors, multiple NICs at the rear, and so forth. The configuration in this demo is a little bit more exciting.

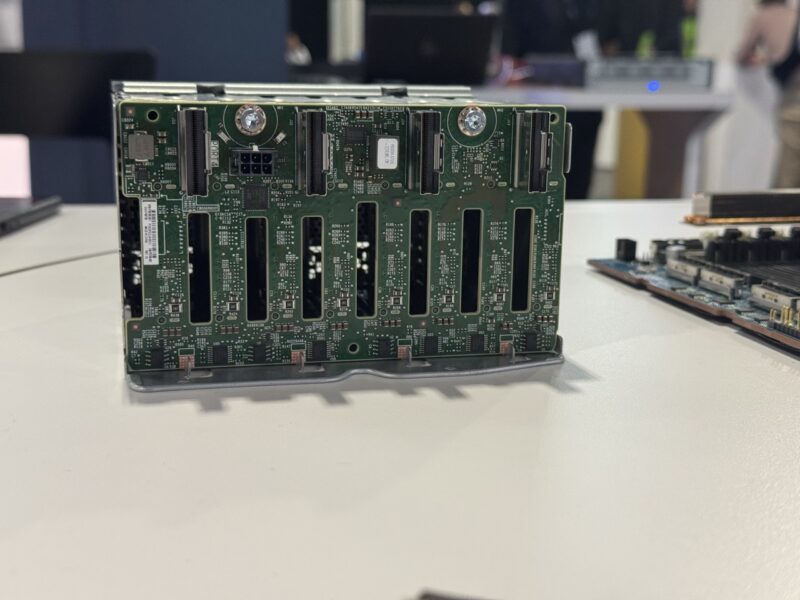

You will see that the middle OCP module does not have an external port. Instead, since the OCP NIC 3.0 form factor really defines the PCIe connector, PCB area, and so forth, it is being used here for a RAID controller/HBA with connectivity back to the system via MCIO headers. That can then be attached to a storage backplane, which gives this setup extra configurability.

Also, the power interfaces are standardized.

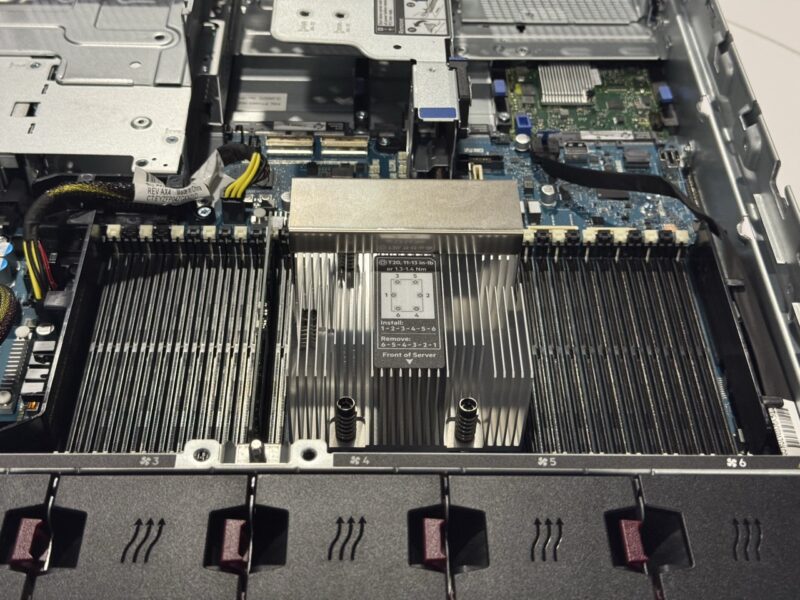

Here is another board with a similar design in a system, albeit on a different platform.

The goal of this demo was simply to show off how the components interact with each other, making the system configurable.

Final Words

Companies like HPE and Dell used to be highly proprietary in their designs. Now, both are adopting DC-MHS, which is helping to standardize server layouts in the industry. The modular design makes it much easier to adapt designs to different form factors, given how much of it is focused on standardizing the I/O, including slots, and using MCIO connectivity for a lot of the PCIe lanes. Using cabled connections means that this motherboard can handle both 1U servers and 2U servers just using different risers, as an example. It is an interesting parallel given what is happening with NVIDIA looking to standardize its platforms on the AI side with MGX. Servers are becoming more standardized across vendors, but are also becoming more flexible.

Is it really going to change much though? Even if everything is a standard, there is no chance that you will be able to mix and match parts between vendors (e.g. even if the PSU pinout is standard, you’re not going to be able to use Dell PSUs in an HPE server, as you can be sure the firmware on the DC-SCM will block that).

Everything is still going to be locked behind proprietary firmware, even if it’s standard off-the-shelf ASPEED BMCs or Broadcom MegaRAID parts.

“Using cabled connections means that this motherboard can handle both 1U servers and 2U servers just using different risers as an example.” Uh guys, 1U and 2U servers have had shared boards for over 10 years: R720/R620, R730/R630, Intel reference platforms for the same generations, and more. Those are just the ones I’ve owned. All they did was add an extra riser slot that got left unused on the 1U.

@Armageus To an extent that’ll be true. To your point, the big players like HPE, Dell, etc. certainly will firmware lock things where they can to keep users in line with support contracts. However, the smaller players like Asus, Gigabyte, ASRock Rack, etc. can leverage these standards and be less particular about firmware lock-ins. These smaller players can leverage some of the same scale benefits despite their size. For example, using MCIO as the cable riser standard permits HPE and Asus to use the same riser board but with different base motherboards. Delta could make the same power supplies for Dell and ASRock Rack with the only difference being firmware. Dell of course won’t charge less, but ASRock Rack has an incentive to pass the volume production savings on to customers in an effort to gain market share against Dell.

There are other benefits in emergencies for shops that run multiple platforms: if Asus rack gear doesn’t care about PSU firmware, just stock Dell-branded PSUs that’ll also work in the Dell systems you run, so you don’t have to keep two models of spares on site.

The real ultimate beneficiaries of these standards are the hyperscalers who contract the ‘smaller’ players for hardware manufacturing of their own designs. Google, Meta, and Microsoft all spin their own hardware designs and let a company like Supermicro, Asus, or Gigabyte manufacture them. The hyperscalers are not going to paint themselves into a corner by locking themselves in to a single vendor.

For I/O adaptability & repairability this is super great. I have a standard ATX board from SMC that has dead NICs, and thankfully I have open PCIe slots for a network card, so it’s not a horrible situation. If this was a prod server & not a homelab, I would likely have to RMA the whole system or have a tech come out for a mobo replacement, resulting in at least a full day of downtime. With this new standard I get a replacement module & the repair timeline is not much more than a cold power cycle. Super cool, thanks for the overview piece.