This is a huge deal. NVIDIA and OpenAI announced an agreement today under which NVIDIA will invest in OpenAI as OpenAI deploys a large amount of NVIDIA compute. The scale of the numbers is staggering, which makes this very exciting.

NVIDIA to Invest $100B in OpenAI and OpenAI to Deploy 10GW of NVIDIA

There has been some speculation that OpenAI would be moving to non-NVIDIA solutions going forward. With this latest deal, NVIDIA is cementing its place as a next-gen AI hardware provider at OpenAI, even if OpenAI also uses other types of compute.

Here is an excerpt from the announcement:

To support this deployment, including data center and power capacity, NVIDIA intends to invest up to $100 billion in OpenAI as the new NVIDIA systems are deployed. The first phase is targeted to come online in the second half of 2026 using the NVIDIA Vera Rubin platform. (Source: NVIDIA)

This seems to be a deal targeting infrastructure being deployed a year from now. It is also a commitment to getting Vera, NVIDIA’s next-generation successor to its Grace Arm CPU, along with Rubin, the successor GPU IP to Blackwell, into volume manufacturing in 2026. Vera is quite interesting as NVIDIA is adopting a custom Arm core instead of an off-the-shelf Arm Neoverse core. It also has SMT, joining AMD and Intel (although Intel is about to skip a generation).

The other key component is that it is not $100B invested today. Instead, it is up to $100B invested as the new systems are deployed. This is not traditional vendor financing. Instead, it seems like NVIDIA is making an equity investment as OpenAI spends money on NVIDIA systems and other infrastructure.

Another line stood out:

OpenAI will work with NVIDIA as a preferred strategic compute and networking partner for its AI factory growth plans. (Source: NVIDIA)

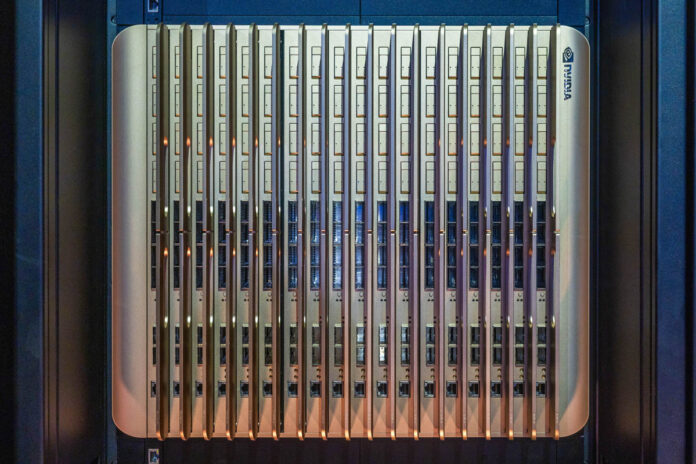

That means that OpenAI will not just look to NVIDIA for its compute, but also for its networking capabilities. NVIDIA’s networking business is a powerhouse as it owns the AI server NIC market. Folks often do not realize this, but for every GPU, there is often $1,000-$3,000 of NIC and connectivity content in an AI server. A new NVIDIA SN5610 switch just arrived at STH, and that is a $65,000 64-port 800GbE switch, without optics. Given that many of those ports are used for uplinks, and that the 800GbE ports can be split into dual 400GbE ports, that is often another $1,000-$3,000 per GPU in just the first level of switching above the AI server (topology plays a big role in that figure).
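As a rough sanity check on that per-GPU switching figure, here is a minimal sketch of the arithmetic. The function name and parameters are hypothetical, and the inputs are assumptions rather than a real cluster design: half of the switch ports are reserved for uplinks, each GPU gets one 400GbE or one 800GbE downlink, and optics and cables are excluded.

```python
def switch_cost_per_gpu(switch_price_usd, ports_800g, uplink_fraction, gbps_per_gpu):
    """Rough first-tier switch cost attributed to each GPU (illustrative only)."""
    # Ports left for servers after reserving a share for uplinks (assumption).
    downlink_ports_800g = ports_800g * (1 - uplink_fraction)
    # Total downlink bandwidth; an 800GbE port can be split into two 400GbE links.
    downlink_gbps = downlink_ports_800g * 800
    # Number of GPUs one switch can serve at the assumed per-GPU link speed.
    gpus_per_switch = downlink_gbps / gbps_per_gpu
    return switch_price_usd / gpus_per_switch


# $65,000 switch, 64 x 800GbE ports, half of them assumed to be uplinks.
per_gpu_400g = switch_cost_per_gpu(65_000, 64, 0.5, 400)  # one 400GbE link per GPU
per_gpu_800g = switch_cost_per_gpu(65_000, 64, 0.5, 800)  # one 800GbE link per GPU
print(f"400GbE per GPU: ${per_gpu_400g:,.0f}")
print(f"800GbE per GPU: ${per_gpu_800g:,.0f}")
```

Under these assumptions, the switch alone works out to roughly $1,000 to $2,000 per GPU before optics. A different uplink ratio or topology would shift the figure.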

Keen STH Substack readers will also note the companies are using “AI factory” here, something that we covered in detail a few weeks ago.

Final Words

There is a fairly interesting trend where the big hardware providers are investing to get AI clusters built. The infrastructure investments are huge, so the capital needs to come from somewhere, and that often seems to be equity investments. What is clear, however, is that OpenAI is building big infrastructure, and NVIDIA is going to be selling billions of dollars of hardware to OpenAI in the future. 10GW is a substantial figure, as we are in an era where the large AI shops are working towards building 1GW facilities, and a year ago tens of MW was considered very big.

Anyone else a trifle jumpy about the amount of correlated risk involved here?

It will be a bad day for Nvidia if the music stops even if they were selling all their product for cash, given the sudden downturn in sales; but here they are effectively selling product in exchange for IOUs whose value will crater under the exact same conditions that would cause their own product’s value and sales volume to suffer. Really doubling down.

Either someone can write better prompts than I or their standards are lower. In either case, it would be nice if one of those giant AI training clusters could be used on the weekends to run some traditional long-range weather forecasts at higher resolution with larger ensembles.