NVIDIA Tesla T4 Power Tests

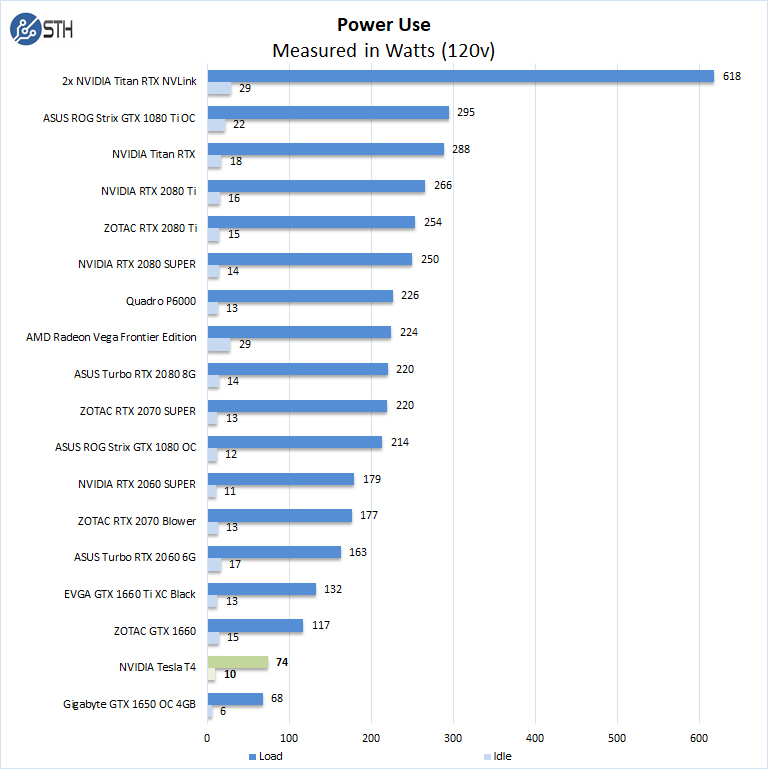

For our power testing, we used AIDA64 to stress the NVIDIA Tesla T4, then HWiNFO to monitor power use and temperatures.

After the stress test has ramped up the NVIDIA Tesla T4, we see it tops out at 74 watts under full load and 36 watts at idle. That low power consumption along with the compact form factor is what makes these cards popular in many applications.
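For readers who want to log similar numbers without HWiNFO, here is a minimal sketch that polls `nvidia-smi` instead. This is not the tooling used for the figures above. The helper names `parse_readings` and `sample_gpus` are hypothetical, and the sample line at the end simply reuses this review's full-load figures to show the expected shape of the output.

```python
import subprocess

# nvidia-smi query that prints one CSV line per GPU: index, power draw (W), temperature (C)
QUERY = [
    "nvidia-smi",
    "--query-gpu=index,power.draw,temperature.gpu",
    "--format=csv,noheader,nounits",
]


def parse_readings(csv_text):
    """Turn nvidia-smi CSV output into a list of per-GPU readings."""
    readings = []
    for line in csv_text.strip().splitlines():
        index, power_w, temp_c = (field.strip() for field in line.split(","))
        readings.append(
            {"gpu": int(index), "power_w": float(power_w), "temp_c": int(temp_c)}
        )
    return readings


def sample_gpus():
    """Run nvidia-smi once and return the parsed readings (requires an NVIDIA driver)."""
    result = subprocess.run(QUERY, capture_output=True, text=True, check=True)
    return parse_readings(result.stdout)


# Illustrative input only: a line shaped like nvidia-smi output,
# filled with the full-load figures reported in this review.
print(parse_readings("0, 74.00, 76"))
```

Calling `sample_gpus()` in a loop with a short sleep would give a simple power and temperature log. Boards that do not report power draw return a non-numeric field, which this sketch does not handle.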

Cooling Performance

A key reason that we started this series was to answer the cooling question. Blower-style coolers have different capabilities than some of the large dual and triple fan gaming cards. In the case of the NVIDIA Tesla T4, which is passively cooled, temperatures will vary depending on the server's cooling configuration.

Temperatures for the NVIDIA Tesla T4 reached 76C under full load; the highest temperatures we saw occurred while running OctaneRender benchmarks. Idle temperatures were reasonable for a passively cooled GPU at 36C.

Final Words

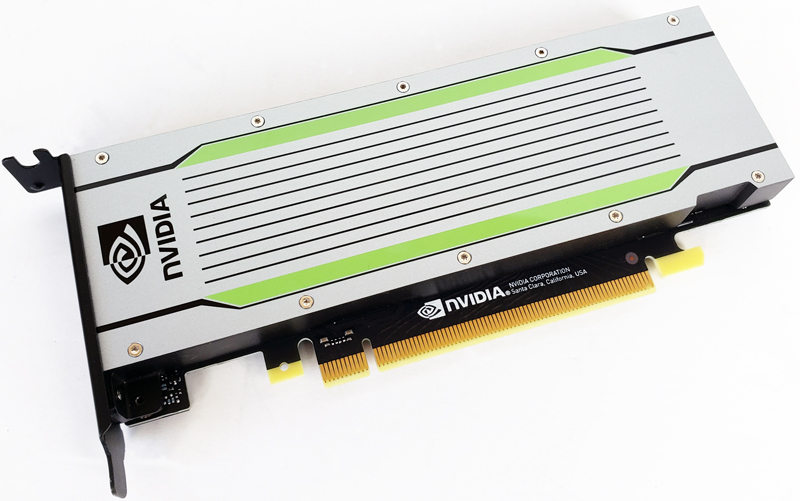

The NVIDIA Tesla T4 has become extremely popular with server vendors and customers. With its compact and easy-to-integrate form factor, the card can be deployed almost anywhere. We have seen competitors recognize the T4's killer product status and release products aimed squarely at it. One example is the recent Xilinx Alveo U50 FPGA card, which tries to match the T4's power and space requirements.

NVIDIA itself offers only a few alternatives in this space. There is the special single-slot, 150W NVIDIA Tesla V100 PCIe card with 16GB of memory that was designed for a certain hyper-scale customer and is fairly hard to find. The last-generation NVIDIA Tesla P4 is a tough value proposition next to the NVIDIA T4, as Turing was a major architectural leap for this application. There is simply not much single-slot inferencing accelerator competition.

GPU-accelerated servers vary a great deal, spanning 1U, 2U, and 4U systems, each targeting a different application role. The standard NVIDIA Tesla V100 PCIe card occupies two physical slots (one electrical) and uses 250 watts of power. It can be purchased with 16GB or 32GB of memory. The NVIDIA Tesla T4 takes a single slot and only uses 70 watts of power. One can easily install two Tesla T4s within the physical space and power budget of one Tesla V100.

NVIDIA has great tools, and its work on containers and Docker integration has been excellent. One can install multiple Tesla T4s in a system and use Docker's --gpus option (for example, --gpus '"device=0,1,2,3"') to target specific GPUs for a workload. This adds more flexibility for servers and containerized applications to pick specific resources. Also, with the Tesla T4, NVIDIA added INT4 for even faster inferencing. For virtual desktop uses, we found the Tesla T4 to be a capable GPU, as its excellent run in our OctaneRender benchmark showed.
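As a rough sketch of targeting specific GPUs from a script, the snippet below builds a `docker run` command line that exposes only selected cards to a container. It assumes Docker 19.03 or later with the NVIDIA Container Toolkit installed, where GPU selection is done through the `--gpus` option. The function name `docker_gpu_command` and the image name `example/cuda-image:latest` are placeholders, not anything from this review's test setup.

```python
import shlex


def docker_gpu_command(gpu_indices, image, workload):
    """Build a `docker run` argument list that exposes only the listed GPUs."""
    if not gpu_indices:
        raise ValueError("at least one GPU index is required")
    device_list = ",".join(str(i) for i in gpu_indices)
    # Docker parses the --gpus value as CSV, so a comma-separated device
    # list has to be wrapped in literal double quotes.
    gpus_value = f'"device={device_list}"'
    return ["docker", "run", "--rm", "--gpus", gpus_value, image, *workload]


# Hypothetical image name; substitute any CUDA-enabled image you actually use.
cmd = docker_gpu_command([0, 1], "example/cuda-image:latest", ["nvidia-smi"])

# Print a shell-safe version of the command for copy and paste.
print(shlex.join(cmd))
```

The list form can be passed directly to `subprocess.run`, which avoids shell quoting problems around the comma-separated device list.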

If one looks at a 2U server like the Dell EMC PowerEdge R740, one can install multiple GPUs. At some point, one would have to choose between a single NVIDIA Tesla V100 card and three Tesla T4 GPUs. Three Tesla T4s would use 210 watts of power, while the single Tesla V100 uses 250 watts. A single Tesla T4 delivers 130 TOPS of integer (INT8) performance while the Tesla V100-PCIe can do 224 TOPS, so three Tesla T4s achieve 390 TOPS in the same space. With lower power and cost along with more performance, three Tesla T4s are the better choice. That, alongside their deployment flexibility, is what is making the cards so popular.
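The comparison above can be worked through as a quick calculation. This is a minimal sketch that uses only the per-card power and INT8 figures quoted in this review; the `GpuOption` class is an illustrative helper, and the TOPS-per-watt ratio is derived here rather than measured.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class GpuOption:
    name: str
    cards: int
    watts_per_card: float      # board power quoted in the article
    int8_tops_per_card: float  # INT8 TOPS quoted in the article

    @property
    def total_watts(self) -> float:
        return self.cards * self.watts_per_card

    @property
    def total_tops(self) -> float:
        return self.cards * self.int8_tops_per_card

    @property
    def tops_per_watt(self) -> float:
        return self.total_tops / self.total_watts


t4_x3 = GpuOption("3x Tesla T4", cards=3, watts_per_card=70, int8_tops_per_card=130)
v100 = GpuOption("1x Tesla V100 PCIe", cards=1, watts_per_card=250, int8_tops_per_card=224)

for option in (t4_x3, v100):
    print(
        f"{option.name}: {option.total_watts:.0f} W, "
        f"{option.total_tops:.0f} TOPS, "
        f"{option.tops_per_watt:.2f} TOPS/W"
    )
```

On these figures, three Tesla T4s come out ahead on both total INT8 throughput and throughput per watt, which is the basis for the recommendation above.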
