NVIDIA Jetson AGX Thor Developer Kit T5000 Performance

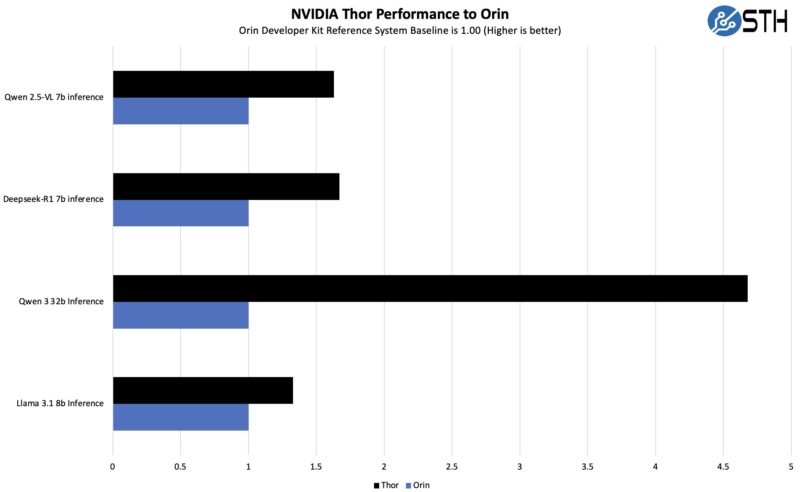

In terms of performance, we got this up and running literally hours before Patrick, Sam, and the studio team hit the road. Still, here is a quick look at Thor versus Orin, staying very close to NVIDIA’s reference materials:

We were fairly close to what NVIDIA told us we should expect on these, usually within 2%. For example, NVIDIA told us we should be at 150.8 tokens/second on Llama 3.1 8B, but we were closer to 149.1 tokens/second on average. That is about 1.1% lower, and there is some run-to-run variability here, so we considered that close enough to be test variation. We will probably get to run more of these later this week, but we were on limited time, and between trying to average 10 runs and doing warm-up runs, we just ran out of time.
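For anyone who wants to repeat this kind of check, here is a rough sketch of the arithmetic: discard warm-up runs, average the rest, and compare against a reference figure. The helper names `average_after_warmup` and `percent_deviation` are made up for illustration and are not part of any NVIDIA tooling. The only real numbers used are the two Llama 3.1 8B figures quoted above.

```python
from statistics import mean


def average_after_warmup(samples, warmup=2):
    """Drop the first `warmup` samples, then average the remaining runs.

    `samples` is a list of tokens/second readings in run order.
    The default of 2 warm-up runs is an assumption, not a documented setting.
    """
    measured = samples[warmup:]
    if not measured:
        raise ValueError("need at least one measured run after warm-up")
    return mean(measured)


def percent_deviation(measured, reference):
    """Signed difference of `measured` from `reference`, in percent."""
    return (measured - reference) / reference * 100.0


# Figures quoted in the text for Llama 3.1 8B (tokens/second).
reference_tps = 150.8  # what NVIDIA said to expect
measured_tps = 149.1   # our average

deviation = percent_deviation(measured_tps, reference_tps)
print(f"{deviation:+.2f}% versus reference")
```

With the figures above, the deviation works out to roughly -1.1%, which is inside the 2% band. Feeding a list of per-run readings through `average_after_warmup` first would give the averaged figure to compare.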

In terms of CPU performance, here is a Geekbench 5 run that Patrick did en route to Hot Chips 2025 in an Uber this morning after the embargo lifted:

That is not bad. It puts the multi-threaded performance in line with the AMD Ryzen AI 7 350 or a 10-core Apple Mac Mini M4. We do not expect this to have the fastest CPU since it is focused on GPU and accelerator compute.

Here is another Geekbench 6 run from the Uber ride post-embargo.

Again, a decent result.

NVIDIA Jetson Thor T5000 to Jetson Orin

Since we have looked at all of the NVIDIA Jetson Orin Developer Kits, we thought we would take a shot at doing a quick comparison.

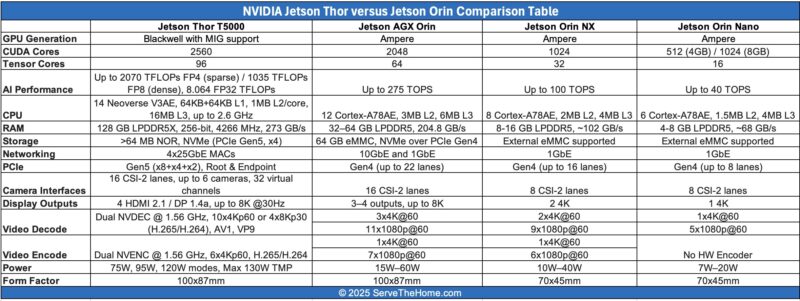

Here is our best shot at comparing the Jetson Thor T5000 to Jetson Orin parts:

That is only a best effort, but it should give some sense of just how massive the changes are. It also omits things like the NVDLA removal in the Thor series as transformers became the dominant compute. NVIDIA also changes many of the specs it reports, such as AI performance, from one generation to the next (across product lines, not just with Jetson), which makes this kind of comparison harder.

Final Words

The NVIDIA Blackwell architecture is everywhere at this point, much like Ampere was. It makes sense that NVIDIA pivoted its AI support to Blackwell’s GPU compute over the NVDLA. Getting more CPU and memory resources also helps add even more capacity, but at a price, as we are now operating well over 100W at peak. If you are building a robotics application where battery life matters, then that power consumption is going to be important to watch.

On the other hand, with more camera processing power, more compute, more memory, and so forth, the NVIDIA Jetson AGX Thor developer kit is going to sell like hotcakes. If you are building high-end next-generation robotics, this is the platform you want to do it on. At $3499 it is not cheap, but it is also going to be a necessary tool for an entire industry just as NVIDIA Ampere compute in the data center has given way to Blackwell compute.

Can it run larger LLMs (70B+ params) and if yes – how fast?

Yes – I think the number we are not publishing for Llama 3.3 70B is in the low 10s of tokens/second. We did not get to run this through a standard process to average out runs.

I’d love to see this compared to DGX Spark. On paper, this should have more GPU compute at around the same price.

Agreed jtl. I thought we were going to do GB10 earlier this month. We will get that to you when we can.

@jtl The DGX Spark seems to go for about double the Thor’s price. The only offering I found was >$6000. $5800 on Alibaba.

What is the fan noise like? That huge cooling system looks like it might be pretty quiet. More of a pleasant swishing sound like Rosie from the Jetsons rather than the roar of my Dyson?

People, this is a robotics supercomputer, not an Ollama computer. This is meant for robotics. If you’re interested in LLM inference instead of VLAs or robotics, check out the DGX Spark; they use the same architecture. But the AGX Thor is a bit slower at running LLMs since it’s not designed for it. On the other hand, it has 2x the FP4 AI performance of the DGX Spark due to its integrated NVIDIA Deep Learning Accelerators, so AI models and systems for robots will be way faster on this.