This is an exciting one at Hot Chips 2025. We get Gilad’s talk about co-packaged silicon photonics switches for gigawatt-scale AI factories. As a fun one, I got my first Hot Chips on-stage shout-out during this session.

Please excuse typos; this is being done live.

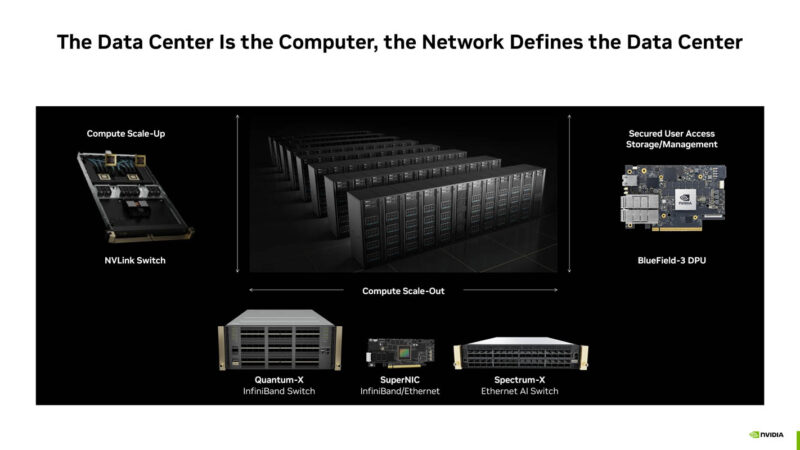

NVIDIA sees the data center as the computer, not the individual GPU.

The BlueField-3 DPU is designed as the NIC for the access network.

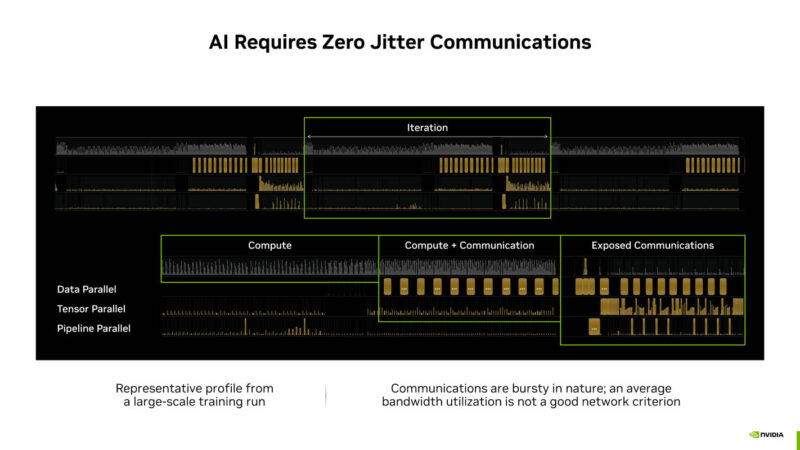

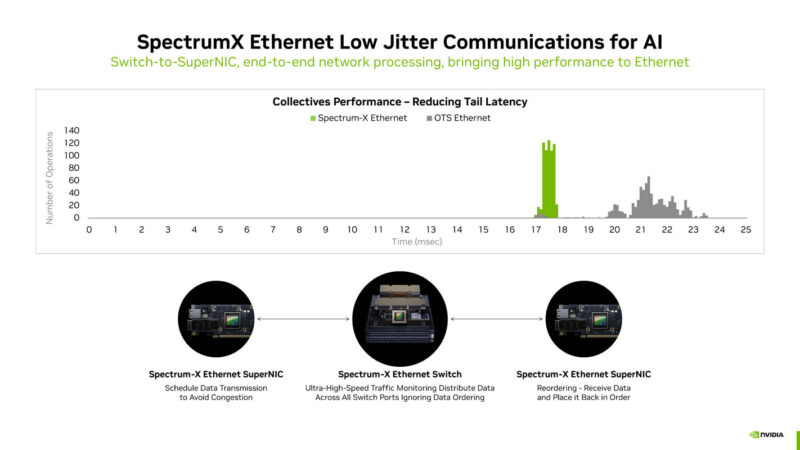

AI workloads require zero-jitter communication because they are large, complex, and span a lot of distance.

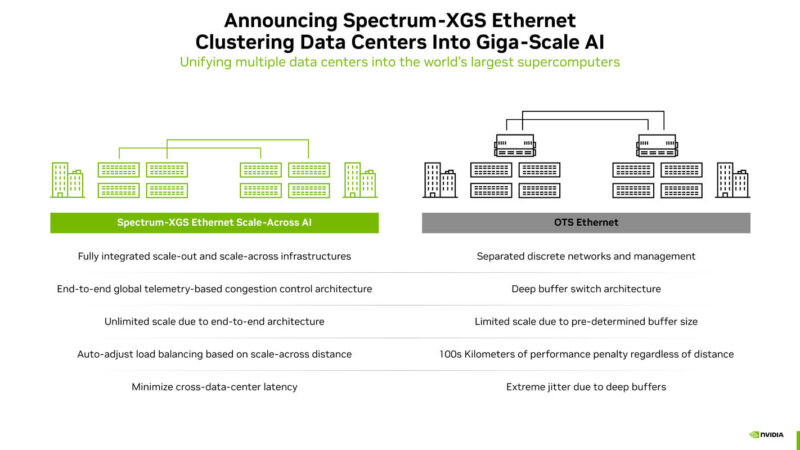

There are different Ethernet architectures. While they are all Ethernet, they have different requirements and goals.

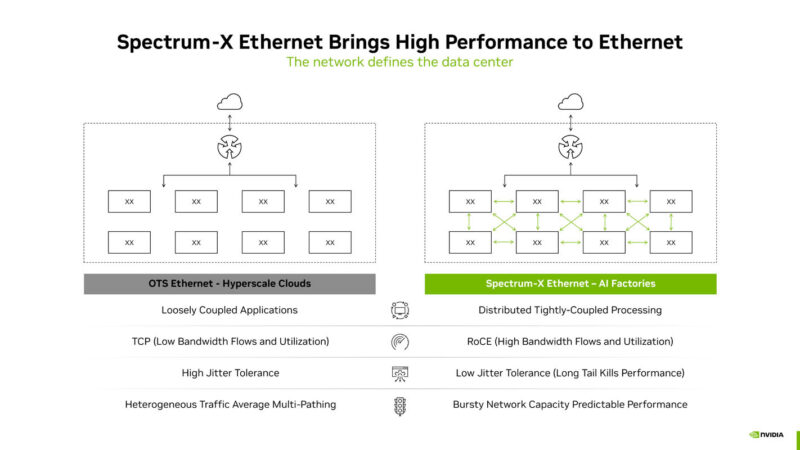

NVIDIA Spectrum-X Ethernet is designed to allow large GPU clusters to use Ethernet.

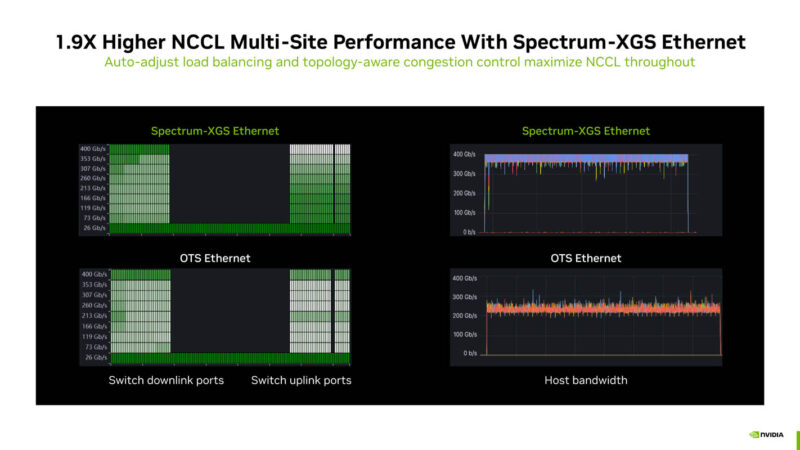

Spectrum-X is designed to bring low-jitter communication to AI workloads. Jitter in AI networks leaves large numbers of GPUs sitting idle, which is inefficient and expensive. NVIDIA is designing this end-to-end so that the functionality is not just in the switch.
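To see why jitter is so costly at scale, here is a minimal sketch, not anything NVIDIA showed. It assumes a synchronous step where every GPU waits for the slowest message to arrive, and it models jitter as a uniform random delay on top of a fixed base latency. The function names, the uniform model, and the numbers are all illustrative assumptions.

```python
import random

def step_time_us(num_gpus, base_us, jitter_us, rng):
    """One synchronous step: it finishes only when the slowest GPU's data arrives."""
    # Assumed model: each GPU sees the base latency plus a uniform random delay.
    arrivals = [base_us + rng.uniform(0.0, jitter_us) for _ in range(num_gpus)]
    return max(arrivals)

def mean_overhead_fraction(num_gpus, base_us, jitter_us, steps=200, seed=0):
    """Average share of each step spent beyond the jitter-free baseline."""
    rng = random.Random(seed)
    total = 0.0
    for _ in range(steps):
        total += step_time_us(num_gpus, base_us, jitter_us, rng)
    mean_step = total / steps
    return (mean_step - base_us) / mean_step

if __name__ == "__main__":
    for gpus in (1, 8, 1024):
        frac = mean_overhead_fraction(gpus, base_us=100.0, jitter_us=50.0)
        print(f"{gpus:5d} GPUs: {frac:.1%} of each step is jitter overhead")
```

In this toy model, the more GPUs that take part in a step, the more likely at least one of them hits the worst-case delay, so the whole cluster ends up paying close to the maximum jitter on every step.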

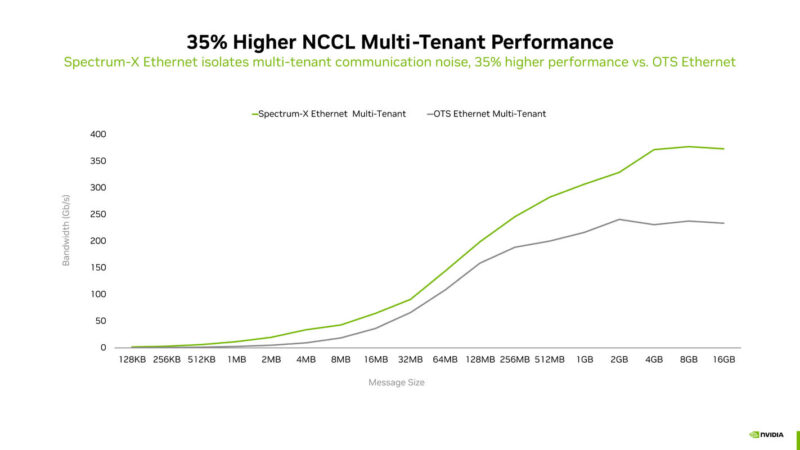

Spectrum-X offers higher NCCL performance. NVIDIA wanted to ensure that if multiple jobs are running on a large infrastructure, they do not interfere with one another. For example, if one job is running on a switch and other jobs are then placed on the same switch, you do not want them to hurt each other's performance.

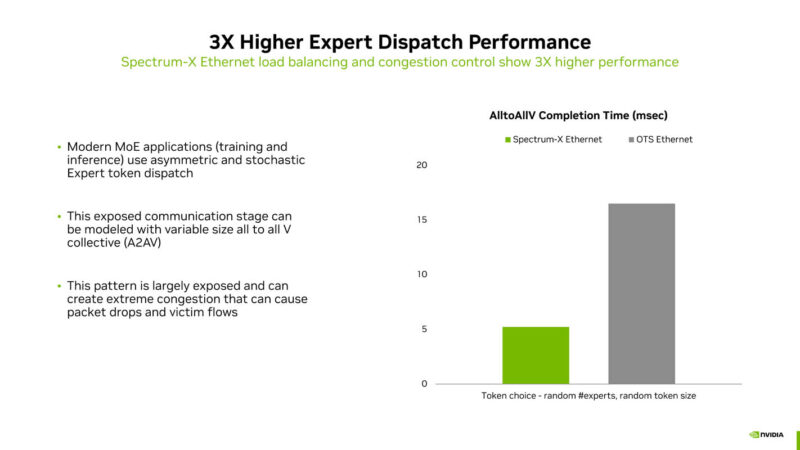

This one is new for this year. It shows that Spectrum-X has better dispatch performance for mixture-of-experts models than standard Ethernet.

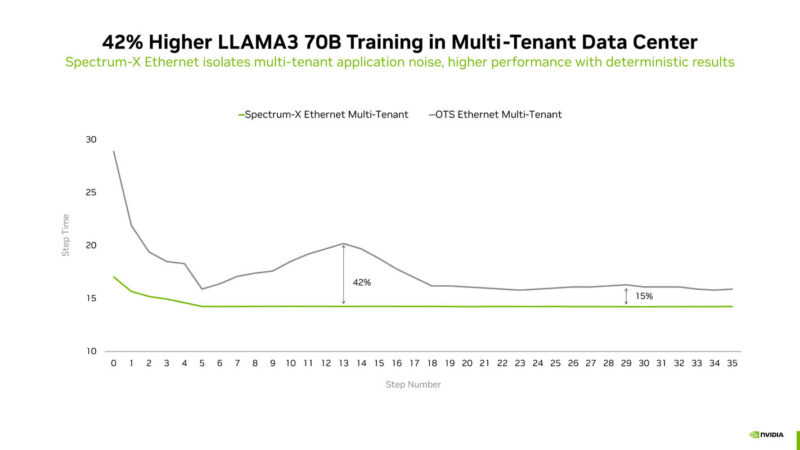

Here is the impact of Spectrum-X on the multi-tenant data center.

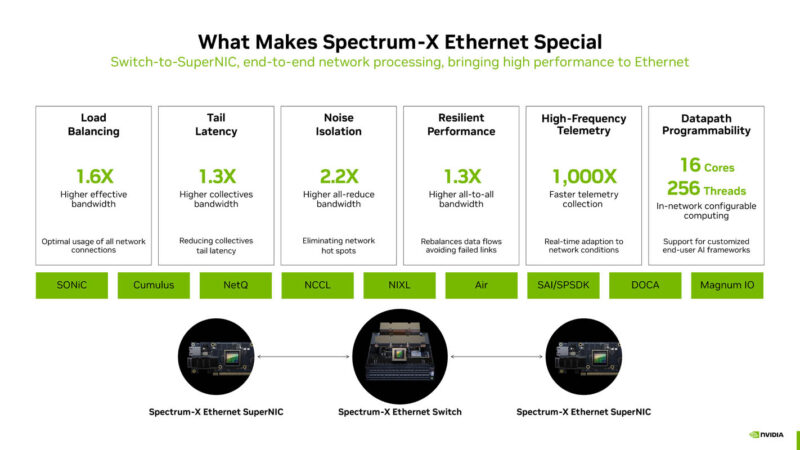

Here is the summary slide on what makes Spectrum-X Ethernet different from off-the-shelf (Broadcom) Ethernet.

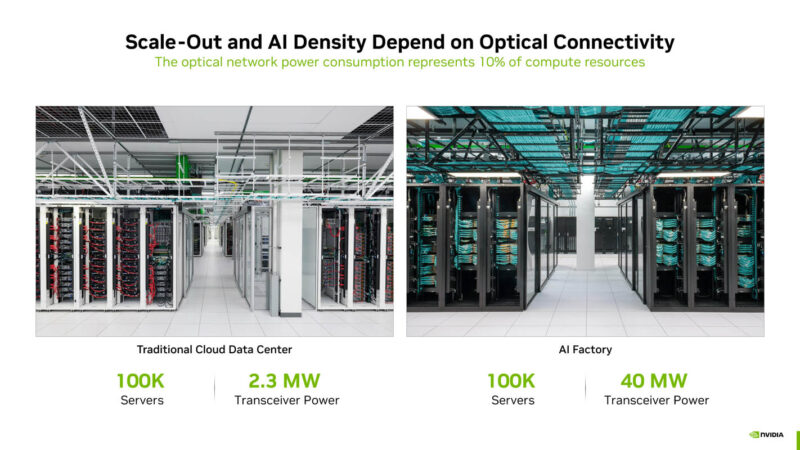

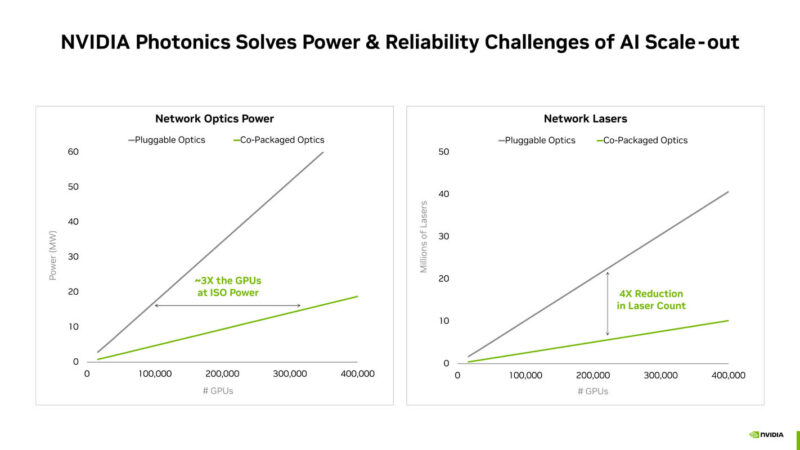

Scaling out also becomes a challenge because the optical networking elements can take a lot of power.

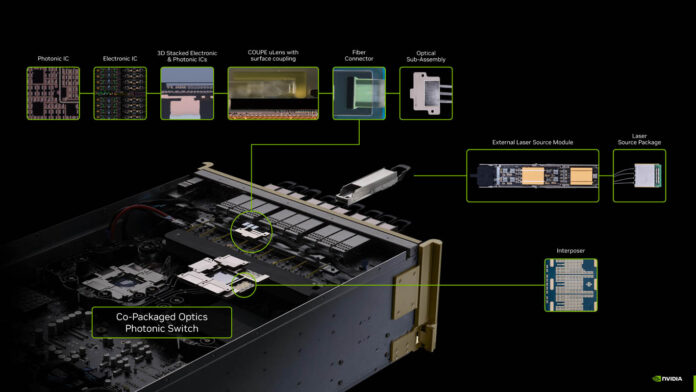

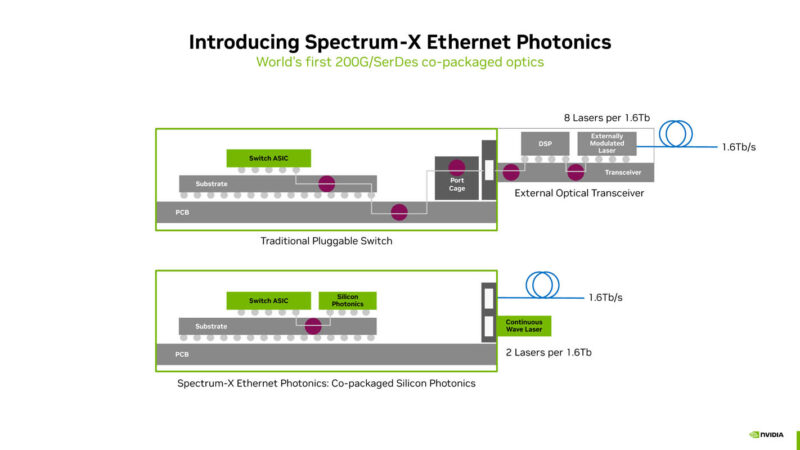

This is the next generation, Spectrum-X Ethernet Photonics. Instead of spending the power to reach a pluggable optical engine, the optics are co-packaged with the switch, which saves a lot of power.

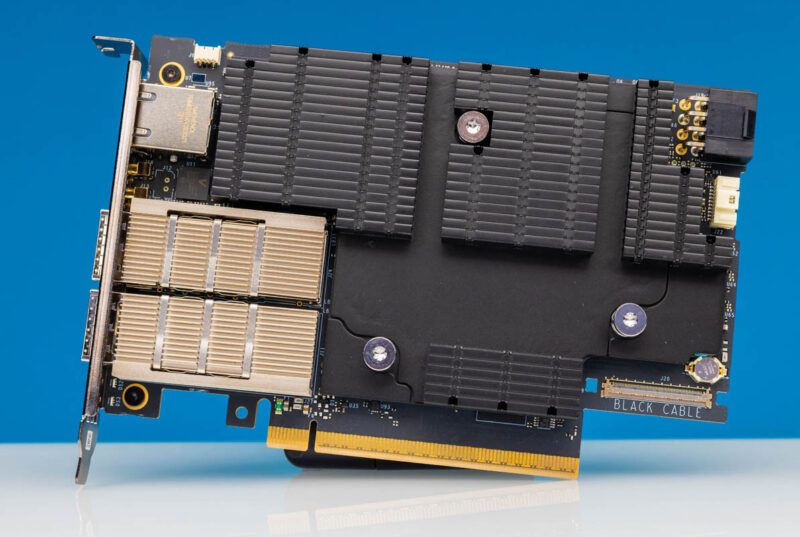

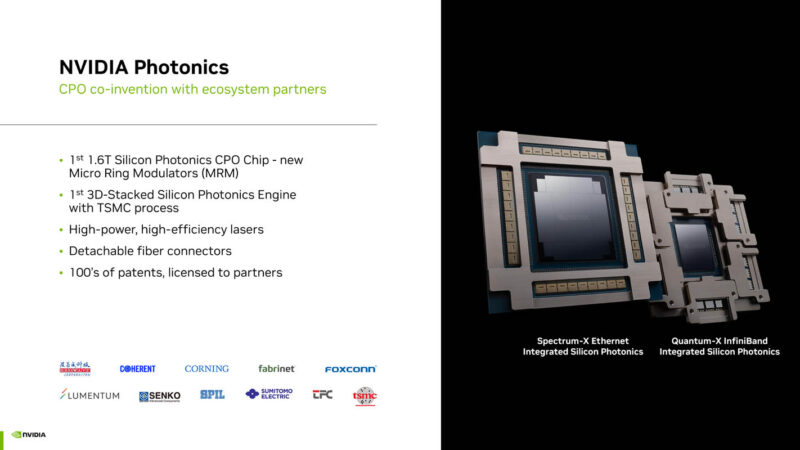

NVIDIA Photonics is a 1.6T CPO chip with new micro ring modulators. NVIDIA is also focused on detachable fiber connectors. Something you might see in the photos is that the Spectrum-X and Quantum-X have different connections for the CPO. This is due to the evolution of the solution.

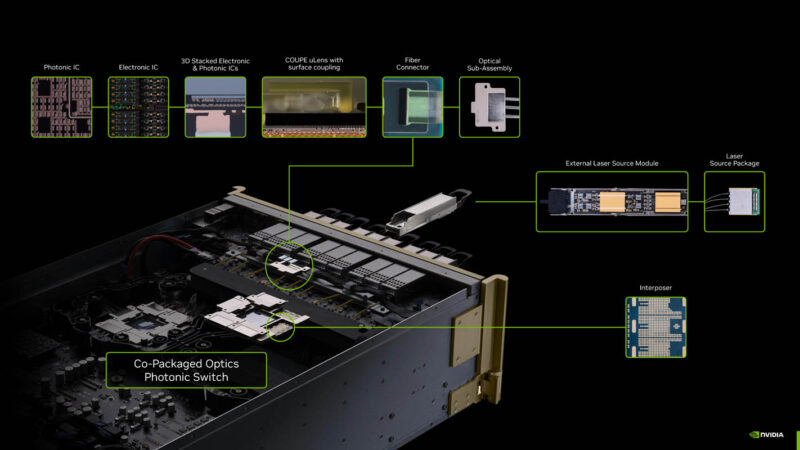

There are many components running to make this work. As a note, there is a pluggable laser in this design.

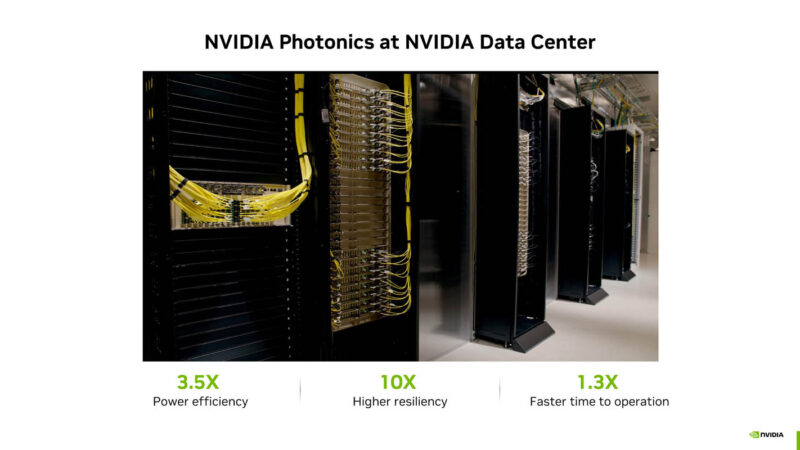

NVIDIA showed that it has this running on site.

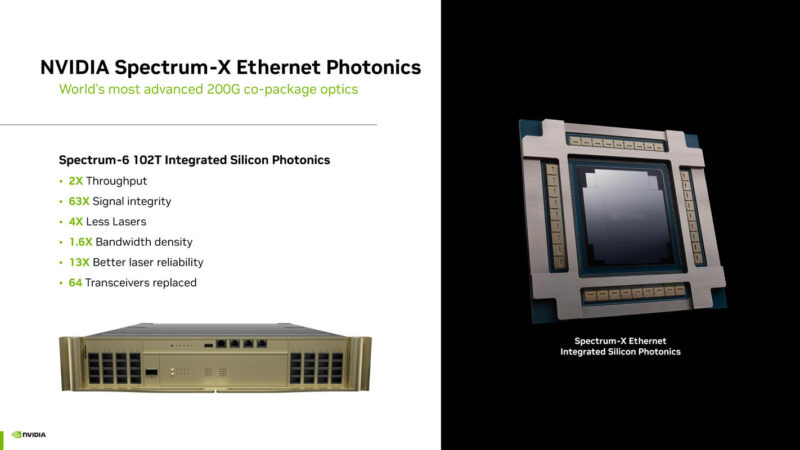

NVIDIA has the Spectrum-6, a 102T switch with integrated silicon photonics.

With this, the reliability goes up while power goes down.

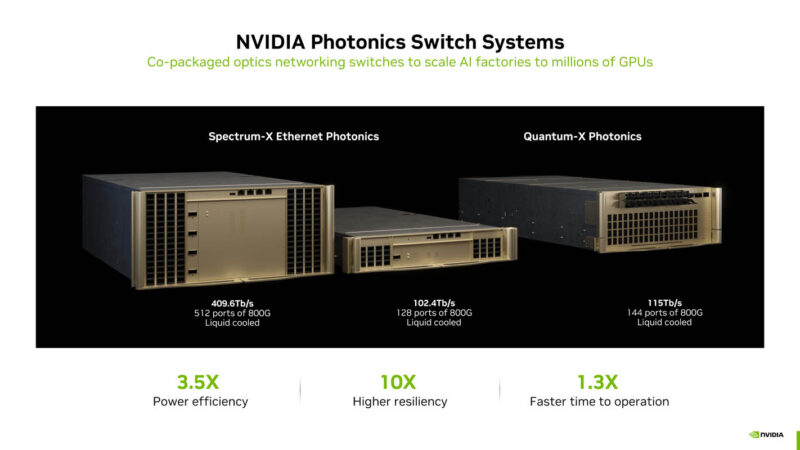

NVIDIA has both Quantum-X and Spectrum-X switches with CPO coming. As a note, I am going to try to look at these in a lot more detail in the future.

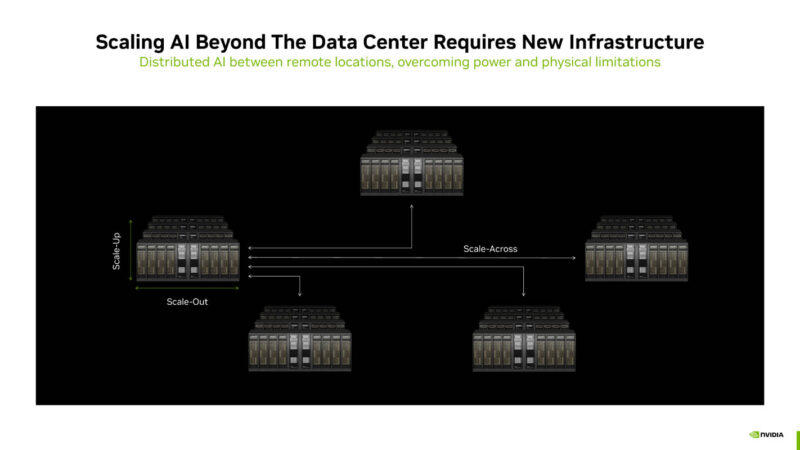

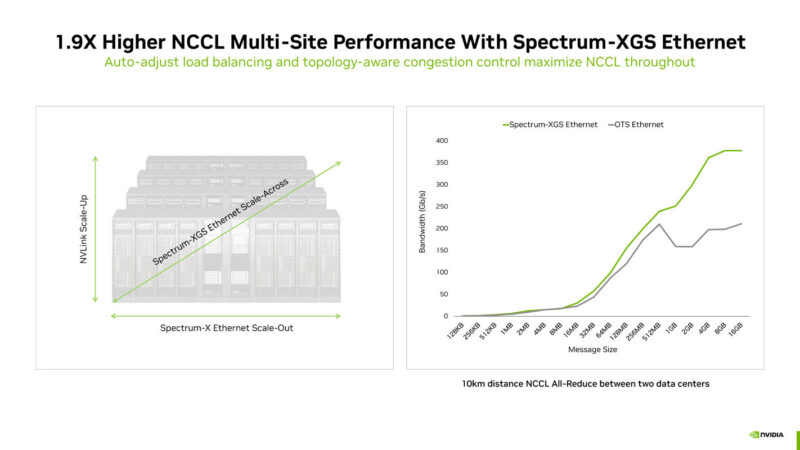

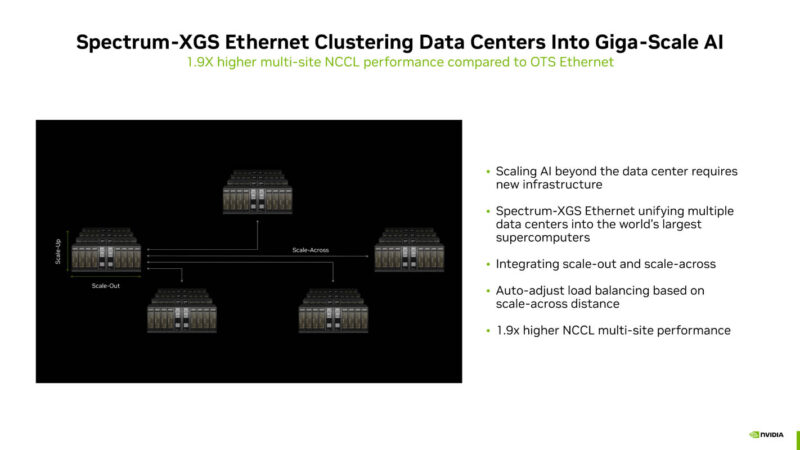

Scale-up, scale-out, and now scale-across. When scaling beyond a single data center site, you still need a high-quality network, and you also need a lot of speed.

Spectrum-XGS is the company’s approach to expanding the scale-out network to scale-across. That means not just hardware, but distance-aware algorithms.

NVIDIA says it can get 1.9x better scale out performance using this technology, and there is room to get better.

Here is a training job running on scale across.

The goal is to allow multi-site AI training so that training is not limited by the power and resources of a single site.

CoreWeave is likely going to be the first to deploy.

Final Words

Scale-across networking starts at around 500m. Beyond that point, the algorithms need to be adjusted to handle the distance. I cannot wait until these are products.
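For a rough sense of why distance matters, here is a back-of-the-envelope sketch of fiber propagation delay and how much data is in flight over one round trip. It is not from the talk. The refractive index of 1.47 is an assumed typical value for silica fiber, the 800 Gb/s link speed is an arbitrary example, and the function names are hypothetical.

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458
FIBER_REFRACTIVE_INDEX = 1.47  # assumption: typical value for silica fiber

def one_way_delay_us(distance_m):
    """Propagation delay through fiber in microseconds (switch and NIC latency ignored)."""
    return distance_m * FIBER_REFRACTIVE_INDEX / SPEED_OF_LIGHT_M_PER_S * 1e6

def bytes_in_flight(distance_m, link_gbps):
    """Bandwidth-delay product: bytes sent before a round-trip acknowledgement can return."""
    rtt_s = 2 * one_way_delay_us(distance_m) / 1e6
    return link_gbps * 1e9 / 8 * rtt_s

if __name__ == "__main__":
    for distance_m in (500, 10_000, 100_000):
        delay = one_way_delay_us(distance_m)
        inflight_kb = bytes_in_flight(distance_m, link_gbps=800) / 1e3
        print(f"{distance_m:>7} m: {delay:8.2f} us one way, {inflight_kb:10.1f} KB in flight")
```

Under these assumptions, 500m of fiber adds about 2.5 microseconds each way, and the delay and the amount of data in flight both grow linearly with distance. That is the kind of effect that congestion control and buffering have to account for once sites are kilometers apart.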