At SC25 we saw the MiTAC G4826Z5 and then the racks it goes into. What is notable about this one is that it is a liquid-cooled dual AMD EPYC Turin (EPYC 9005) server with eight AMD Instinct MI355X GPUs. Beyond the server itself, MiTAC also had more on how these go into rack and cluster configurations.

MiTAC G4826Z5 Liquid-Cooled AMD Instinct MI355X Server at SC25

The MiTAC G4826Z5 was interesting. It is a 4U chassis allowing for higher densities. The top 2U is dedicated to the eight MI355X GPUs. The bottom features the two AMD EPYC 9005 CPUs, SSDs, and management.

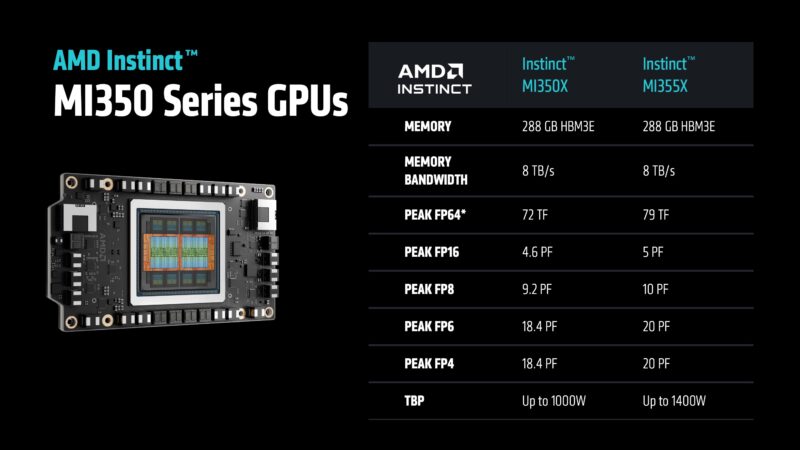

You can tell that the GPUs need far more coolant flow than the CPUs, since their tubes are much larger than the CPU tubes in the bottom part of the server. The liquid cooling here is important. AMD has both an MI350X and an MI355X in this generation, but the MI355X requires liquid cooling.

Although the MI350X and MI355X have the same 288GB of HBM3E memory, the MI355X has higher performance. That is because it has a 1400W TDP, 40% higher than the 1000W TDP of the air-cooled MI350X.

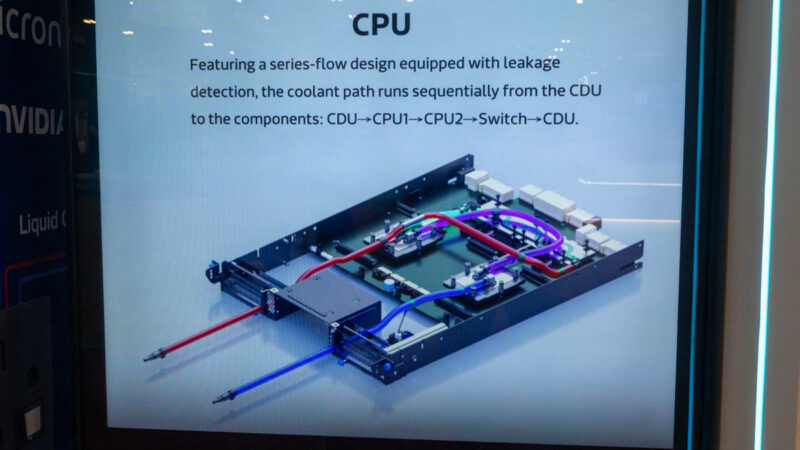

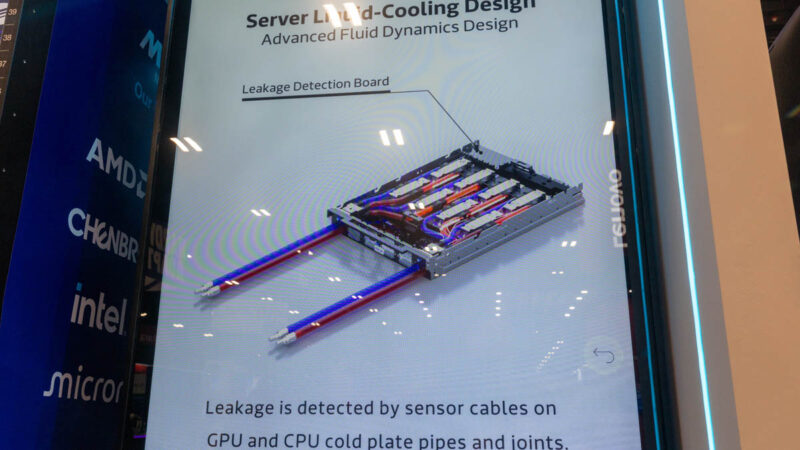

In the booth, MiTAC showed how the liquid flows on the CPU and GPU sides. In these racks, the required cooling dictates the tubing size and the sequence of components to be cooled. Rack designers need to ensure that there is proper pressure and flow from the rack manifold to the server components.
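To make the point about tubing size more concrete, here is a rough sizing sketch based on the standard sensible-heat relation Q = m_dot × c_p × ΔT. It is not MiTAC's method. The coolant properties (plain water), the 10 K inlet-to-outlet temperature rise, and the 500W CPU TDP are assumptions, and heat load is simply taken to equal TDP.

```python
# Sketch: coolant flow needed to remove a steady heat load, from Q = m_dot * c_p * dT.
# Assumptions (not MiTAC figures): plain water as coolant, a 10 K rise from
# inlet to outlet, and heat load equal to the parts' TDP.

WATER_CP_J_PER_KG_K = 4186.0  # specific heat of water, approximate
WATER_DENSITY_KG_PER_L = 1.0  # close enough near room temperature


def flow_lpm(heat_w: float, delta_t_k: float = 10.0) -> float:
    """Coolant flow in liters per minute needed to carry heat_w at a delta_t_k rise."""
    mass_flow_kg_s = heat_w / (WATER_CP_J_PER_KG_K * delta_t_k)
    return mass_flow_kg_s / WATER_DENSITY_KG_PER_L * 60.0


gpu_heat_w = 8 * 1400  # eight MI355X at their 1400W TDP
cpu_heat_w = 2 * 500   # two EPYC 9005 CPUs, assuming 500W SKUs

print(f"GPU side: {flow_lpm(gpu_heat_w):.1f} L/min")
print(f"CPU side: {flow_lpm(cpu_heat_w):.1f} L/min")
```

Under these assumptions the GPU side needs roughly 16 L/min versus about 1.4 L/min for the two CPUs, an 11.2× difference. Real loops often use a glycol mix with a lower specific heat than water, which pushes the required flow higher still.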

Another important aspect is that modern liquid-cooled servers include built-in leak detection. In AI clusters, if a leak is detected, then the node needs to be shut down almost instantly.
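The leak policy described above amounts to a trip with no grace period: the first wet reading shuts the node down. Here is a minimal, hypothetical sketch of that logic. `watch_for_leak`, the boolean sensor readings, and the `shutdown_node` callback are illustrative placeholders, not a MiTAC or BMC API.

```python
from typing import Callable, Iterable, Optional


def watch_for_leak(
    readings: Iterable[bool],
    shutdown_node: Callable[[], None],
) -> Optional[int]:
    """Trip on the first wet reading and return the index of that sample.

    `readings` stands in for a hypothetical leak-sensor poll (True = wet).
    There is deliberately no debouncing: any wet reading triggers an
    immediate shutdown. Returns None if no leak is seen.
    """
    for i, wet in enumerate(readings):
        if wet:
            shutdown_node()  # placeholder for cutting node power, e.g. via the BMC
            return i
    return None


if __name__ == "__main__":
    events = []
    tripped_at = watch_for_leak([False, False, True, False], lambda: events.append("shutdown"))
    print(tripped_at, events)  # 2 ['shutdown']
```

Skipping debouncing trades occasional false trips for the fastest possible response; a real deployment would also need to alert operators and isolate the rack manifold, which this sketch does not model.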

That is all focused on the node, but the more interesting part was how this translated to a rack-scale solution.

MiTAC SC25 AI Liquid-Cooled Racks

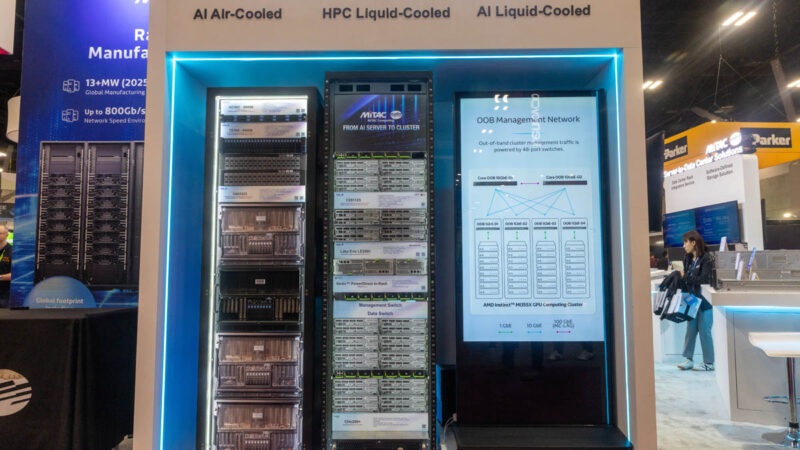

MiTAC had its AI Air-Cooled, HPC Liquid-Cooled, and AI Liquid-Cooled racks at SC25. The AI Liquid-Cooled rack is the one that the MiTAC G4826Z5 server goes into.

Since each MI355X GPU has 288GB of HBM3E memory and the rack has eight servers with eight GPUs each, the rack holds 64 GPUs and about 18.4TB of HBM3E memory.

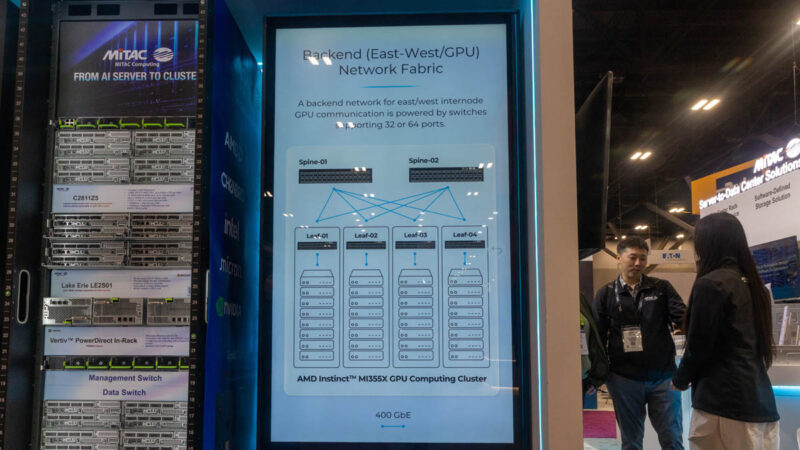

Beyond the compute, each GPU also has a rear NIC for the compute fabric.

MiTAC showed what that fabric looks like beyond just one rack.

They also showed the liquid-cooling flows at a rack level.

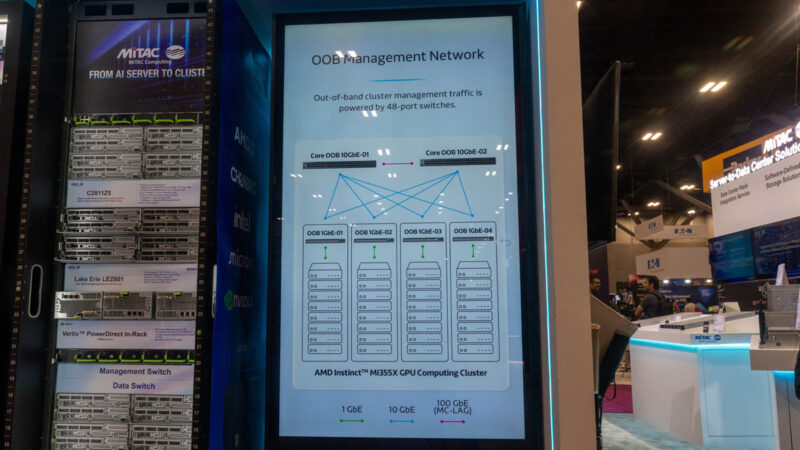

This might seem like a small detail, but while the high-speed networks in modern AI racks get most of the attention, these clusters still have out-of-band management networks, so it was neat to see that called out.

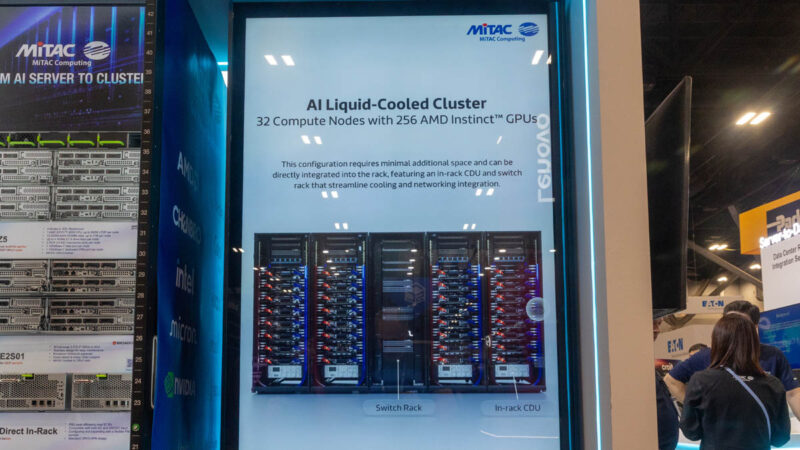

There was then a 256-GPU cluster built from 32 MiTAC G4826Z5 servers.

The 512-GPU version not only doubles the number of compute nodes but also integrates an in-row CDU.

If you were wondering, that is roughly 147.5TB of HBM3E memory.
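The capacity figures in this section follow from simple multiplication. This sketch assumes 288GB per MI355X, eight GPUs per G4826Z5 server, and decimal terabytes (1TB = 1000GB).

```python
HBM_PER_GPU_GB = 288  # MI355X HBM3E capacity
GPUS_PER_SERVER = 8   # MiTAC G4826Z5


def cluster_summary(gpus: int) -> tuple[int, float]:
    """Return (server count, total HBM3E in decimal TB) for a GPU count."""
    servers = gpus // GPUS_PER_SERVER
    hbm_tb = gpus * HBM_PER_GPU_GB / 1000
    return servers, hbm_tb


for gpus in (64, 256, 512):  # one rack, the 256-GPU cluster, the 512-GPU cluster
    servers, hbm_tb = cluster_summary(gpus)
    print(f"{gpus:>3} GPUs = {servers:>2} servers, {hbm_tb:.1f} TB HBM3E")
```

That works out to 8 servers and 18.4TB for one rack, 32 servers and 73.7TB for the 256-GPU cluster, and 64 servers and 147.5TB for the 512-GPU cluster.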

Final Words

AI was a major theme at SC25 this year. MiTAC's booth was a bit different since it had not only the physical servers but also a look at larger cluster topologies. A few months ago, we published our MiTAC G8825Z5 AMD Instinct MI325X 8-GPU Server Review. What we saw at SC25 was a newer generation of that server with liquid cooling and next-generation Instinct GPUs. It is always nice to see future generations of servers that we review, and how they integrate into larger clusters.

Looking at the extra 400W of power consumption of the MI355X, I can't really fathom why it would be worthwhile in a server environment. They are basically overclocking the cards, making them use 28.2% more power per PF.

That is, based on the FP4/FP6 numbers, the MI350X gets 54.34W/PF and the MI355X roughly 70W/PF (69.65W/PF unrounded), which is 28.2% more than 54.34W/PF.
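Here is the arithmetic from that comment as a small Python sketch. The TDPs come from the article; the peak FP4/FP6 figures (18.4 PF for the MI350X, 20.1 PF for the MI355X) are assumptions, picked because they reproduce the comment's numbers: 1000/18.4 is about 54.35W/PF, and 1400/20.1 is about 69.65W/PF, the unrounded value behind the 28.2% figure (a flat 70W/PF would be closer to 28.8%).

```python
# Assumed spec figures: (TDP in W, peak FP4/FP6 PF).
# TDPs are from the article; the PF values are assumptions.
SPECS = {
    "MI350X": (1000, 18.4),
    "MI355X": (1400, 20.1),
}


def watts_per_pf(tdp_w: float, pf: float) -> float:
    """Board power per petaflop of peak FP4/FP6 throughput."""
    return tdp_w / pf


w350 = watts_per_pf(*SPECS["MI350X"])
w355 = watts_per_pf(*SPECS["MI355X"])
perf_gain = SPECS["MI355X"][1] / SPECS["MI350X"][1] - 1

print(f"MI350X: {w350:.2f} W/PF")
print(f"MI355X: {w355:.2f} W/PF")
print(f"Extra power per PF on MI355X: {100 * (w355 / w350 - 1):.1f}%")
print(f"Extra peak throughput on MI355X: {100 * perf_gain:.1f}%")
```

With these inputs, the roughly 9% throughput gain is also where the "9% more servers" figure in the next comment comes from.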

I wonder if the initial hardware cost of purchasing 9% more servers to offset the performance difference is that much higher than paying 28.2% more for electricity (and cooling, of course). And, I suppose, the MI355X cards would also cost more than the MI350X on a per-card basis.
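One way to frame that question is to size two fleets for the same peak throughput. This sketch uses assumed per-GPU figures (1000W and 18.4 PF for the MI350X, 1400W and 20.1 PF for the MI355X), rounds up to whole eight-GPU servers, and counts GPU TDP only. The 10,000 PF target is hypothetical.

```python
import math

GPUS_PER_SERVER = 8
# Assumed per-GPU figures: (TDP in W, peak FP4/FP6 PF).
MI350X = (1000, 18.4)
MI355X = (1400, 20.1)


def servers_for_target(target_pf: float, tdp_w: float, pf_per_gpu: float) -> tuple[int, float]:
    """Eight-GPU servers needed to reach target_pf, and their total GPU TDP in kW."""
    servers = math.ceil(target_pf / (GPUS_PER_SERVER * pf_per_gpu))
    gpu_kw = servers * GPUS_PER_SERVER * tdp_w / 1000
    return servers, gpu_kw


TARGET_PF = 10_000  # hypothetical cluster target
for name, spec in (("MI350X", MI350X), ("MI355X", MI355X)):
    servers, gpu_kw = servers_for_target(TARGET_PF, *spec)
    print(f"{name}: {servers} servers, {gpu_kw:.1f} kW of GPU TDP")
```

Under these assumptions the MI350X build needs 68 servers against 63 for the MI355X (about 8% more once rounded to whole servers), while the MI355X fleet draws about 30% more GPU power (705.6 kW vs 544 kW). Purchase prices are left out because none are given here.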

Or perhaps they will segregate the two platforms, making the MI350X the air-cooled version and the MI355X the water-cooled option, and claim that the underwhelming efficiency will be offset by the lower cost of cooling?

I just don't see the viability of the MI355X. It's not a desktop CPU that I would overclock at any cost just to get a few extra points in Cinebench. This is a setting where efficiency should matter a lot.

You're looking only at GPU-to-GPU power. Fewer GPUs mean fewer network cards, optics, and switches. These clusters aren't just power-limited at the GPU. If you've gotta add another layer of switching, then you've added cost and power. Liquid cooling at the system level will save 10% on power too. It's much closer than you think if you're looking at the cluster instead of just the GPU.

That’s all correct, but it’s only 9-10% overhead. And you can liquid cool either of the two cards for a similar amount of power savings.
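The back-and-forth above comes down to how much non-GPU power scales with GPU count. This sketch adds a flat, hypothetical per-GPU overhead (NIC, optics, a share of switch and host power) to two equal-throughput fleets: 68 MI350X servers versus 63 MI355X servers, as sized for a hypothetical 10,000 PF target with assumed peaks of 18.4 and 20.1 PF per GPU and assumed TDPs of 1000W and 1400W. A liquid-cooling saving applied as the same percentage to both clusters would scale both totals and leave the percentage gap unchanged.

```python
def cluster_kw(gpus: int, gpu_tdp_w: float, overhead_w_per_gpu: float) -> float:
    """Cluster power in kW: GPU TDP plus a flat per-GPU overhead."""
    return gpus * (gpu_tdp_w + overhead_w_per_gpu) / 1000


# Hypothetical equal-throughput fleets: 68 vs 63 eight-GPU servers.
GPUS_MI350X, GPUS_MI355X = 68 * 8, 63 * 8

for overhead_w in (0, 250, 500):  # hypothetical per-GPU overhead in W
    p350 = cluster_kw(GPUS_MI350X, 1000, overhead_w)
    p355 = cluster_kw(GPUS_MI355X, 1400, overhead_w)
    gap = 100 * (p355 / p350 - 1)
    print(f"overhead {overhead_w:>3} W/GPU: MI355X cluster draws {gap:.1f}% more")
```

With no overhead the gap is roughly 30%; at a hypothetical 500W per GPU it falls to roughly 17%. Under these assumptions, the overhead narrows the gap but does not close it.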

I am by no means saying that you're wrong; comparing the two cards goes much deeper than just comparing the two chips. But when I do compare the two chips, the efficiency loss from stepping up to the MI355X is very significant.

Please bear in mind that the performance numbers in the specs are peak figures. They seem comparable, but no one mentions how quickly the air-cooled version may start to throttle, or what the performance numbers look like after two hours of work.

I can't see getting the MI355X with the MI430X so close behind. At least wait for the new parts to be generally available so you can get a deal.

You can purchase the installed server from the immersion cooling company instead of getting the server seller to cobble together an immersion cooling system. The server isn’t too tricky to install, while an immersion cooling system can be fairly technical to size and plumb.

What's old is new again. I remember oil companies branching out into cooling fluids over a decade ago: not just run-of-the-(saw)mill oil, but highly specialized coolants made for efficient heat transfer.