Here is a question we get quite often: how much power do DDR4 DIMMs actually use? Over the past few weeks we inadvertently collected an interesting data point on this. In the STH/DemoEval lab we have a 1U Supermicro system with dual Intel Xeon E5-2650L V3 processors, one of the load generation nodes we use for network testing. That system had the strangest combination of DDR4-2133 RDIMMs we have ever used: 16x 8GB DIMMs. These days we only buy 32GB and larger RDIMMs for the lab, and of the roughly 50 DDR4 RDIMM-capable servers in the lab, this was the only one with 8GB DDR4 RDIMMs.

As part of our DemoEval program, a client requested a Pentium D1508 machine set up with 2x 8GB DDR4 RDIMMs to see whether it was suitable as a NAS platform. Being thrifty, we decided to open this machine up and borrow some of its 8GB DIMMs. While we were at it, we went from 16x 8GB DDR4 RDIMMs to 4x 8GB RDIMMs (two per CPU) just to see how much power removing 12 DIMMs would actually save. We knew demand for RAM in that machine would be light for the rest of January, so it seemed like an opportune time. The results were borderline shocking.

Test Configuration

Our test configuration for this exercise was as follows:

- Supermicro SYS-6018R-WTR 1U

- 2x Intel Xeon E5-2650L V3 CPUs

- 16x 8GB Samsung DDR4 RDIMMs (M393A1G40DB0-CPB)

- 2x Intel DC S3710 400GB SSDs

- 1x Mellanox ConnectX-3 Pro EN dual port 40GbE NIC

This is a fairly decent low-power E5 V3 configuration. We pulled 12 of the 16 DIMMs to measure the delta.

We heat soaked both configurations for a full week and took our measurements once temperature and humidity in the data center were constant. That matters because servers tend to consume a few percent less power in their first few hours under load.

DDR4 RDIMM System Power Consumption Results

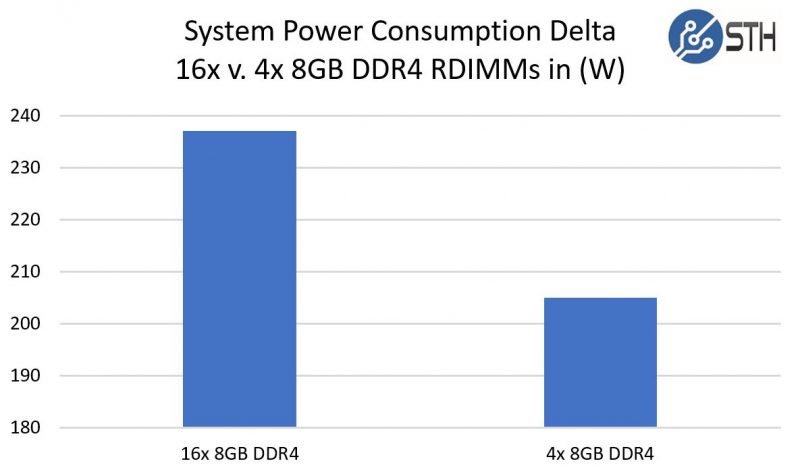

Here are the relevant figures for this system before and after pulling the 12x 8GB RDIMMs.

For those that want the hard numbers:

- 16x 8GB: 232W

- 4x 8GB: 205W

- Savings: 27W
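As a quick check on the arithmetic, here is a minimal sketch that derives the savings and its share of the original draw from the two PDU readings above. The helper name `summarize_savings` is hypothetical, chosen for illustration; the only inputs are the two wall-power figures from this test.

```python
# Wall power read from the PDU, in watts (the two figures listed above).
POWER_16X8GB_W = 232.0
POWER_4X8GB_W = 205.0


def summarize_savings(before_w: float, after_w: float) -> tuple:
    """Return (watts saved, savings as a percentage of the original draw)."""
    saved_w = before_w - after_w
    return saved_w, 100.0 * saved_w / before_w


if __name__ == "__main__":
    saved, pct = summarize_savings(POWER_16X8GB_W, POWER_4X8GB_W)
    # Prints: Saved 27W, 11.6% of the 16-DIMM draw
    print(f"Saved {saved:.0f}W, {pct:.1f}% of the 16-DIMM draw")
```

Measured against the 205W figure instead, the same 27W is about 13.2%; either way it clears the 10% mark.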

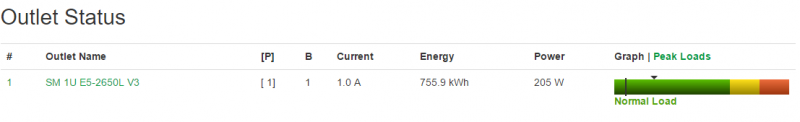

Here is a post-removal view of the server on our PDU running on a 208V circuit after heat soaking for a week at 100% utilization:

RAM power consumption is often underestimated, but in this case those 12x RDIMMs account for 27W of the original 232W, more than 10% of total system power.

Caveat to This Measurement

Diligent readers have likely noticed a caveat in this analysis: we are measuring not just the power consumption of the DDR4 RDIMMs but that of the entire server, so the delta also reflects secondary effects. We removed the second 700W 80 PLUS Platinum PSU to improve efficiency, but the remaining PSU is still running well below 50% load. There were other factors at play as well. This is a 1U chassis, and removing 75% of the DIMMs certainly changed the airflow and the cooling-related power consumption.
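To put "well below 50% load" into numbers, here is a rough sketch that assumes a single 700W PSU carried the whole load in both configurations (we do not state above whether the second PSU was pulled before the 16-DIMM reading). It divides wall power by rated output; because wall power includes the PSU's own conversion losses, this slightly overstates the true DC load, so treat it as an upper bound. `approx_psu_load` is a hypothetical helper.

```python
# Rated output of the single remaining PSU (assumed to serve both runs).
PSU_RATED_W = 700.0


def approx_psu_load(wall_w: float, rated_w: float = PSU_RATED_W) -> float:
    """Approximate PSU load as a fraction of its rated output.

    Uses wall (AC input) power, which includes the PSU's conversion losses,
    so the true DC load is somewhat lower: this is an upper bound.
    """
    return wall_w / rated_w


if __name__ == "__main__":
    for label, wall_w in (("16x 8GB", 232.0), ("4x 8GB", 205.0)):
        # Prints 33.1% and 29.3% respectively
        print(f"{label}: at most {100 * approx_psu_load(wall_w):.1f}% of rated output")
```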

An alternative methodology would be to use a system without chassis fans, or to measure the DDR4 RDIMMs more directly. That may work well for getting an exact per-DIMM figure, but it does not tell us the number we care about: the server’s metered power usage. PDUs and circuits are where we pay for power, so we feel this is the appropriate place to measure.

Final Words

Realistically, you want to populate at least four DDR4 RDIMMs per CPU (one per memory channel) on Intel Xeon E5-1600 and E5-2600 V3/V4 CPUs. There are many memory capacity-bound workloads (e.g. virtualization) where adding more RDIMMs so you can run fewer machines is the logical option. Our workload has high CPU and L3 cache utilization but low RAM utilization, so in this very narrow case we lost about 0.1%-0.2% performance while lowering power consumption by over 10%. Spread across the 12 removed DIMMs, the 27W savings works out to roughly 2.25W of metered power per DDR4 RDIMM. On low power nodes such as this, that is significant.
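The per-DIMM figure and the performance-versus-power trade-off can be worked through as below. This is a minimal sketch: it uses whole-system wall power, so it folds in the PSU and cooling effects from the caveat rather than isolating the DIMMs' own draw, and it normalizes the 16-DIMM run to a performance of 1.0. The helper names are hypothetical.

```python
BEFORE_W = 232.0   # 16x 8GB RDIMMs, wall power
AFTER_W = 205.0    # 4x 8GB RDIMMs, wall power
DIMMS_REMOVED = 12


def watts_per_dimm(before_w: float, after_w: float, removed: int) -> float:
    """Average wall-power savings attributed to each removed DIMM."""
    return (before_w - after_w) / removed


def perf_per_watt_gain(before_w: float, after_w: float, perf_loss: float) -> float:
    """Relative change in performance per watt after removing DIMMs.

    perf_loss is the fractional performance drop (0.002 means 0.2%).
    The 16-DIMM configuration is normalized to performance 1.0.
    """
    return ((1.0 - perf_loss) / after_w) / (1.0 / before_w) - 1.0


if __name__ == "__main__":
    # Prints: 2.25W per removed DIMM
    print(f"{watts_per_dimm(BEFORE_W, AFTER_W, DIMMS_REMOVED):.2f}W per removed DIMM")
    for loss in (0.001, 0.002):
        gain = perf_per_watt_gain(BEFORE_W, AFTER_W, loss)
        # Prints 13.1% for a 0.1% loss and 12.9% for a 0.2% loss
        print(f"{loss:.1%} slower -> {gain:.1%} better perf/W")
```

Even at the worse end of the measured performance loss, performance per watt improves by roughly 13% for this particular workload.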

The chart would have been even more impressive if you had cut it at 200W…

That’s the first thing I noticed as well.

Bar graphs are only useful for comparing values when they start at a true zero.

That chart provides absolutely no advantage over the raw numbers.

How about the same total RAM size?

What is the difference between more DIMMs versus fewer, larger DIMMs?

Maybe we’ll start seeing DRAM TRIM, where the OS disables refresh on a per-page basis. Current OSes tend to fill up free DRAM with the page cache, but that is becoming less important as SSD latency goes down. I wonder if we’ll see it first in servers or in mobile?

A caveat to the caveat. I appreciate the effort to isolate the actual draw of the additional RAM, but if the system has to pull more power to cool the additional RAM, then I would consider that part of the actual draw of the memory. You can’t have one without the other, after all, and I’d guess the total draw is more useful to someone building a system. Either way, a very useful article.

If only STH could do a similar test on LRDIMMs. LRDIMMs are much more power hungry than RDIMMs.