This week, Cadence released new IP for LPDDR6, the faster memory standard that will eventually displace LPDDR5X. In future generations, we expect to see AI accelerators integrate both HBM and LPDDR memory, so getting to a new, faster LPDDR generation is an important milestone.

Cadence IP for LPDDR6 Launched

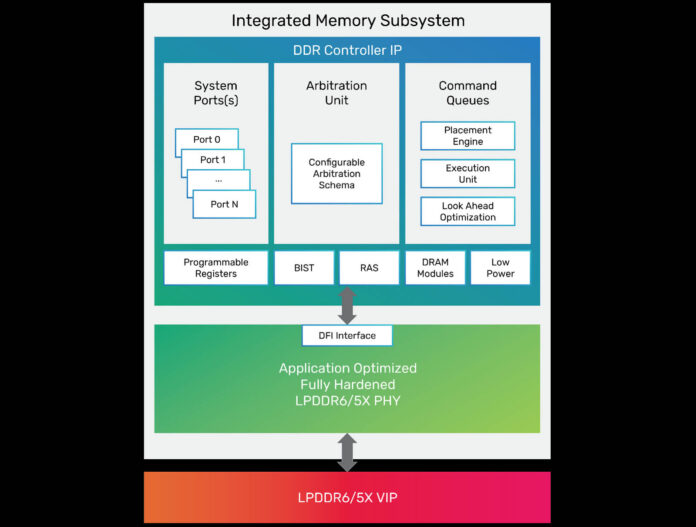

This week, Cadence announced that it successfully taped out what it calls the industry’s first LPDDR6/5X memory IP system operating at 14.4Gbps. That is a big performance increase for the new memory. We have already seen LPDDR5X even in consumer AI systems, such as the AMD Ryzen AI Max+ 395-based machine in our GMKtec EVO-X2 review, and we expect next-generation big AI chips to use LPDDR as well. For example, at Intel Foundry Direct Connect 2025, the company showed a chip combining HBM5 and LPDDR as a potential future design. The idea behind adding LPDDR is to give the AI accelerator a directly attached capacity tier that augments its onboard HBM.
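To put the 14.4Gbps figure in perspective, a per-pin data rate converts to theoretical peak bandwidth by multiplying by the interface width in bits and dividing by eight. The sketch below assumes hypothetical bus widths chosen only for illustration (they are not Cadence configurations) and ignores command, ECC, and other protocol overhead, so real sustained bandwidth will be lower.

```python
def peak_bandwidth_gbs(data_rate_gbps_per_pin: float, bus_width_bits: int) -> float:
    """Theoretical peak bandwidth in GB/s (decimal units).

    Assumption: every data pin transfers at the full per-pin rate with no
    protocol, refresh, or ECC overhead. Real-world bandwidth will be lower.
    """
    # Gbit/s per pin * number of pins = total Gbit/s; divide by 8 for GB/s.
    return data_rate_gbps_per_pin * bus_width_bits / 8


if __name__ == "__main__":
    # Hypothetical interface widths, for illustration only.
    for width in (16, 32, 64):
        bw = peak_bandwidth_gbs(14.4, width)
        print(f"{width:>3}-bit @ 14.4 Gbps/pin: {bw:.1f} GB/s")
```

Running it prints 28.8, 57.6, and 115.2 GB/s for the 16-, 32-, and 64-bit cases, which shows how quickly a higher per-pin rate compounds as an accelerator adds more memory channels.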

Cadence’s IP design also supports LPDDR6/LPDDR5X CAMM2 modules, which means it targets not just the AI market but also future workstations and mobile devices.

Final Words

These types of IP packages tend to come out well before they are used. For example, Cadence already has PCIe Gen7 IP packages even though the industry is just starting to adopt PCIe Gen6 with parts like the NVIDIA ConnectX-8 NIC and B300 GPU. We expect to see PCIe Gen6 servers in 2026, which puts PCIe Gen7 products quite a ways off. At the same time, fast memory is going to be important in future chip designs to feed larger accelerators, especially given how sensitive workloads like AI are to memory bandwidth. Having the Cadence IP available now means designers can start building chiplets around the new standard, and those chiplets will show up in future generations of high-performance chips once they are produced and integrated into packages.

Cadence is pushing the envelope here, betting big on LPDDR6 and LPDDR5X as key memory technologies for next-generation AI and high-bandwidth systems.