ASRock Rack 4UXGM-GNR2 CX8 Power Consumption

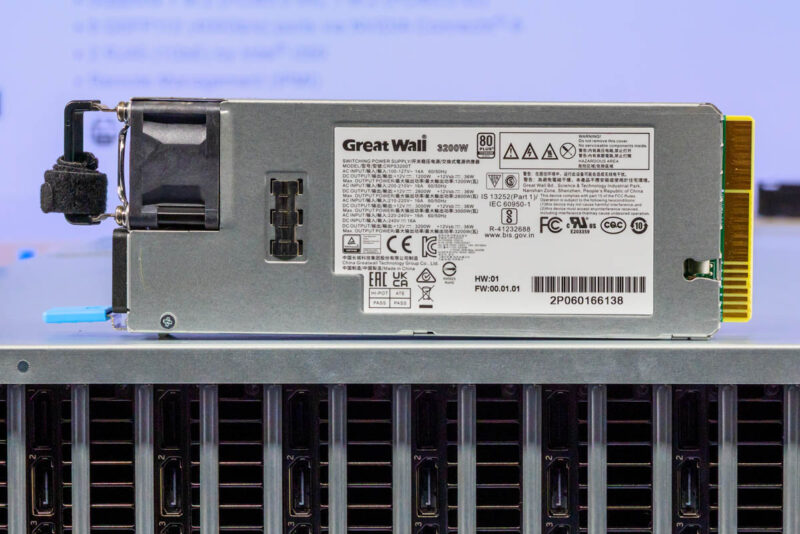

The system comes with four 3.2kW PSUs, so it is fair to say we are in a vastly different era of server power consumption than a decade ago. The PSUs are designed for 3+1 operation, meaning one power supply can fail while the system continues to operate.
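To put the 3+1 arrangement in numbers, here is a minimal sketch that computes how much load the PSU group can carry with one unit failed. The helper name `redundant_capacity_w` is hypothetical. The sketch assumes identical PSUs, even load sharing, and that the 3.2kW nameplate rating applies at the site's input voltage.

```python
# Hypothetical helper: usable capacity of an N+R redundant PSU group.
# Assumes identical PSUs, even load sharing, and full nameplate rating.
def redundant_capacity_w(psu_count: int, psu_rating_w: int, redundant: int) -> int:
    """Return the load (W) the system can still carry after `redundant` PSUs fail."""
    if not 0 <= redundant < psu_count:
        raise ValueError("redundant must be in [0, psu_count)")
    return (psu_count - redundant) * psu_rating_w

# Four 3.2kW PSUs in a 3+1 configuration.
total_w = 4 * 3200                                   # installed capacity
usable_w = redundant_capacity_w(psu_count=4, psu_rating_w=3200, redundant=1)
print(total_w, usable_w)  # 12800 9600
```

In other words, 12.8kW of PSU capacity is installed, but only about 9.6kW should be counted on if the system must ride through a single PSU failure.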

Here is a look at one of the 3.2kW 80Plus Titanium PSUs.

We were able to get this system to around 8kW, but there is likely room to push it higher. For context, eight 600W GPUs alone draw 4.8kW. The CPUs and memory add roughly another 750-800W. On top of that are a high-end DPU with its own memory and 16-core CPU, four ConnectX-8 NICs, and the front E1.S SSDs, all of which add meaningful power. That puts the primary components alone above 6kW, before accounting for the air cooling.
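The power budget above can be checked with simple arithmetic. This sketch uses only the figures quoted in the text; it shows that the DPU, NICs, and SSDs need to add only about 400-450W combined to push the primary components past 6kW, and it compares the observed ~8kW against three 3.2kW PSUs. It is a simplification that ignores PSU conversion losses and whether the 8kW figure was measured at the wall.

```python
# Rough power budget using only the figures quoted in the text.
GPU_COUNT = 8
GPU_W = 600                      # per-GPU power
CPU_MEM_W = (750, 800)           # CPUs plus memory, low/high estimate

gpu_total_w = GPU_COUNT * GPU_W                          # 4800 W
subtotal_w = tuple(gpu_total_w + x for x in CPU_MEM_W)   # (5550, 5600)

# What the DPU, ConnectX-8 NICs, and E1.S SSDs must add to exceed 6 kW.
remaining_to_6kw = tuple(6000 - x for x in subtotal_w)   # (450, 400)

# Observed ~8 kW versus the capacity of three 3.2 kW PSUs (3+1 mode).
observed_w = 8000
three_psu_capacity_w = 3 * 3200                          # 9600 W
margin_w = three_psu_capacity_w - observed_w             # 1600 W

print(gpu_total_w, subtotal_w, remaining_to_6kw, margin_w)
# 4800 (5550, 5600) (450, 400) 1600
```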

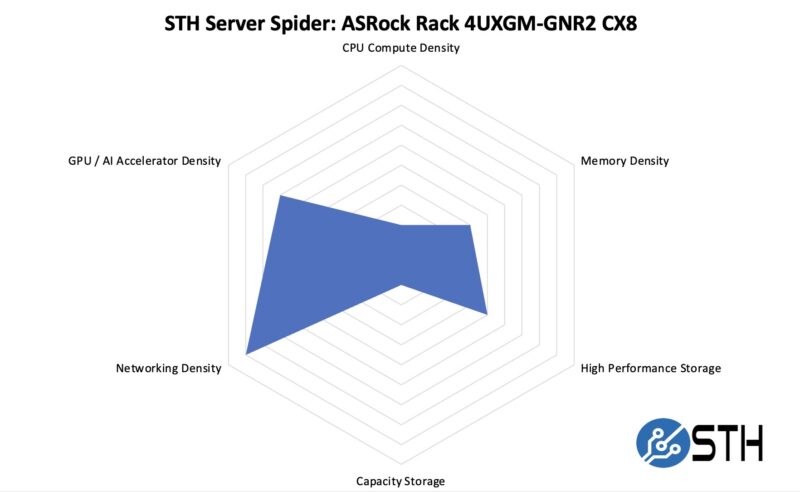

STH Server Spider: ASRock Rack 4UXGM-GNR2 CX8

In the second half of 2018, we introduced the STH Server Spider as a quick reference for where a server system’s aptitude lies. Our goal is to give a quick visual depiction of the types of parameters a server is targeted at.

Clearly, this system is not attempting to have the densest storage configuration or the most CPU and memory you can fit in 4U. What it does, however, is pack eight Blackwell GPUs and 3.6Tbps+ of networking into 4U, which would have been wild to think about just a few years ago. One can rightly note that an NVIDIA NVL72 rack can have higher GPU and networking density per U, but make no mistake, this system is up there in terms of density.

Final Words

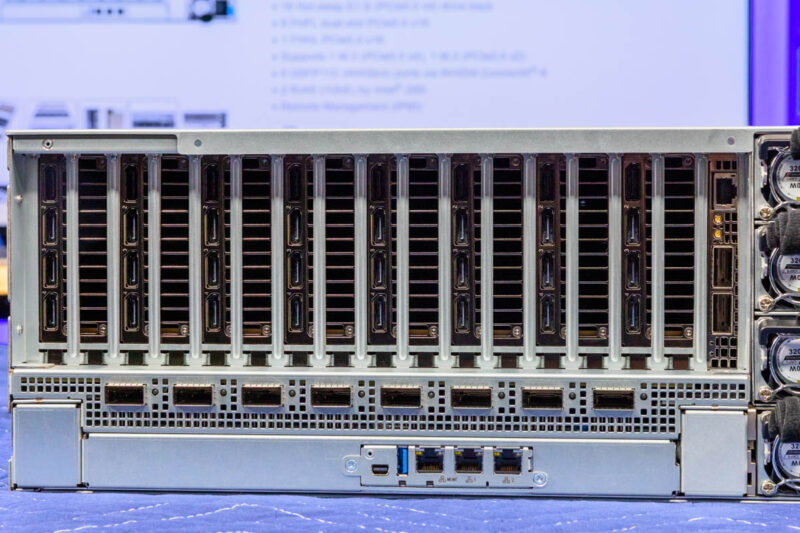

The ASRock Rack 4UXGM-GNR2 CX8 takes both evolutionary and revolutionary steps to advance eight-GPU PCIe systems. For example, the compact 4U air-cooled form factor makes it denser than the 8-GPU HGX platforms, and part of what makes that possible is the switch to E1.S SSDs on the front of the system. For context, if this system used 2.5″ SSDs, they would likely occupy the entire top 2U of the chassis aside from some extra venting. That would restrict front airflow and make the fans work harder. The move to E1.S is an example of an evolutionary change.

On the revolutionary side, the move to the NVIDIA ConnectX-8 PCIe switch board completely changes these servers. A decade ago, we might commonly have seen 5-10Gbps per GPU, with one or two 40GbE NICs in a system. Now, each GPU gets 400Gbps of network bandwidth, and the system gets an additional 400Gbps through the DPU. Even compared to just last year’s systems, that is double the per-GPU network bandwidth. The board also eliminates the 2-4 PCIe switches that would otherwise have handled CPU, GPU, and NIC connectivity. The revolution is removing those components while improving cooling for the NICs and optics and increasing speeds.
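The bandwidth figures above can be reconciled with a few lines of arithmetic. This sketch assumes the legacy 5-10Gbps range comes from one or two 40GbE NICs shared evenly across eight GPUs, and it treats "double last year's" as implying roughly 200Gbps per GPU in the prior generation; both are readings of the text rather than measured values. It also shows where the 3.6Tbps+ system figure comes from.

```python
GPUS = 8

# A decade ago: one or two 40GbE NICs shared evenly across eight GPUs (assumption).
legacy_per_gpu_gbps = [nics * 40 / GPUS for nics in (1, 2)]   # [5.0, 10.0]

# Now: 400Gbps per GPU through ConnectX-8, plus 400Gbps through the DPU.
per_gpu_gbps = 400
dpu_gbps = 400
system_gbps = GPUS * per_gpu_gbps + dpu_gbps                   # 3600 Gbps = 3.6Tbps

# Per-GPU improvement over the legacy range.
speedup = [per_gpu_gbps / x for x in legacy_per_gpu_gbps]     # [80.0, 40.0]

# "Double last year's" implies roughly 200Gbps per GPU previously (inference).
last_year_per_gpu_gbps = per_gpu_gbps / 2                      # 200.0

print(legacy_per_gpu_gbps, system_gbps, speedup, last_year_per_gpu_gbps)
# [5.0, 10.0] 3600 [80.0, 40.0] 200.0
```

Under these assumptions, per-GPU network bandwidth has grown by roughly 40-80x over the decade.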

For organizations looking to scale NVIDIA RTX Pro 6000 Blackwell Server Edition GPUs to handle AI workloads, or mixed workloads including VDI and visualization, this is a neat new architecture.

It is hard to overstate how big a change this is in a segment we have been covering for over a decade. It is also notable that we reviewed our first ASRock Rack 8-GPU server almost 11 years ago, so the company has been building this class of system for a long time. This is a very cool system indeed.