Today we are testing another large multi-GPU server, the ASRock Rack 3U8G-C612. The machine enters our datacenter testing facility with an impressive set of 8x AMD FirePro W9100 workstation graphics cards. The ASRock Rack 3U8G-C602 server has been updated to the 3U8G-C612 model, which now features dual Intel Xeon E5-2600 V3 processors, DDR4 memory and the C612 chipset.

ASRock Rack 3U8G-C612 Key Features:

- Dual socket R3 (LGA 2011) supports Intel Xeon processor E5-2600 v3 family; QPI up to 9.6GT/s

- Up to 512GB ECC, up to DDR4 2133MHz; 16x DIMM slots

- Expansion slots: 8 PCI-E 3.0 x16 (double-width) slots, Mezzanine Card Support for 1GbE x 2 or 10GbE x2

- Dual 1GBase-T LAN with Intel i210 and dedicated IPMI LAN port

- 6x 2.5″ SATA Hot-swap drive bays

- 8x 80 mm x 38 mm Hot-Swap cooling fans

- 4 (3+1) redundant power supplies, each up to 1200 Watts (200V AC) or 1000 Watts (100V AC)

- Server size: 700 x 430 x 130.5 mm (27.6 x 16.9 x 5.1 inches)

The x8 mezzanine slot supports mezzanine cards with 2x 10G Ethernet or 2x GLAN (2x 10GBase-T by Intel X540, 2x SFP+ by Intel 82599ES, or 2x GLAN by Intel i350) for additional network connectivity.

The ASRock Rack 3U8G-C612 server comes in a 3U package: height 5.1″ (130.5mm), width 16.9″ (430mm), depth 27.6″ (700mm). An additional top plate is provided for GPUs that have top-mounted power connectors, which brings the server height to 4U.

The ASRock Rack 3U8G-C612

The ASRock Rack 3U8G-C612 server was delivered to us in an impressively large box.

The shipping box itself is large and well protected with plenty of foam inserts to protect the contents inside. The kit comes with the server rails, accessory box, two CPU heat sinks (white boxes), an optional 4U top, four power cords and the server itself.

The CPU heat sinks supplied with the 3U8G-C612 kit are standard Socket 2011 R3 passive CPU cooling blocks that we see in many servers. Chassis fans provide the cooling.

The accessory box includes a driver DVD, power connectors for 8x GPU’s and additional mounting screw kits.

The shipping box includes all the accessories needed to get the server set up and running. The contents are well packed in a heavy duty shipping box, and heavy duty foam padding protects them very well.

After taking the server out of the shipping box we get our first look at the 3U8G-C612. The server comes equipped with the standard top which keeps the server height at 3U.

For GPU’s that have top mounted power connectors an optional top is provided that allows for those power connections to be made. The 3U form factor does not afford enough space atop the cards to allow for the PCIe power connector.

With the optional top installed we now have a 4U server.

Here we can see the different rack mounting hole locations and space needed for each configuration of the server.

The front control panel on the left side of the server features Power Button and LED, UID Button and LED, LAN1, LAN2, LAN3, LAN4 Activity LEDs, HDD Activity LED, System Event LED, NMI (Nonmaskable Interrupt) Button, System Reset Button and 2x USB 2.0 ports.

Turning the server around we now get a look at the back.

Here we see the 6x 2.5” Hot-Swap drive bays on the left and the 4x power supplies along the bottom of the server. IO features include 2x USB 2.0 ports, 2x USB 3.0 ports, Serial Port, VGA output, 2x RJ45 by Intel i210 and 1x RJ45 Dedicated IPMI LAN port.

The 6x drive bays include standard hot-swap drive trays which can have 2.5” HDD’s or SSD’s installed.

The 3U8G-C612 includes four hot-swap power supplies in a 3+1 redundant configuration. Depending on the service they are connected to, each provides 1000W at 90-132V AC or 1200W at 200-264V AC. That is up to 4.8kW of combined capacity among them, or 3.6kW if one supply is held in reserve for redundancy.
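As a quick check of the power supply arithmetic, here is a minimal sketch. The function name `psu_capacity` and its dictionary keys are made up for illustration, and the figures are the per-supply ratings quoted in this review.

```python
def psu_capacity(total_units: int, redundant_units: int, watts_per_unit: int) -> dict:
    """Return combined and redundancy-protected capacity in watts.

    Hypothetical helper for illustration only; it simply multiplies
    the per-supply rating by the number of supplies.
    """
    if not 0 <= redundant_units < total_units:
        raise ValueError("redundant_units must be between 0 and total_units - 1")
    return {
        "combined_w": total_units * watts_per_unit,
        "protected_w": (total_units - redundant_units) * watts_per_unit,
    }


# 4 supplies in a 3+1 arrangement, rated 1200 W on 200V-class input
high_line = psu_capacity(4, 1, 1200)
# Same supplies rated 1000 W on 100V-class input
low_line = psu_capacity(4, 1, 1000)

print("200V-class input:", high_line)
print("100V-class input:", low_line)
```

The "protected" figure is the load the chassis could still carry with one supply offline, which is the number that matters when sizing a fully loaded GPU configuration.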

Taking the tops off of the server we start to get a look at the inside of the 3U8G-C612.

When opening the server for the first time we find a series of blanking plates installed over the PCIe slots. These help to direct air flow in slots that will not be populated with a GPU card. For each GPU used, simply remove the corresponding plates.

Here we see what ASRock calls the Switch Board (SWB).

The front of the server is at the bottom of the picture. At the top, 8x black power connectors for the GPUs are located right next to the fan bar. The silver heat sinks provide cooling for 4x Avago Technologies PLX 8747 chips that split the four PCIe x16 links from the motherboard into eight x16 slots. The PLX 8747 is a 48-lane, 5-port, PCIe Gen 3 switch device developed on 40nm technology. Here is the block diagram showing the electrical connections:
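The lane accounting on the switch board can be sketched as follows. This assumes each 48-lane switch uses one x16 uplink to the motherboard and feeds two x16 GPU slots. That layout is our reading of the four-switch, eight-slot arrangement rather than something taken from an ASRock document, and the `PcieSwitch` class is purely illustrative.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PcieSwitch:
    """Illustrative model of one PCIe switch (not a vendor API)."""
    total_lanes: int
    upstream_lanes: int
    downstream_slots: int
    lanes_per_slot: int

    def lanes_used(self) -> int:
        # Uplink lanes plus all downstream slot lanes
        return self.upstream_lanes + self.downstream_slots * self.lanes_per_slot

    def oversubscription(self) -> float:
        # Ratio of downstream bandwidth to upstream bandwidth
        return self.downstream_slots * self.lanes_per_slot / self.upstream_lanes


# Assumed layout: x16 up, 2 x x16 down, on a 48-lane device
switch = PcieSwitch(total_lanes=48, upstream_lanes=16, downstream_slots=2, lanes_per_slot=16)
switches = [switch] * 4

gpu_slots = sum(s.downstream_slots for s in switches)
print("GPU slots:", gpu_slots)
print("Lanes used per switch:", switch.lanes_used(), "of", switch.total_lanes)
print("Downstream:upstream ratio:", switch.oversubscription())
```

Under these assumptions, two GPUs behind the same switch share one x16 uplink for host traffic, while traffic between those two GPUs can stay on the switch.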

The 3U8G-C612 comes equipped with 8x 80×38 mm system fans for cooling. These Sunon PF80381B1-000C-S99 fans are 12V DC and rated at 193.5 m³/h.

Now looking at the CPU/RAM area of the server.

We can see the CPUs are located right behind the cooling bar for optimal cooling. The fans pull air directly from the GPUs at the front of the server, so this airflow is preheated. In operation we did not find this to be a problem in our tests. When cooling 2.4kW of GPUs, cooling 300W worth of RAM, I/O chips and two Xeon CPUs is relatively simple.
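To get a feel for how much the air is preheated, here is a rough estimate using the steady-state relation ΔT = P / (ρ · c_p · Q). It assumes all eight fans deliver their full rated 193.5 m³/h, which is a free-air figure that a loaded chassis will not reach, and it uses textbook values for air density and specific heat. Treat the result as a lower bound for illustration, not a measurement.

```python
AIR_DENSITY_KG_M3 = 1.2         # assumption: air at roughly room temperature
AIR_SPECIFIC_HEAT_J_KG_K = 1005  # assumption: dry air at constant pressure


def air_temperature_rise(power_w: float, airflow_m3_per_h: float) -> float:
    """Temperature rise in kelvin of air carrying away power_w at the given flow."""
    airflow_m3_per_s = airflow_m3_per_h / 3600.0
    return power_w / (AIR_DENSITY_KG_M3 * AIR_SPECIFIC_HEAT_J_KG_K * airflow_m3_per_s)


total_airflow = 8 * 193.5  # eight fans at their rated free-air flow, in m^3/h
rise = air_temperature_rise(2400, total_airflow)
print(f"Total rated airflow: {total_airflow:.0f} m^3/h")
print(f"Estimated air temperature rise at 2.4 kW: {rise:.1f} K")
```

Real airflow through a dense chassis is lower than the free-air rating, so the actual rise would be higher than this estimate.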

Inside the server and looking at the back IO area we see the power connections and storage connections over on the left side. The white Mezzanine Card slot just behind the memory slots provides support for 1GbE x 2 or 10GbE x2 network cards.

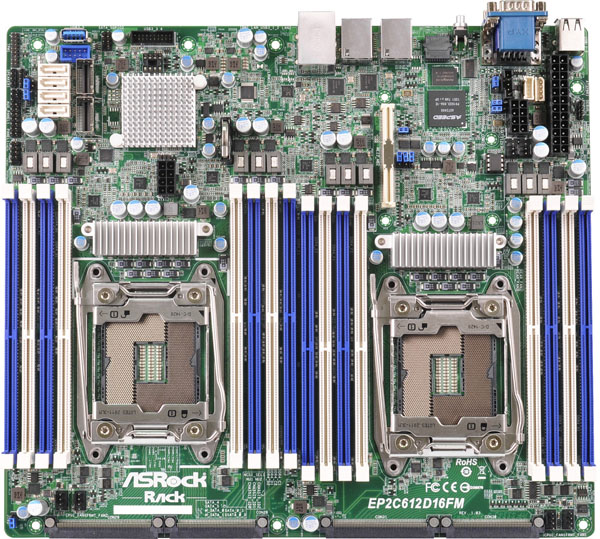

The motherboard used in the 3U8G-C612 is the EP2C612D16FM.

Back in September of 2014 this was one of the first motherboards we reviewed that supported Intel Haswell-EP Xeon E5-2600 V3 processors and DDR4 memory. It features direct connect PCIe slots to the Switch Board in the front of the server and comes in a small SSI CEB 12″ x 10.5″ (30.5 cm x 26.7 cm) form factor.

The small form factor of the EP2C612D16FM motherboard allows for very smart installation into the 3U8G-C612 server.

The back of the server has two thumb screws which unlock the motherboard tray handles. These open and allow the entire motherboard assembly to be pulled directly out the back of the server for easy maintenance or replacement.

The back of the motherboard has 4x PCIe connectors which connect to the front switch board via an interposer board.

After looking over the server we are now ready to start installing our GPU’s. For this review we are using AMD FirePro W9100 GPUs.

We removed all the blanking plates from the front of the server before installing the GPU’s. We found that connecting the power connectors to the GPU’s first, then installing the GPU into the server was the easiest way to do this. Then reach behind the GPU and plug the power cable into the motherboard GPU power socket. This is a tight fit to get your hand down in between the GPU and the cooling bar but we were able to get all eight cards installed without issue.

One thing to take note of after installing the GPUs: make sure the power cables do not come in contact with the cooling fans. We would like to see ASRock install a fan grille to prevent cables from coming in contact with the cooling fans.

Here we have installed all eight GPU’s and double checked to make sure all power cables were pushed back away from the cooling fans.

The last thing to do when finishing up installing the GPU’s is swing up the GPU retention lever and screw down the retaining screw to lock the GPU’s into place.

Here is what the installation looks like with the 3U8G-C612 fully loaded with 8x AMD FirePro W9100 GPU’s.

The GPU’s located at the front of the server receive direct outside air for optimal cooling.

BIOS

Looking at the BIOS we can see how it reports the PCIe slots.

The paired sets of PCIe x16 slots are adjustable here, with Gen 1, Gen 2, Gen 3 and Auto settings.

Remote Management

ASRock provides remote management capabilities for remotely administering the server. For remote management simply enter the IP address for the server into your browser and login.

The login information for the 3U8G-C612:

- Username: admin

- Password: admin

We ran our tests completely through STH’s new data center in Sunnyvale, California under remote management.

Remote management also reports the temperature of each CPU and GPU, along with the other readings typical of remote management solutions.

AMD FirePro W9100 Professional Graphics GPU’s

For this review we used AMD FirePro W9100 GPU’s.

These are professional workstation graphics cards but also have the ability to be used in compute systems.

Key AMD FirePro W9100 Cooling/Power/Form Factor Specs:

- Max Power: 275W

- Bus Interface: PCIe 3.0 x16

- Slots: Two

- Form Factor: Full height/ Full length

- Cooling: Active cooling

We often see AMD FirePro W9100s in professional workstations used for graphics and media applications. These GPUs have 6x Mini DisplayPort outputs and can support resolutions of 4096×2160. They also have 2,816 stream processors (44 compute units), which makes this a strong card for compute workloads.

This is how GPU-Z reports these cards.

Test Configuration

We used remote access to run all tests and OS installs on the 3U8G-C612 while it was running in the new datacenter.

- Processors: 2x Intel Xeon E5-2683 V3 (14 cores each)

- Memory: 16x 16GB Crucial DDR4 (256GB Total)

- Storage: 1x Intel DC S3700 400GB SSD

- GPU’s: 8x AMD FirePro W9100 GPU’s

- Operating Systems: Ubuntu 14.04 LTS and Windows Server 2012 R2

One of the first issues we ran into while testing the 3U8G-C612 and the AMD FirePro W9100s on Windows Server 2012 R2 was that many applications could see all 8x GPUs, but Device Manager and the benchmarks would only report 5x GPUs available for use. With Linux we did not have this problem.

We asked AMD about this issue and were told that Windows can only support up to 28 video sources. With 8x W9100s, each with six outputs, we had 48 video sources, so Windows was running out of resources. The answer to this problem was a utility called mGPU.exe, which adjusts a registry key to use only 1 video source per card. After using this utility we had only 8 video sources, which is under the Windows limit. All 8x GPUs were then seen by the system, and benchmarks and testing went very smoothly. In this case we are using the W9100s in compute mode, so the reduced number of video sources was not a problem.
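The video source count behind this issue is simple arithmetic, sketched below. The limit of 28 is the figure AMD reported to us, and the six outputs per card come from the W9100's display connectors. The function and constant names are made up for illustration, and the sketch does not touch the registry or mGPU.exe itself.

```python
WINDOWS_VIDEO_SOURCE_LIMIT = 28  # limit as reported to us by AMD


def video_sources(cards: int, sources_per_card: int) -> int:
    """Total video sources exposed by a set of identical cards."""
    return cards * sources_per_card


default = video_sources(cards=8, sources_per_card=6)     # all six outputs enumerated
restricted = video_sources(cards=8, sources_per_card=1)  # after limiting to one per card

for label, count in (("default", default), ("restricted", restricted)):
    status = "within" if count <= WINDOWS_VIDEO_SOURCE_LIMIT else "over"
    print(f"{label}: {count} video sources, {status} the limit of {WINDOWS_VIDEO_SOURCE_LIMIT}")
```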

With the newly added capabilities of the new STH datacenter we have the ability to run machines like this that require large power loads that would exceed what one can run off of standard home and office circuits. The new racks in the data center can run up to 208V 30amp loads which is perfect for these multi GPU servers.
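For a sense of scale, here is a rough circuit budget. It assumes a single-phase 208V 30A circuit, a power factor of 1, and the common North American practice of loading a circuit to only 80% for continuous use. Those assumptions are ours and are not a description of how the STH racks are actually provisioned. The 2,400 W server figure is the approximate peak we measured under the stress test later in this review.

```python
import math


def usable_circuit_watts(volts: float, amps: float, continuous_derate: float = 0.8) -> float:
    """Continuous power budget of a circuit, assuming power factor 1."""
    return volts * amps * continuous_derate


budget = usable_circuit_watts(208, 30)
server_peak_w = 2400  # approximate measured peak for this server under stress
servers_per_circuit = math.floor(budget / server_peak_w)

print(f"Continuous budget: {budget:.0f} W")
print(f"Servers at {server_peak_w} W each: {servers_per_circuit}")
```

By the same arithmetic, a 120V 15A office circuit derated to 80% offers 1,440 W, well short of what a loaded eight-GPU server draws.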

Here we see the 3U8G-C612 installed in the datacenter.

AIDA64 Memory Test

The EP2C612D16FM motherboard used in the 3U8G-C612 server shows excellent Copy and Read bandwidth but is lagging in Writes.

Memory latency measured ~119.2ns, whereas our typical systems using 16x 16GB DIMMs come in at about ~78ns.

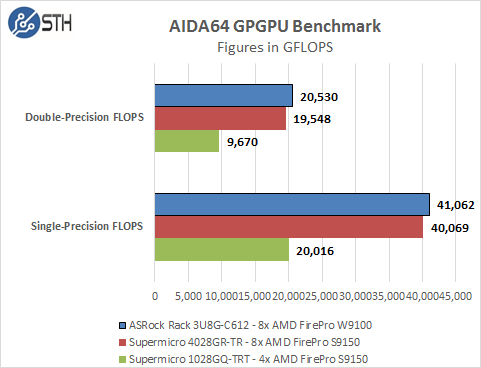

AIDA64 GPGPU Test

For our GPU testing we use AIDA64 Engineer to run the provided GPGPU tests, which can run on all eight GPUs and the CPUs.

The first test we ran was the AIDA64 GPGPU benchmark. This test sees every GPU and CPU present in the system and goes through a lengthy benchmark run. Our combined single-precision performance came in at 41,062 GFLOPS, or roughly 41 TFLOPS!

Memory Read: Measures the bandwidth between the GPU device and the CPU, effectively measuring the performance the GPU could copy data from its own device memory into the system memory. It is also called Device-to-Host Bandwidth. (The CPU benchmark measures the classic memory read bandwidth, the performance the CPU could read data from the system memory.)

Memory Write: Measures the bandwidth between the CPU and the GPU device, effectively measuring the performance the GPU could copy data from the system memory into its own device memory. It is also called Host-to-Device Bandwidth. (The CPU benchmark measures the classic memory write bandwidth, the performance the CPU could write data into the system memory.)

Memory Copy: Measures the performance of the GPU’s own device memory, effectively measuring the performance the GPU could copy data from its own device memory to another place in the same device memory. It is also called Device-to-Device Bandwidth. (The CPU benchmark measures the classic memory copy bandwidth, the performance the CPU could move data in the system memory from one place to another.)

Single-Precision FLOPS: Measures the classic MAD (Multiply-Addition) performance of the GPU, otherwise known as FLOPS (Floating-Point Operations Per Second), with single-precision (32-bit, “float”) floating-point data.

Double-Precision FLOPS: Measures the classic MAD (Multiply-Addition) performance of the GPU, otherwise known as FLOPS (Floating-Point Operations Per Second), with double-precision (64-bit, “double”) floating-point data. Not all GPUs support double-precision floating-point operations. For example, all current Intel desktop and mobile graphics devices only support single-precision floating-point operations.

24-bit Integer IOPS: Measures the classic MAD (Multiply-Addition) performance of the GPU, otherwise known as IOPS (Integer Operations Per Second), with 24-bit integer (“int24”) data. This special data type is defined in OpenCL on the basis that many GPUs are capable of executing int24 operations via their floating-point units, effectively increasing the integer performance by a factor of 3 to 5, as compared to using 32-bit integer operations.

32-bit Integer IOPS: Measures the classic MAD (Multiply-Addition) performance of the GPU, otherwise known as IOPS (Integer Operations Per Second), with 32-bit integer (“int”) data.

64-bit Integer IOPS: Measures the classic MAD (Multiply-Addition) performance of the GPU, otherwise known as IOPS (Integer Operations Per Second), with 64-bit integer (“long”) data. Most GPUs do not have dedicated execution resources for 64-bit integer operations, so instead they emulate the 64-bit integer operations via existing 32-bit integer execution units. In such case 64-bit integer performance could be very low.
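The single-precision definition above lends itself to a sanity check against the combined 41,062 GFLOPS result. A MAD counts as two floating-point operations, so a theoretical peak is stream processors × 2 × clock. The 930 MHz engine clock below is an assumption taken from AMD's published W9100 specifications and is not stated in this review. Note also that the measured figure includes the CPUs, so the ratio is only indicative.

```python
def peak_sp_gflops(stream_processors: int, clock_ghz: float) -> float:
    """Theoretical single-precision peak: one MAD (2 FLOPs) per stream processor per clock."""
    return stream_processors * 2 * clock_ghz


ASSUMED_CLOCK_GHZ = 0.930  # assumption: published W9100 engine clock, not from this review

per_card = peak_sp_gflops(2816, ASSUMED_CLOCK_GHZ)
eight_cards = 8 * per_card
measured = 41_062  # GFLOPS, combined AIDA64 result reported above (includes the CPUs)

print(f"Per card: {per_card:.0f} GFLOPS, eight cards: {eight_cards:.0f} GFLOPS")
print(f"Measured / theoretical: {measured / eight_cards:.1%}")
```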

clPEAK Benchmark

We also ran clPEAK benchmark on the system and saw numbers right where we expected them to be. This test runs on only one GPU at a time so the screen shown was repeated eight times.

The AMD FirePro W9100 GPU tested here showed improved performance in single-precision and integer tests versus the AMD S9150s we tested before. However, the S9150s have better performance in memory bandwidth and double-precision compute.

GPUPI 2.0

GPUPI is the first General Purpose GPU benchmark officially listed on HWBOT and also the first that can simultaneously use different video cards. Only the same driver is required. More information can be found on the GPUPI 2.0 page.

This was the last benchmark we ran on this system, and we received a result that would rank as a World Record, with a time of 3 minutes, 6.041 seconds to complete. This result was slightly faster than our last WR run, mainly because of the different GPUs used in this system.

Power Consumption

For final testing we have the server in our data center connected through our PDU using 4 outlets numbered 8, 9, 10, 11. The graph shows what each outlet’s power use is. This is an improvement over previous testing where we only had total power consumption figures as we can now see a per-power supply readout.

For our tests we use the AIDA64 stress test, which allows us to stress all aspects of the system. We start off with our system at idle, which is pulling ~400 watts, and press the stress test start button. You can see power use quickly jumps to over 2,000 watts and continues to climb until we cap out at ~2,400 watts. The 3U8G-C612 appears to have a very even power distribution across the 4 outlets.
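The even distribution makes it easy to estimate how hard each supply is working. This sketch assumes the load is shared perfectly evenly and that the supplies are running at their 1200 W high-line rating. The helper name is made up for illustration.

```python
SUPPLY_RATING_W = 1200  # per-supply rating on 200V-class input


def per_supply_load(total_w: float, active_supplies: int) -> float:
    """Load on each supply, assuming perfectly even sharing."""
    return total_w / active_supplies


peak_w = 2400  # approximate peak from the stress test
all_running = per_supply_load(peak_w, 4)
one_failed = per_supply_load(peak_w, 3)

print(f"4 supplies: {all_running:.0f} W each ({all_running / SUPPLY_RATING_W:.0%} of rating)")
print(f"3 supplies: {one_failed:.0f} W each ({one_failed / SUPPLY_RATING_W:.0%} of rating)")
```

On these numbers the server would still have headroom at peak load with one supply offline, which is what the 3+1 arrangement is meant to provide.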

Thermal Testing

To measure thermals, we used HWiNFO's sensor monitoring functions and saved the data to a file while we ran our stress tests.
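A saved sensor log can be summarized with a few lines of Python. The sketch below assumes the log is a comma-separated file with one header row. The column names and the sample rows are made-up placeholders, not the real HWiNFO column names and not measurements from this review.

```python
import csv
import io


def column_maxima(csv_text: str, columns: list[str]) -> dict[str, float]:
    """Return the maximum numeric value seen in each requested column."""
    reader = csv.DictReader(io.StringIO(csv_text))
    maxima: dict[str, float] = {}
    for row in reader:
        for name in columns:
            try:
                value = float(row[name])
            except (KeyError, TypeError, ValueError):
                continue  # skip missing or non-numeric cells
            maxima[name] = max(value, maxima.get(name, value))
    return maxima


# Placeholder data for illustration only; not real readings
sample_log = """Time,CPU0 [C],GPU0 [C]
12:00:00,41,55
12:00:05,47,71
12:00:10,52,78
"""

print(column_maxima(sample_log, ["CPU0 [C]", "GPU0 [C]"]))
```

For a real log file, read its contents with `open(path, newline="").read()` and pass the column names that actually appear in its header row.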

Here we see what our HWiNFO sensor layout looks like while we test.

Now let us take a look at how heat from the stress test loads was handled by the cooling system in the 3U8G-C612. This shows that the server's 8x 80 mm x 38 mm hot-swap cooling fans are very powerful and are up to the task of keeping the CPUs cool even after the airflow is preheated by the GPUs.

Here we see the temperatures of the GPUs while the stress test is running. The 8x 80 mm x 38 mm hot-swap cooling fans pull air directly through the front of the server to cool the GPUs, which helps keep them cool while running under heavy loads.

Conclusion

Here we are at the end of our testing and review of the 3U8G-C612, ASRock Rack's premier GPU server. We quickly found a challenge while running Windows Server 2012 R2, but AMD provided a quick response and a solution that worked well.

Locating the GPUs at the front has clear advantages in a server like this. Fresh cool air is pulled through these cards, which is exactly where it's needed. Most of the heavy workloads will be done on the GPUs, so this provides a good cooling solution. The optional top for GPUs with top-mounted power connectors also gives this server added flexibility in the choice of GPUs to run, at the expense of adding 1U of extra height. Spreading the GPUs across the width of the server opens up space between them, which has an added cooling bonus with side-mounted active cooling solutions.

We would like to see a fan grille installed between the GPUs and the cooling bar. Care must be taken to be sure no power cables can get into the high speed fans. However, after installing the GPUs it is rather simple to stow away the cables, perhaps with cable ties to firmly secure them out of the way.

We also like the unique slide out motherboard tray at the back of the server which makes it much easier to service this machine. While it is true that all power and storage connections need to be removed first to accomplish this, it’s something that would be required if the motherboard needed replacement anyway. It does make the task of motherboard replacement easier when accessing the mounting screws and other parts. An additional benefit of using a small motherboard like the EP2C612D16FM is it allows space on the left side for 6x 2.5” hot-swap drive bays which can give the 3U8G-C612 large local storage abilities.

We found the AMD FirePro W9100s to be powerful compute cards and well suited to this server. Given the many different applications that might run on a server like this, some workloads might require different GPUs or even Intel Xeon Phi cards. With its cooling capacity, the 3U8G-C612 is well equipped to handle a range of different GPUs and Phi cards.

The added mezzanine card support for additional dual 1GbE or 10GbE networks is also a nice feature in case higher speed connectivity is required. These are special cards ordered through ASRock.

This seems like it’s designed more for passively-cooled cards like Tesla or Xeon Phi. Did you run into any heat or airflow issues with the Firepros? The GPU fans appear to be fighting the chassis fans in this case.

Bolt this top thingy on if you want to actually make power connections to your cards. Pass. What a terrible design.

You can get 16 Tesla GPUs in 4U with these boxes from Supermicro. http://www.supermicro.com/products/system/1U/1028/SYS-1028GQ-TR.cfm

I’m looking at the first pics and it is a 3U unless you need top power then it can become 4U. Nice to have the option I’d say. Many cards this is only a 3U box. The Supermicro one you show doesn’t even look like it can fit top powered GPUs.

Saying 3x 1U servers gets 12 or a 3U server with 8 cards is not a good comparison. You only need one CPU, set of RAM and server for the 3U. You also need two fewer PDU ports. Power consumption on the 3U is going to be much better because the bigger fans are more efficient than 1U fans and those 1U fans are going to be spinning like crazy to keep up with that much GPU.

Needs more practical benchmarks of real world scientific/engineering code (99.9% of the “big” stuff is Fortran based) and more general software implemented virtually. That is how this type of a system is going to be used in most cases: in a mainframe/supercomputer environment running MCNP, Gaussian, Charmm, etc. or distributing its resources to multiple people using software from companies like Accelrys or Dassault.

I really don’t like the feature that if I mouse over a picture it turns lighter. The whole experience of viewing this page is just blinking.

Contents of this page I like.