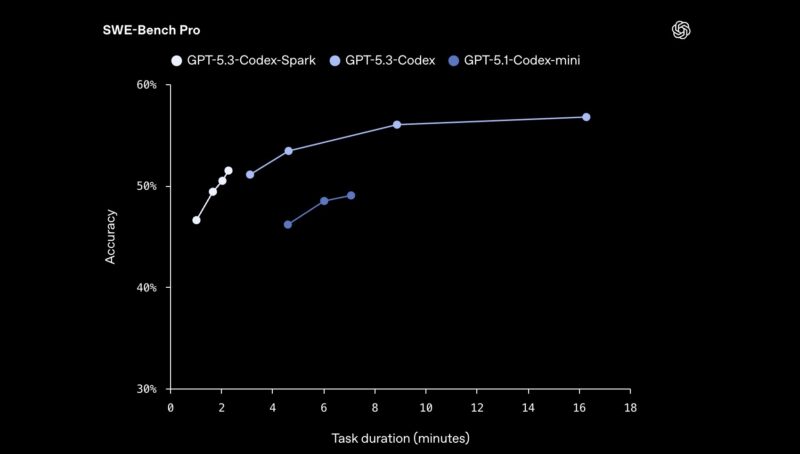

OpenAI announced that it is rolling out GPT-5.3-Codex-Spark as a research preview. OpenAI has released many models, but this coding model is not just another one: it is designed to be extremely fast, reaching 1,000 tokens per second on giant Cerebras chips. This is the first public collaboration between OpenAI and Cerebras, which makes it notable.

OpenAI GPT-5.3-Codex-Spark Now Running at 1K Tokens Per Second on BIG Cerebras Chips

In a quick demo, OpenAI gave the same “build a snake game” task to GPT-5.3-Codex-Spark and to GPT-5.3-Codex at medium reasoning effort. Both completed the task, but the Cerebras-backed Spark finished in 9 seconds, compared with nearly 43 seconds for the non-Spark model. If you want to see the side-by-side video, here is a link. OpenAI also says that Spark delivers higher quality than GPT-5.1-Codex while being much faster.

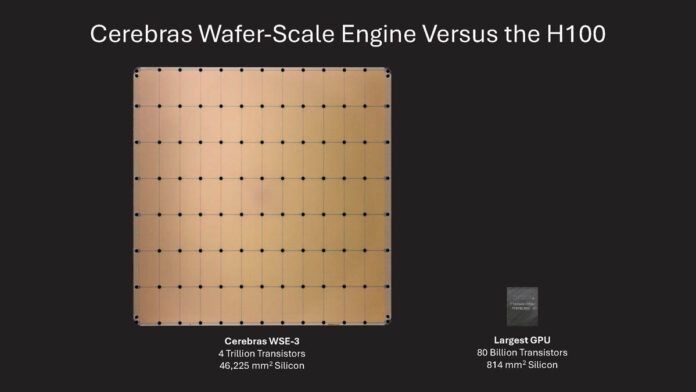

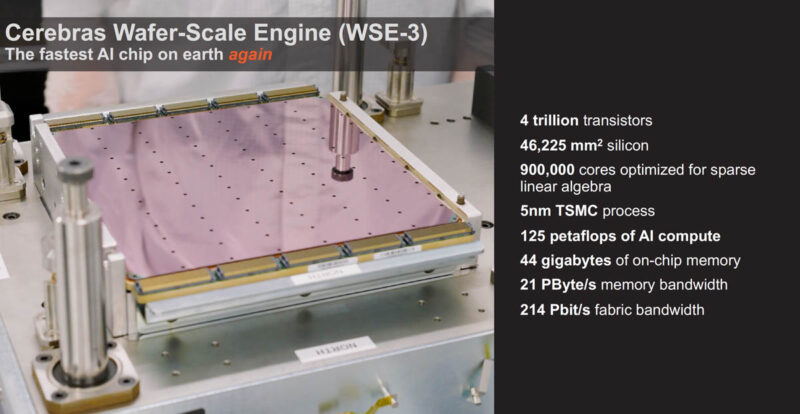

OpenAI says that it is running these models on the Cerebras Wafer-Scale Engine 3 (WSE-3), which we highlighted on STH in 2024. For those who have not been following Cerebras, the company takes the largest square it can from a wafer and then powers and liquid-cools it as a single chip, rather than cutting the wafer into many smaller chips.

If you want to see more of how these chips are cooled, we covered this in our SC22 video.

The idea is that these huge chips enable even faster inference. For agentic AI, faster task completion means that entire workflows finish sooner.

Final Words

This is a cool announcement because Cerebras has been one of the few AI companies that I have been saying has a shot. In 2020, about six years ago, we published Our Interview with Andrew Feldman CEO of Cerebras Systems. Folks who know STH know I am not a fan of doing interviews, but I made an exception for this one. I remember it because I was flying to New Zealand later that evening, and I got to tour the Los Altos lab, which was cooled by partially cutting away the side of the building. Not long after that interview, the world went into pandemic mode, but that visit stuck with me for years. It is great to see the Cerebras team succeed.

For all of us who have been setting up workflows with tools like n8n (see our Using the Dell Pro Max with GB10 to Profit within 12 Months piece) or OpenClaw, the reality is that fast inference will matter more as we ask these systems to do larger tasks. Seeing the “build a snake game” prompt produce a working browser game in 9 seconds made me think of how long the same task took me a quarter of a century ago, when I actually built a (then) Java-based snake game. What is perhaps underestimated is how much time it took just to learn the concepts needed to get to that point. Now, it takes a simple prompt and nine seconds, with no coding. It also makes me think about how fast the agents of the future will turn ideas into reality.

Hopefully we will see more Cerebras inference solutions in the near future, because this is cool.