The roadmap for Intel Xeon’s next major update has had two platform variants, just as the current generation does. Over the past few weeks, under new Data Center Group leadership, that roadmap has been reviewed, and a significant change has been announced. Intel’s next-generation 8-channel “Diamond Rapids”, the successor to today’s popular Granite Rapids-SP (the mainstream Intel Xeon 6700P/ 6500P series of server processors), has been removed from Intel’s roadmap.

Intel Cancels its Mainstream Next-Gen Xeon Server Processors

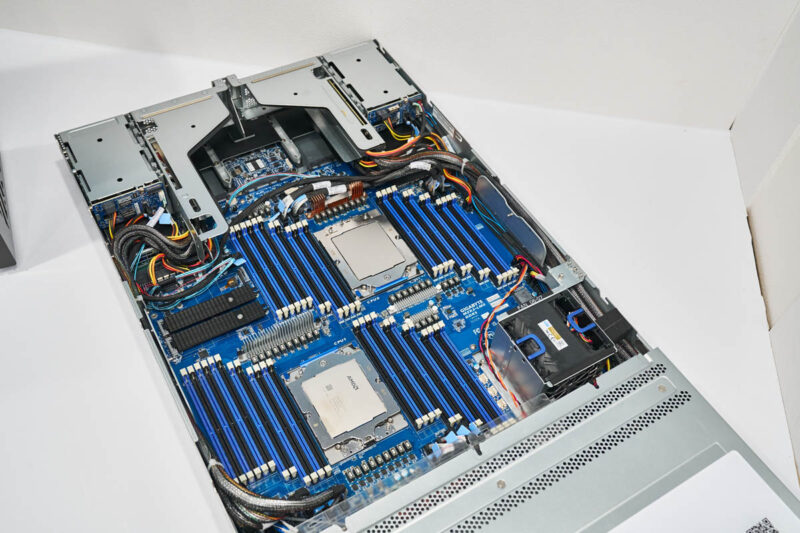

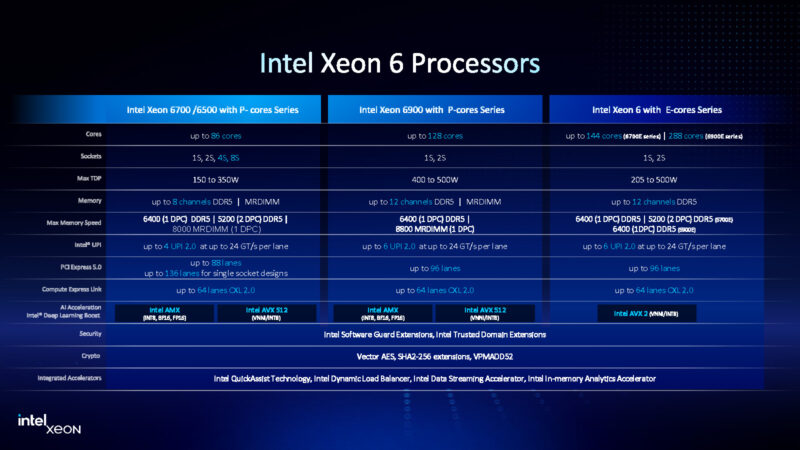

The next generation of high-end server processors is poised to transition from 12 to 16 channels of memory, utilizing faster memory as chip designers integrate more cores and faster I/O with PCIe Gen6. We expect this transition to happen in the second half of 2026 to support future AI cluster build-outs. Both AMD EPYC “Venice” and the Intel Xeon “Diamond Rapids” will have 16-channel memory.

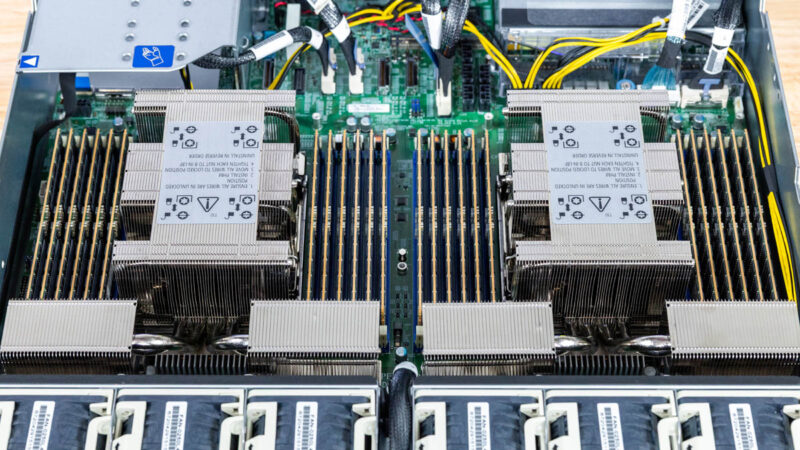

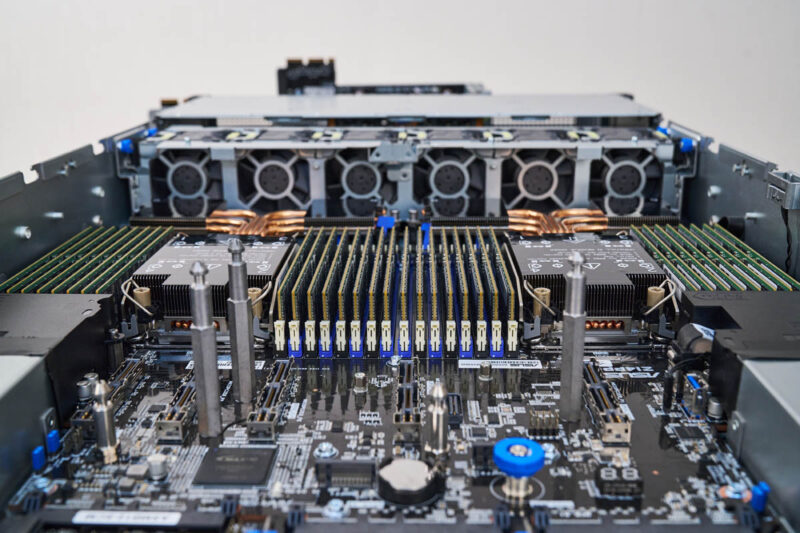

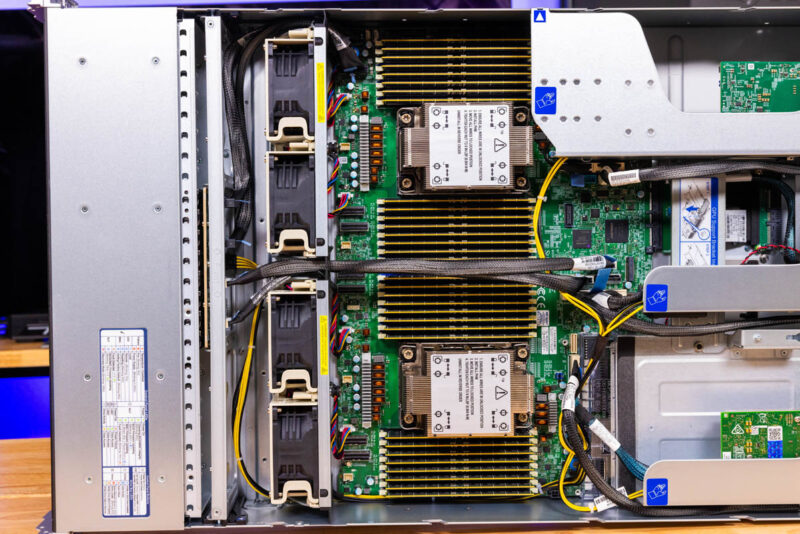

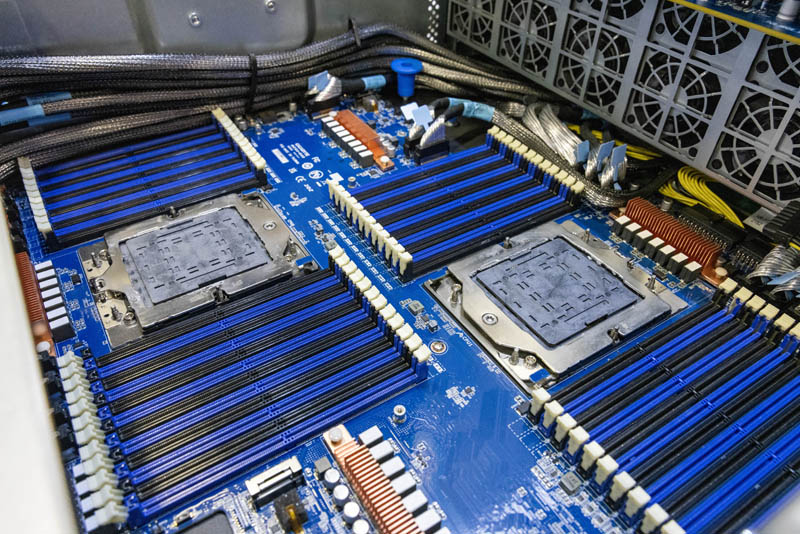

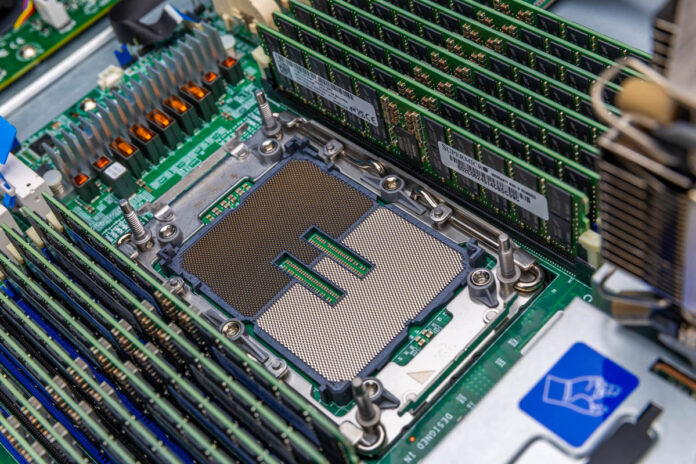

While 16-channel memory may not sound exciting at first, it highlights the challenge of Intel’s dual-platform strategy in the data center. The 12-channel memory parts, like Intel’s Granite Rapids-AP (Intel Xeon 6900P series), the custom-only Sierra Forest-AP, and the upcoming Clearwater Forest-AP, use platforms with 12 memory channels per CPU, or 24 DIMMs in a dual-socket server at one DIMM per channel. In a standard 19″ rack server motherboard, there is quite a bit of space to fit more components.

As a quick aside, this is similar to the configurations of the AMD EPYC 9004/ 9005 systems for Genoa, Genoa-X, Bergamo, and now Turin, which all utilize 12-channel memory. The 12-channel memory design in these chips provides 50% more theoretical memory bandwidth than the previous 8-channel designs, assuming all other factors remain equal. Those factors are almost never equal, but that is the concept.
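To make the 50% figure concrete, here is a minimal sketch of theoretical peak bandwidth per socket. It assumes DDR5-6400 on every design and 8 bytes (64 data bits) per channel per transfer; the data rate is an illustrative assumption held equal across designs, not a specification of any of these platforms.

```python
def peak_bandwidth_gbs(channels: int, mts: int, bus_bytes: int = 8) -> float:
    """Theoretical peak in GB/s: channels x mega-transfers/s x bytes per transfer."""
    return channels * mts * bus_bytes / 1000

SPEED_MTS = 6400  # assumed DDR5 data rate, identical for every design below

for ch in (8, 12, 16):
    print(f"{ch:2d} channels: {peak_bandwidth_gbs(ch, SPEED_MTS):.1f} GB/s per socket")

# Ratios depend only on channel count when speed is held equal.
print(f"12 vs 8:  {peak_bandwidth_gbs(12, SPEED_MTS) / peak_bandwidth_gbs(8, SPEED_MTS):.2f}x")   # 1.50x
print(f"16 vs 12: {peak_bandwidth_gbs(16, SPEED_MTS) / peak_bandwidth_gbs(12, SPEED_MTS):.2f}x")  # 1.33x
```

The same arithmetic shows why the 12-to-16-channel step adds about a third more theoretical bandwidth; real-world results depend on DIMM speed, population, and the factors noted above.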

There are still challenges with the 12-channel designs. For example, you can get more DIMM slots for more memory capacity with an 8-channel platform. Intel has an 8-channel memory design with the Birch Stream-SP platform for the Xeon 6700 series (Granite Rapids-SP and Sierra Forest-SP) that provides a few major benefits.

First, it allows a server to be configured for two DIMMs per channel (2DPC) operation to add more memory. Many workloads require a certain memory capacity. Having more DDR5 DIMM slots means you can fit more DIMMs into a server: two CPUs with eight-channel memory at 2DPC gives 32 DIMMs, or 33% more than the 24 DIMMs that the 12-channel designs can fit.
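The slot counts above follow from one multiplication. This sketch assumes dual-socket servers throughout and also covers the 48-DIMM (12-channel 2DPC) and 16-channel 1DPC cases discussed later in the article.

```python
def dimm_slots(sockets: int, channels: int, dpc: int) -> int:
    """Total DIMM slots = sockets x memory channels per socket x DIMMs per channel."""
    return sockets * channels * dpc

configs = {
    "8-channel, 2DPC": dimm_slots(2, 8, 2),
    "12-channel, 1DPC": dimm_slots(2, 12, 1),
    "12-channel, 2DPC": dimm_slots(2, 12, 2),
    "16-channel, 1DPC": dimm_slots(2, 16, 1),
}
for name, slots in configs.items():
    print(f"{name}: {slots} DIMMs")

extra = configs["8-channel, 2DPC"] / configs["12-channel, 1DPC"] - 1
print(f"8-channel 2DPC vs 12-channel 1DPC: {extra:.0%} more slots")  # 33% more slots
```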

Going to two DIMMs per channel in a 12-channel platform, like this Gigabyte AMD EPYC server, causes unnatural-looking server motherboards.

Fitting 48 DIMMs (or 32) comes with a memory bandwidth penalty, as the memory channels clock down to handle longer trace lengths. The 2DPC 48-DIMM platforms run DDR5 slower, even if only 24 of the 48 DIMM slots are populated.

Still, the Intel Xeon 6700 series is popular. We recently looked at the MLPerf Training v5.1 submissions, and the Intel Xeon 6700P was more popular than the Xeon 6900P. That might shock people since the Xeon 6900P is supposed to be the high-end AI line, but 8-channel designs are popular.

A primary advantage of an 8-channel platform for mainstream servers is that the motherboards can be more cost-effective, and populating the servers is less expensive. If you are looking to purchase 64 cores per socket, you can use a smaller socket and have a lower-cost motherboard. You can also lower your DRAM cost by using a greater number of lower-capacity modules rather than paying price premiums for higher-capacity models. This makes the Xeon 6700P/ 6500P series popular because it is the lower-cost option for those who do not need higher core counts. It actually is a point of competitive differentiation for Intel Xeon over AMD EPYC because Intel has a lower-cost platform.
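One way to see the DRAM cost point is to ask which module size reaches a target capacity when every slot is filled. This is a minimal sketch; the list of module capacities is an assumption about commonly offered DDR5 RDIMM sizes, and it ignores pricing, which varies.

```python
import math
from typing import Optional

# Assumed set of DDR5 RDIMM capacities (GB); availability varies by vendor.
DIMM_SIZES_GB = [16, 24, 32, 48, 64, 96, 128, 256]

def smallest_dimm_for(target_gb: int, slots: int) -> Optional[int]:
    """Smallest module size that reaches target_gb with every slot populated."""
    per_slot = math.ceil(target_gb / slots)
    for size in DIMM_SIZES_GB:
        if size >= per_slot:
            return size
    return None  # target not reachable with the listed module sizes

for slots in (32, 24):  # 8-channel 2DPC vs 12-channel 1DPC, dual socket
    size = smallest_dimm_for(1024, slots)
    print(f"{slots} slots: {size} GB modules, {slots * size} GB installed")
```

For a 1 TB target, 32 slots work with 32 GB modules, while 24 slots need 48 GB modules (1152 GB installed), so the platform with more slots can use smaller, typically cheaper-per-module parts.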

With Oak Stream platforms and Diamond Rapids CPUs, Intel has a better setup than in the current Birch Stream and Granite Rapids era. The reason is that the Oak Stream roadmap had both a 16-channel and an 8-channel variant. Sixteen channels at one DIMM per channel give the same DIMM count, and therefore memory capacity, as an eight-channel 2DPC platform.

A few months ago, Intel’s CEO Lip-Bu Tan made remarks about decisions he was working to change, such as the removal of SMT (Hyper-Threading), and how that made Intel’s upcoming products (like Diamond Rapids) less competitive. Within a few minutes of meeting Intel’s new Data Center Group EVP and GM, Kevork Kechichian, I mentioned that it must be tough for the business to have comments like that out there.

From what I understand, Intel has been analyzing its roadmap over the past few weeks. About a month ago, there was chatter among OEMs that Intel’s next-generation 8-channel platform for Diamond Rapids might end up on the chopping block. More recently, OEMs have been learning that the 8-channel Diamond Rapids platform has fallen off Intel’s roadmap, while the 16-channel Diamond Rapids remained.

We had multiple sources, enough to publish on, so I sent a note to Intel last night, and they quickly sent the following statement:

We have removed Diamond Rapids 8CH from our roadmap. We’re simplifying the Diamond Rapids platform with a focus on 16 Channel processors and extending its benefits down the stack to support a range of unique customers and their use cases. (Source: Intel Spokesperson to STH)

I know there are many rumors in the industry, but this one, like the removal of a Cooper Lake variant from its roadmap and the sale of its server systems business to MiTAC, is one that Intel itself has confirmed directly to us.

Final Words

In many ways, this is the way of the server market. Lower-end server platforms that offered the same number of memory channels as previous generations have gradually been phased out over time. Our “The (Xeon) EN is dead – Long Live EN!” piece from over a decade ago was a classic case study in this, except that the Xeon 6700P/ 6500P series has been quite popular. Perhaps the real takeaway is that in servers, the smaller sockets eventually disappear as the world adjusts to higher server capacities.

For those who want a deeper dive into market dynamics, given this change, you can check our Substack for that and why I think it might be a net positive signal.

I suppose my question is what’s the cost as a customer of buying a 16 channel platform but only using 8 channels and just pretending it’s the cut 8 channel variant. I’m not going to like the answer with the current memory prices, am I!

This was a smart move on Intel’s part. Tall Form-Factor MRDIMMs provide the same DRAM capacity as 2 DIMMs per channel, so there is no need to choose between expensive 3D stacked (3DS) DIMMs or less expensive 2 DIMMs per channel. Now that AMD’s server chips have become competitive, OEMs don’t have the resources to make systems for AMD Venice and Intel Diamond Rapids with both 8 and 16 channels per processor. Since Intel is adding NVLink-C2C to Xeon, there is also the NVLink-C2C variation for OEMs to support. I think NVLink-C2C on Xeon will be awesome. I hope there is an RTX PRO 6000 GPU with NVLink-C2C.

@bsmith It would not make sense to only use 8 DRAM channels of a 16 channel processor. Just use DIMMs with half the capacity and fill all 16 channels to double the DRAM bandwidth.
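The reply’s arithmetic can be sketched per socket at one DIMM per channel. The 64 GB and 32 GB module sizes and the DDR5-6400 speed are hypothetical values chosen for illustration; the sketch covers capacity and bandwidth only, not the module prices the original question asked about.

```python
def socket_config(channels: int, dimm_gb: int, mts: int = 6400) -> tuple[int, float]:
    """Return (capacity in GB, theoretical peak GB/s) for one socket at 1DPC."""
    capacity = channels * dimm_gb
    bandwidth = channels * mts * 8 / 1000  # 8 bytes per transfer per DDR5 channel
    return capacity, bandwidth

half = socket_config(8, 64)   # only 8 of 16 channels populated, hypothetical 64 GB modules
full = socket_config(16, 32)  # all 16 channels populated, hypothetical 32 GB modules
print(half, full)             # same capacity, twice the theoretical bandwidth
```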

It’s also the trend from dual socket to single socket. 1P brings down the cost, reduces complexity & simplifies NUMA. There’s enough PCIe/memory on modern chips. The Intel models offered by major OEMs are still mainly 2P, perhaps 1P Intel (16 channel memory) will be more available next generation? The “P” SKU AMD EPYCs have been optimum for our use case.

@Y0s There are single processor socket (1P) Granite Rapids and Turin systems, so I’m sure 1P will remain available for the next generation. I’ve heard people say 2P today is like the 4P of times past. Intel charges a large premium for 4P compared to 2P on Granite Rapids. For example, the recommended customer prices for the 6788P (4P and 8P capable) and the 6787P (2P capable) are $19K and $10.4K, respectively. These processors have the same number of cores (86), the same clock speeds, and the same number of accelerators. Another example is the 6768P (4P and 8P capable) for $16K while the 6767P (2P capable) with otherwise identical specs is $9595.
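Using only the recommended customer prices quoted in this comment, the 4P/8P premium over the otherwise identical 2P part works out as follows.

```python
def premium(price_4p: int, price_2p: int) -> float:
    """Fractional price premium of the 4P/8P-capable SKU over the 2P SKU."""
    return price_4p / price_2p - 1

pairs = {
    "6788P vs 6787P": (19_000, 10_400),
    "6768P vs 6767P": (16_000, 9_595),
}
for name, (price_4p, price_2p) in pairs.items():
    print(f"{name}: {premium(price_4p, price_2p):.0%} premium")  # 83% and 67%
```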

I would rather have a 4P system than two 2P systems, but given the large price premium for 4P, it makes more sense to use 2P in my case. Three 2P systems are less expensive than one 4P system. The large price premium for 4P and 8P CPUs drives applications to GPUs because there is no price premium for using more GPUs in a single node. Supermicro has a nice 2P system for Emerald Rapids with closed-loop liquid cooling built in called SYS-751GE-TNRT-NV1. I haven’t found any company that makes a similar version for Granite Rapids.
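A CPU-only comparison, using the 6787P and 6788P prices from the previous comment, shows part of why three 2P systems can come out ahead. This sketch ignores chassis, motherboard, memory, and everything else in the bill of materials.

```python
def cpu_cost(systems: int, sockets_per_system: int, price_per_cpu: int) -> int:
    """Total CPU list-price cost for a fleet of identical systems."""
    return systems * sockets_per_system * price_per_cpu

three_2p = cpu_cost(3, 2, 10_400)  # six 6787P CPUs, six sockets total
one_4p = cpu_cost(1, 4, 19_000)    # four 6788P CPUs, four sockets total
print(three_2p, one_4p)            # 62400 76000
```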

This is surprising, as the lower 8-channel systems were also used in some workstation and embedded systems. Furthermore, the difference between an 8-channel and a 12-channel Xeon today is simply a single chiplet, as each CPU chiplet has four channels of memory natively. The 8-channel models use two CPU+memory chiplets and two IO dies, while the 12-channel models are composed of three CPU+memory chiplets and two IO dies. Conceptually, the 16-channel models coming a year from now would follow the same pattern and be composed of four CPU+memory chiplets. Intel could have gone further by increasing the number of CPU+memory chiplets in the package while only permitting the socket maximum of memory channels. It is a pretty straightforward way to scale.
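The scaling pattern described in this comment reduces to a division, assuming (as the comment states) four memory channels per CPU chiplet and two IO dies per package regardless of channel count.

```python
CHANNELS_PER_CHIPLET = 4  # per the comment: each CPU chiplet natively drives four channels
IO_DIES = 2               # per the comment: two IO dies in each package

def compute_chiplets(channels: int) -> int:
    """CPU+memory chiplets needed for a package exposing `channels` memory channels."""
    if channels % CHANNELS_PER_CHIPLET:
        raise ValueError("channel count must be a multiple of the per-chiplet count")
    return channels // CHANNELS_PER_CHIPLET

for ch in (8, 12, 16):
    print(f"{ch} channels -> {compute_chiplets(ch)} CPU chiplets + {IO_DIES} IO dies")
```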

Going to 16 channels reduces the chance of 2 DIMM per channel systems. It is not impossible to build such a system, but the reduction in memory clock and physical space would make it incredibly premium and require proprietary motherboards. I do think that Asrock Rack has the right idea by releasing single-socket boards that can be linked together via MCIO cables to increase socket count. That also makes scaling up to 4 and 8 sockets straightforward in terms of designs (but ignore the rat’s nest of internal cables to get there). An 8-socket Xeon with 32 DIMMs per socket should support upward of 128 TB of physical memory. Even with 1 DIMM per channel, these single-socket boards are going to be rather cramped for space. The downside to Asrock Rack’s approach is that the PCIe slots are also on a separate board, so for a more traditional system, you’ll have to buy more to get a complete experience.
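As a sanity check on the 128 TB figure: 8 sockets with 32 DIMMs each (16 channels at 2DPC) is 256 DIMMs, so 128 TB implies 512 GB per module. This sketch assumes binary units (1 TB = 1024 GB); whether 512 GB modules are available for this platform is an assumption, not something stated in the comment.

```python
def total_memory_tb(sockets: int, dimms_per_socket: int, dimm_gb: int) -> float:
    """Total installed memory in TB, using 1 TB = 1024 GB."""
    return sockets * dimms_per_socket * dimm_gb / 1024

print(total_memory_tb(8, 32, 256))  # 64.0 with 256 GB modules
print(total_memory_tb(8, 32, 512))  # 128.0 with hypothetical 512 GB modules
```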

The other factor for all of these is CXL support, which can provide even more addressable memory to a system. I’m surprised that we have not yet seen any vendor provide a motherboard with some CXL memory expanders integrated on it. On a setup like the Asrock Rack example above, the CXL memory expanders could sit comfortably on their own board and be cabled to the CPU sockets. It’d be a win for the company that made it, as it would be interchangeable between Xeon and Epyc platforms if that vendor offered both platforms in a cable-centric design.

@Kevin G What may have happened is that the yield on Intel’s 18A process is low enough that Intel is getting a significant number of Diamond Rapids CPU chiplets with only a small number of working CPU cores. If Intel were getting high yield on 18A, the only ways to sell a processor with a small number of CPU cores in a processor socket with 4 CPU chiplets would be to disable working CPU cores or sell a processor socket with 2 CPU chiplets. Obviously, Intel had better figure out how to improve 18A yield for cost reasons.

I’m glad Diamond Rapids will only be available with 16 DRAM channels because I would rather have a 2P system with each processor socket having 16 DRAM channels. When there are fewer processor variations, it is more likely that someone will sell the configuration I need. I need 14 NVMe SSDs, such as the Micron 9650 series. Hopefully, someone will make that with closed-loop liquid cooling built-in.

@Kevin G I didn’t explain my last comment very well. What I meant is 18A yield is lower than Intel initially hoped so 4 CPU chiplets, rather than 2 CPU chiplets, are needed to get enough working CPU cores for the lower-end of the Diamond Rapids product line. Here is how Intel CFO David Zinsner described 18A yields during Intel’s Q3 2025 earnings call:
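The yield argument can be illustrated with a deliberately simple expected-value model. Every number here is hypothetical: the 32 cores per chiplet, the 64-core target, and the per-core yields are illustrative only and are not Intel figures. Real binning depends on the full distribution of defects per chiplet, not just the mean.

```python
import math

def chiplets_needed(target_cores: int, cores_per_chiplet: int, core_yield: float) -> int:
    """Chiplets needed so the *expected* number of working cores meets target_cores."""
    expected_per_chiplet = cores_per_chiplet * core_yield
    return math.ceil(target_cores / expected_per_chiplet)

# Hypothetical: 32-core chiplets, 64-core low-end SKU, varying fraction of working cores.
for y in (1.0, 0.9, 0.6):
    print(f"core yield {y:.0%}: {chiplets_needed(64, 32, y)} chiplets")  # 2, 3, 4
```

Under these made-up numbers, the lower the fraction of working cores, the more chiplets a package needs to reach the same core count, which is the shape of the argument in the comment.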

“The yields are adequate to address the supply, but they are not where we need them to be in order to drive the appropriate level of margins. And by the end of next year, we will probably be in that space. And certainly, the year after that, I think they will be in what would be kind of an industry acceptable level on the yields.”

Intel will be in big trouble unless yield on 18A-P is good by the time Coral Rapids is in production, presumably in H2 2027. There could be two versions of the Coral Rapids CPU chiplet, one with 4 DRAM channels per CPU chiplet and one with 8 DRAM channels per CPU chiplet. That would allow having 2 or 4 CPU chiplets in a processor package with both options supporting 16 DRAM channels.

An alternative is for Coral Rapids to have a maximum of 16 CPU chiplets per processor package. In this case, each CPU chiplet would be connected to one DRAM channel. There could also be a version of the Coral Rapids CPU chiplet that connects to 2 DRAM channels so 8 CPU chiplets in a processor package would still support 16 DRAM channels.

Another idea is to have a single type of Coral Rapids CPU chiplet that would have 2 DRAM channels but only use one of the 2 DRAM channels when packaging 16 CPU chiplets in a processor package. Both DRAM channels would be used when packaging 8 CPU chiplets in a processor package and this same CPU chiplet could also be sold, one per package, as a desktop processor. The advantage of this idea is that the CPU chiplet would still be usable if there is a defect in the hardware for one of the 2 DRAM channels. Coral Rapids will use DDR6, which has 4 subchannels per channel, unlike DDR5 which has 2 subchannels per channel.
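The Coral Rapids packaging options floated in the last three comments all land on 16 channels. This sketch enumerates them and counts subchannels using the comment’s figures (2 per DDR5 channel, 4 per DDR6 channel). The option list is the commenter’s speculation, not an Intel roadmap; the (16, 1) case models a 2-channel chiplet with one channel left unused.

```python
# (chiplets per package, DRAM channels used per chiplet), per the comments above.
OPTIONS = [(2, 8), (4, 4), (8, 2), (16, 1)]

# Subchannels per channel, as stated in the comment.
SUBCHANNELS_PER_CHANNEL = {"DDR5": 2, "DDR6": 4}

def package_channels(chiplets: int, channels_per_chiplet: int) -> int:
    """DRAM channels exposed by a package built from identical chiplets."""
    return chiplets * channels_per_chiplet

def package_subchannels(channels: int, generation: str) -> int:
    """Total independent subchannels for a given memory generation."""
    return channels * SUBCHANNELS_PER_CHANNEL[generation]

for chiplets, per_chiplet in OPTIONS:
    ch = package_channels(chiplets, per_chiplet)
    print(f"{chiplets:2d} chiplets x {per_chiplet} = {ch} channels, "
          f"{package_subchannels(ch, 'DDR6')} DDR6 subchannels")
```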

The biggest concern here is not the number of memory channels; it’s SMT support, or the lack of it. Most enterprise software is licensed by core now. No enterprise customer wants to pay double the software license for the same number of threads. It looks like Intel wants to continue Diamond Rapids-AP to compete in the hyperscaler market (ODM-Direct) and serve some unique cases by extending it down the stack.

That would mean current Xeon 6 OEM platforms will need to carry the torch for enterprise customers till at least 2028 or even beyond?

@Simon C Simultaneous multithreading (SMT), which Intel calls hyperthreading, provides roughly 20% to 30% more performance per physical core. Enterprise customers would need to buy roughly 20% to 30% more software licenses for Diamond Rapids compared to Venice, not twice as many software licenses, due to the lack of SMT in Diamond Rapids. Four Venice servers with SMT might have the same performance as five Diamond Rapids servers without SMT, assuming both types of servers have the same number of physical cores and same performance per physical core.
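The four-versus-five server example can be generalized with a short sketch. It uses the comment’s 20% to 30% SMT uplift range and its simplifying assumption that both server types have the same physical cores and per-core performance, so per-core license counts scale with the number of servers.

```python
import math

def servers_without_smt(servers_with_smt: int, smt_uplift: float) -> int:
    """Servers without SMT needed to match the throughput of servers_with_smt."""
    return math.ceil(servers_with_smt * (1 + smt_uplift))

for uplift in (0.20, 0.25, 0.30):
    n = servers_without_smt(4, uplift)
    print(f"SMT uplift {uplift:.0%}: 4 Venice servers ~ {n} Diamond Rapids servers")
```

Rounding up to whole servers is why a 30% uplift in this sketch lands on six servers rather than 5.2.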

Apart from SMT, Intel has some catching up to do for Diamond Rapids to match Venice’s performance per physical core. According to SPEC CPU2017, Turin has 24% faster single-thread integer and 45% faster single-thread floating-point performance than Xeon 6 Granite Rapids. One reason for these SPEC CPU2017 results is that Granite Rapids has roughly 3x the L3 latency of Turin (33 ns vs. 11 ns), even in sub-NUMA clustering mode (SNC3), which reduces Xeon L3 latency.

Intel will reintroduce SMT with Coral Rapids, which should arrive in H2 2027. We’ll have to see how much of a performance difference there is between AMD and Intel cores at that time. One of the main determinants of CPU performance is the performance of the cache hierarchy. L3 cache performance has been a weak spot for recent Xeons. It will be interesting to see how future chips by AMD and Intel compare on specs such as L3 latency and L3 bandwidth. A Chips and Cheese article titled “A Look into Intel Xeon 6’s Memory Subsystem” shows graphs of cache performance that compare Zen 5 Turin and Xeon 6 Granite Rapids.

OpenAI recently claimed they will have human-level automated AI researchers (essentially AGI) by March 2028. If anything close to that really happens, AI inference performance per dollar is going to be the main thing most people care about for both CPUs and GPUs. In other words, what will matter most could be low-precision tensor performance, bandwidth from weight storage, capacity of weight storage, power consumption and cost.