A few weeks ago, we got to hear about Enfabrica’s Elastic Memory Fabric System, or EMFASYS. If you did not get the acronym, try saying it, and it will make more sense. This is a super cool technology that combines Enfabrica’s ACF-S 3.2Tbps SuperNIC with CXL memory for AI clusters.

Enfabrica Elastic Memory Fabric System aka EMFASYS Launched

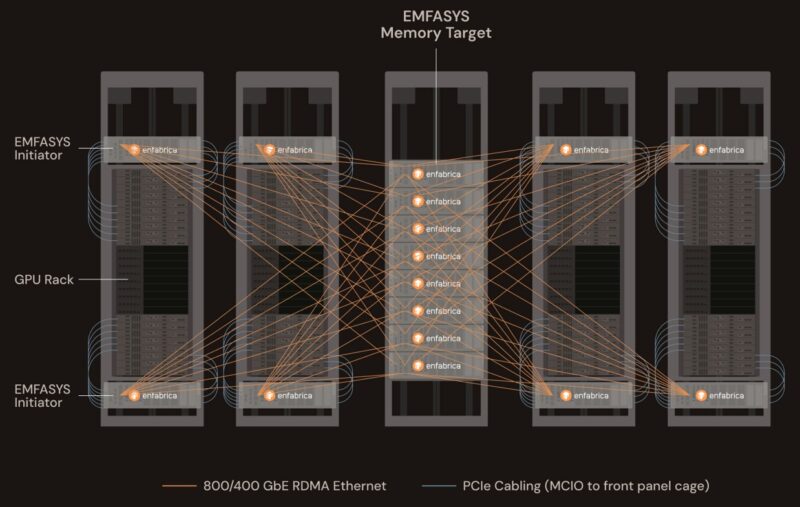

To understand EMFASYS, here is the mental model: imagine putting DDR5 memory behind CXL controllers, then connecting those controllers to RDMA NICs. Today, you might build that as a box with CPUs, multiple NVIDIA ConnectX or Broadcom NICs, and perhaps some CXL/PCIe switches in the mix. Enfabrica is greatly simplifying this for AI clusters.

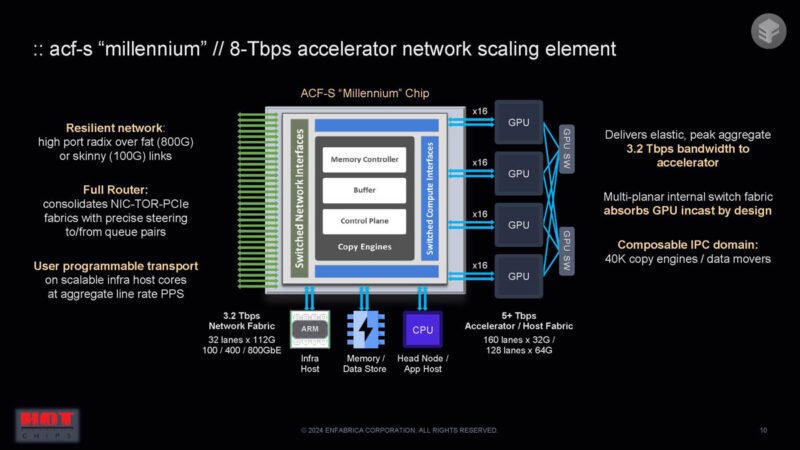

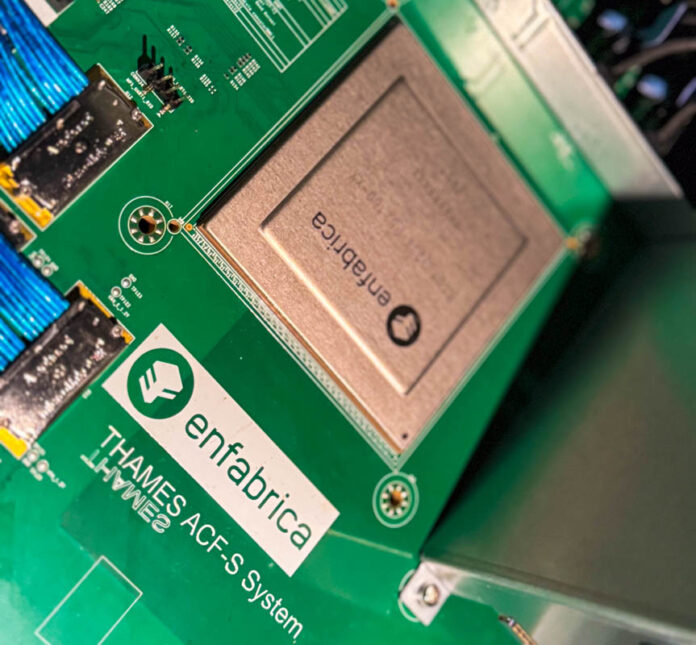

Using the Enfabrica ACF-S, the company can do all of this on a single device. One side of the chip has 3.2Tbps of network bandwidth with some user (think hyperscaler) programmability. The other side of the chip works like a PCIe switch. Those two sides are joined by a huge amount of bandwidth inside one device, rather than spread across multiple chips on a PCB. In EMFASYS, the NIC side handles the RDMA networking and the switch side handles the CXL connections; the company says up to 144 lanes are available for CXL. Instead of copying and moving data through multiple chips on a PCB, Enfabrica has one SuperNIC that can present memory as an RDMA target to AI applications.
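For a rough sense of scale, here is a small sketch that converts the two headline figures into bytes per second. It assumes (the article does not say) that the CXL lanes run at PCIe 5.0 rates of 32 GT/s with 128b/130b encoding, and it ignores CXL protocol overhead, so the CXL number is an upper bound rather than a measured figure. The function names are illustrative only.

```python
# Back-of-envelope bandwidth math for the figures quoted above.
# Assumptions (not from the article): CXL lanes run at PCIe 5.0 rates
# (32 GT/s per lane, 128b/130b encoding); protocol overhead is ignored.

NETWORK_TBPS = 3.2      # ACF-S network bandwidth, from the article
CXL_LANES = 144         # "up to 144 lanes for CXL", from the article
GT_PER_LANE = 32        # assumed PCIe 5.0 signaling rate (GT/s)
ENCODING = 128 / 130    # PCIe 5.0 line-encoding efficiency


def network_gbytes_per_s(tbps: float) -> float:
    """Convert an aggregate Tb/s figure to GB/s (8 bits per byte)."""
    return tbps * 1000 / 8


def cxl_gbytes_per_s(lanes: int, gt: float = GT_PER_LANE,
                     enc: float = ENCODING) -> float:
    """Per-direction CXL bandwidth in GB/s after line encoding only."""
    return lanes * gt * enc / 8


def port_breakouts(tbps: float, speeds_gbe=(100, 400, 800)) -> dict:
    """How many Ethernet ports of each speed fit in the aggregate bandwidth."""
    total_gbps = round(tbps * 1000)
    return {speed: total_gbps // speed for speed in speeds_gbe}


if __name__ == "__main__":
    print(f"Network: {network_gbytes_per_s(NETWORK_TBPS):.0f} GB/s")
    print(f"CXL (per direction): {cxl_gbytes_per_s(CXL_LANES):.0f} GB/s")
    print(port_breakouts(NETWORK_TBPS))
```

Under those assumptions, the network side works out to about 400 GB/s and the CXL side to roughly 567 GB/s per direction, and 3.2Tbps divides evenly into 32x100GbE, 8x400GbE, or 4x800GbE worth of ports.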

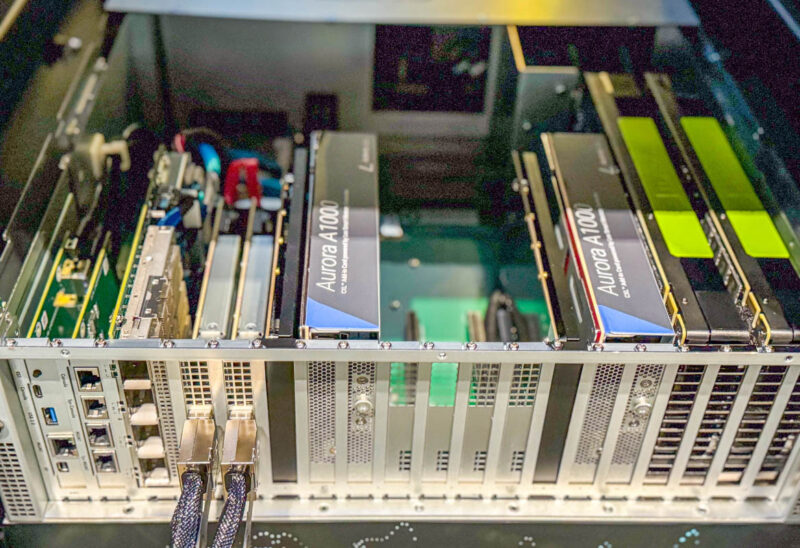

We have seen some early versions of this previously, such as this example at OCP Summit 2024, where we can see the Astera Labs Leo A1000 CXL memory devices.

EMFASYS is interesting because memory is such a challenge with AI. As a result, AI chip makers are building accelerators with more HBM sites. HBM is great, but it is limited in capacity. Enfabrica is effectively providing a solution for those who want to cache data in memory but need to scale to far more memory than HBM alone provides.
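To make the caching use case a bit more concrete, here is a minimal sketch, not Enfabrica's software: a two-tier LRU cache where a small fast tier (standing in for HBM) spills its least-recently-used entries to a larger pooled tier (standing in for memory reached over a fabric like EMFASYS). The class and method names are hypothetical, capacities count entries rather than bytes, and a real system would move data over RDMA rather than between Python dictionaries.

```python
from collections import OrderedDict


class TieredCache:
    """Hypothetical two-tier LRU cache.

    `fast` models a small, fast tier (think HBM); `pool` models a larger,
    slower tier (think fabric-attached DRAM). Capacities are entry counts.
    """

    def __init__(self, fast_capacity: int, pool_capacity: int):
        self.fast_capacity = fast_capacity
        self.pool_capacity = pool_capacity
        self.fast: OrderedDict = OrderedDict()
        self.pool: OrderedDict = OrderedDict()

    def put(self, key, value) -> None:
        # New or updated entries always land in the fast tier.
        self.pool.pop(key, None)
        self.fast[key] = value
        self.fast.move_to_end(key)
        self._spill()

    def get(self, key):
        if key in self.fast:
            self.fast.move_to_end(key)  # refresh recency
            return self.fast[key]
        if key in self.pool:
            # Promote the entry from the pool back into the fast tier.
            value = self.pool.pop(key)
            self.put(key, value)
            return value
        return None  # miss in both tiers: the caller must recompute

    def _spill(self) -> None:
        # Demote least-recently-used fast entries into the pool...
        while len(self.fast) > self.fast_capacity:
            old_key, old_value = self.fast.popitem(last=False)
            self.pool[old_key] = old_value
        # ...and drop the oldest pool entries once the pool is full.
        while len(self.pool) > self.pool_capacity:
            self.pool.popitem(last=False)


if __name__ == "__main__":
    cache = TieredCache(fast_capacity=2, pool_capacity=4)
    for i in range(5):
        cache.put(i, f"kv{i}")
    print(list(cache.fast), list(cache.pool))
    print(cache.get(0))
```

The point of the larger tier is that an entry pushed out of the fast tier is demoted rather than discarded, so a later access is a slower fetch instead of a full recompute.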

Final Words

This is a neat solution. We are going to cover Enfabrica a bit more over the next few weeks. When I first saw the ACF-S, I immediately understood why something like this is useful. At first, I thought of it in terms of servers similar to the NVIDIA MGX PCIe Switch Board with ConnectX-8 for 8x PCIe GPU Servers. What Enfabrica has is a larger topology with a more substantial chip that allows it to do things like CXL memory expansion over RDMA.

Seeing this report not long after the “Intel to get out of NICs” story makes me curious. Is this a situation where a relatively teeny startup actually can just throw together a 32x100GbE (with options to aggregate to 400 or 800GbE) RDMA NIC, along with the PCIe/CXL switch and the memory controller, when someone like Intel can’t? Or are NICs the sort of thing (like memory controllers) that you can license as IP blocks from Cadence or a similar outfit, if you have a plan that requires high-speed networking but don’t have the resources to slug it out with Nvidia and Broadcom on NICs when the interesting bit of your product is elsewhere?