This article builds on last week’s piece on how to set up an Infiniband link using an Ubuntu 12.04 machine. That guide used two very low cost Mellanox MHEA28-XTC controllers, which cost less than $50 each used. Today we are looking at the performance of that setup. One of the big draws of Infiniband is that it is a very low latency interface that can also handle massive amounts of bandwidth. The newest generation parts put out 56Gbps of throughput on a single port. The Mellanox MHEA28-XTC is a dual-port 10Gbps part, so not exactly state of the art. On the other hand, while 10 gigabit Ethernet carries a price tag of around $175 per port even on the used market, these cards offer 10Gbps performance for $25 per port or less. There are clear limitations with this Infiniband approach, but it is much less expensive than alternatives using 10GbE networking, and much faster than gigabit networking for about the same price. Let’s take a look at what this setup can do from a performance standpoint.

Test Configuration

The Mellanox MHEA28-XTC cards I use for this “how to” are dual-port 10Gbps MemFree Infiniband host channel adapters (HCAs) with a PCIe x8 interface. On both the target and the initiator, the cards sit in slots that are electrically x8. The steps are fairly generic and should work with at least other Mellanox HCAs. The MHEA28-XTC cards are available very inexpensively (see this eBay search).

Target:

- CPU: Intel Core i7-930

- Motherboard: Asus P6X58D-E

- Memory: 24GB (6x4GB) G.Skill Sniper DDR3 1600

- SAS HBA: IBM ServeRAID M1015 with IT firmware

- Drives: Boot: Intel SSD 320 80GB, Target: 2x Crucial C300 64GB

- Infiniband HCA: Mellanox MHEA28-XTC

Initiator:

- CPU: Intel Core i7-2600K

- Motherboard: Asus P67 Sabertooth

- Memory: 16GB (4x4GB) G.Skill Ripjaws DDR3 1600

- Drive: Crucial M4 256GB

- Infiniband HCA: Mellanox MHEA28-XTC

Testing Infiniband

For testing, I used a Windows computer as the initiator with the OFED drivers, which can be found here. During the install, make sure to select the SRP option. If you followed the previous guide, the target should already be set up, and after rebooting the Windows machine the exported drives will show up as unpartitioned disks in Computer Management.

10Gbps Infiniband has a theoretical maximum throughput of 8Gbps because of signaling overhead: the link uses 8b/10b encoding, so only 8 of every 10 bits on the wire carry data. This works out to about 1GB/s usable for data. I used three benchmark programs: CrystalDiskMark, Anvil’s Storage Utilities, and ATTO Disk Benchmark.
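The arithmetic behind that 1GB/s figure can be sketched in a few lines of Python. This is only a back-of-the-envelope calculation, assuming the overhead in question is the 8b/10b line encoding; the function name `usable_bandwidth` is my own and it ignores protocol overhead above the link layer.

```python
def usable_bandwidth(signal_gbps, payload_bits=8, line_bits=10):
    """Return (data rate in Gbps, data rate in GB/s) for an encoded link.

    Assumes 8b/10b encoding by default: 8 payload bits per 10 line bits.
    Higher-level protocol overhead (headers, SRP, etc.) is NOT modeled.
    """
    data_gbps = signal_gbps * payload_bits / line_bits
    data_gbytes_per_s = data_gbps / 8  # 8 bits per byte
    return data_gbps, data_gbytes_per_s


if __name__ == "__main__":
    gbps, gbytes = usable_bandwidth(10.0)
    print(f"10Gbps signaling -> {gbps:.1f}Gbps data -> {gbytes:.2f}GB/s")
```

Real-world results will land somewhat below this ceiling, which is why the benchmarks that follow are best read as a percentage of roughly 1GB/s.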

RAM Disk Results

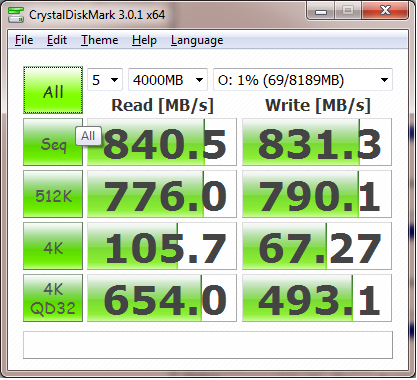

Depending on the benchmark, I achieved 800MB/s to 980MB/s sequential reads when testing the tmpfs vDisk. Anvil also reported a lowest response time of just over 40µs with 4k reads. The CrystalDiskMark results are well in excess of what we would see with gigabit Ethernet.

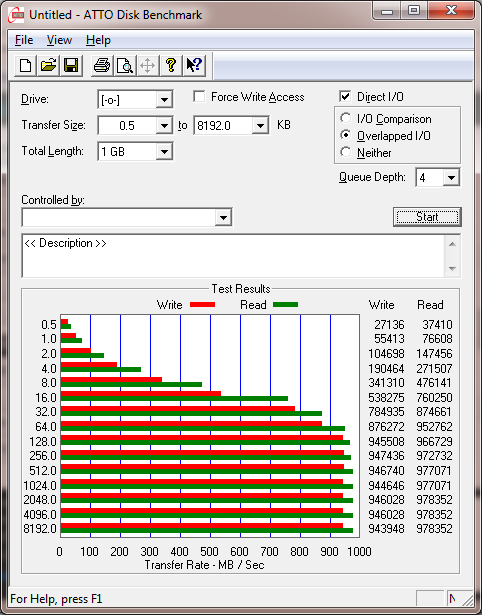

ATTO’s benchmark is a good “best case scenario” view of network storage performance. It is also well known for being extremely friendly to compressible data, which is how it gained popularity with LSI SandForce solid state drives. Looking at the ATTO results, one can see that nearly 1GB/s was achieved on both reads and writes.

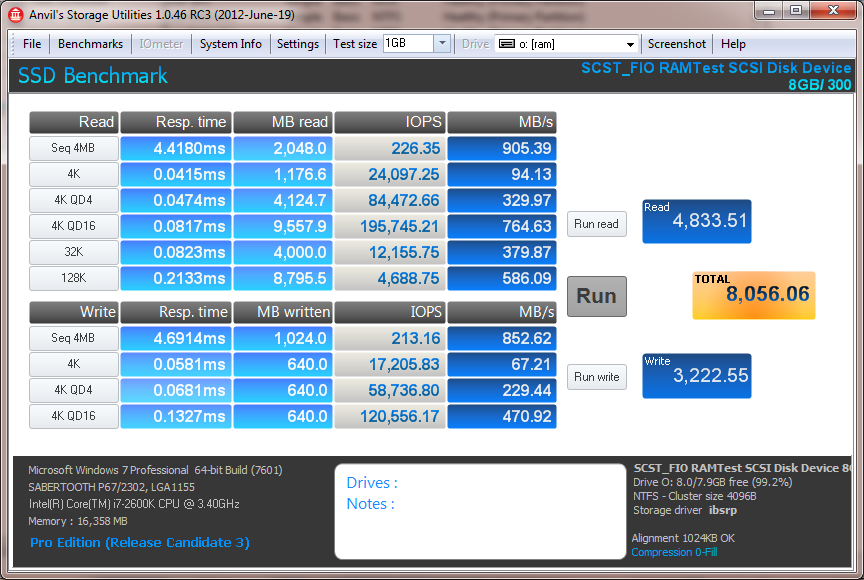

Anvil’s Storage Utilities gives a fairly comprehensive view of performance. Again, we see very low response times with very high throughput. A benefit of the RAM disk is that data could be fed through the Infiniband network with lower latency than we saw with the recently reviewed Samsung 830 SSD directly attached to an Intel 6.0Gbps SATA III port.

Overall, using the RAM disk has shown that there is a lot of performance available over even a very low cost Infiniband setup. Let’s now take a look at results using real solid state drives.

RAID 0 SSD Array Results

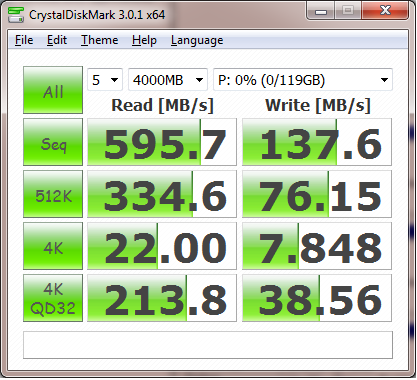

With the mdadm SSD RAID 0 array, sequential reads ranged from 590MB/s to 720MB/s, with a lowest response time of 180µs on QD4 4k reads. CrystalDiskMark on the SSD array shows performance lower than the RAM disk (of course) but also much higher than anything we could achieve on gigabit Ethernet.
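To put "much higher than gigabit Ethernet" into numbers, here is a rough sketch that compares the sequential read range above against the raw gigabit Ethernet line rate of 125MB/s. The helper name `speedup_over_gige` is hypothetical, and the comparison assumes a gigabit link running at its theoretical ceiling, which real file transfers rarely reach.

```python
# Raw gigabit Ethernet ceiling: 1000 Mbps / 8 bits per byte = 125 MB/s.
# Actual throughput is lower once protocol overhead is included.
GIGE_MB_PER_S = 1000 / 8


def speedup_over_gige(mb_per_s):
    """Return how many times faster a rate is than raw gigabit Ethernet."""
    return mb_per_s / GIGE_MB_PER_S


if __name__ == "__main__":
    # Sequential read range reported for the RAID 0 SSD array.
    for label, rate in [("RAID 0 low", 590), ("RAID 0 high", 720)]:
        print(f"{label}: {rate}MB/s is {speedup_over_gige(rate):.1f}x gigabit Ethernet")
```

Even the slowest RAID 0 result is several times what a single gigabit link could carry under ideal conditions.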

ATTO’s benchmark again is drive limited but shows we are achieving serious speed.

Here are the Anvil’s Storage Utilities numbers:

Overall, performance is far better than we would normally see over gigabit Ethernet and about what we would expect from 10GbE, but at a lower cost.

Final Thoughts

I am pleased with the results from my initial testing. Maximum throughput is close to the theoretical maximum of 10Gbps Infiniband, and response times are very low. For most home applications, SRP and iSCSI can be limiting because iSCSI does not handle multiple R/W connections well. I will continue to look at other solutions, including NFS over RDMA, NFS and SMB with IPoIB, and SMB Direct (SMB2.2 over RDMA). These options allow much more flexibility and will hopefully stay efficient over Infiniband. This is a great result for the sub-$50 Mellanox MHEA28-XTC cards.

Max, nice writeup.

Please explain: how did you test across the IB network?

Grab a second cable and bond those point-to-point connections in OpenSM for double the bandwidth… 🙂

I am not aware of any way to bond RDMA channels besides SMB3, which, from my understanding, works at a higher layer in the stack. If you have seen a discussion on how to do it, please let me know. Looking at the OpenSM man page, it seems the LASH routing protocol (not the default) could allow both links to be used, but not in a double-bandwidth sense. I have not tried IPoIB yet, but it would still have the same problem seen with regular Ethernet link aggregation: the channel is double the width, but a single connection can only use half of a bonded pair.

The supply of cheap Mellanox cards on eBay seems to have dried up. Can anyone recommend somewhere to buy these cheaply?

Give it a few days. These things usually come available with new stock every week or two.

With SRP can you share with a second computer using the other port?