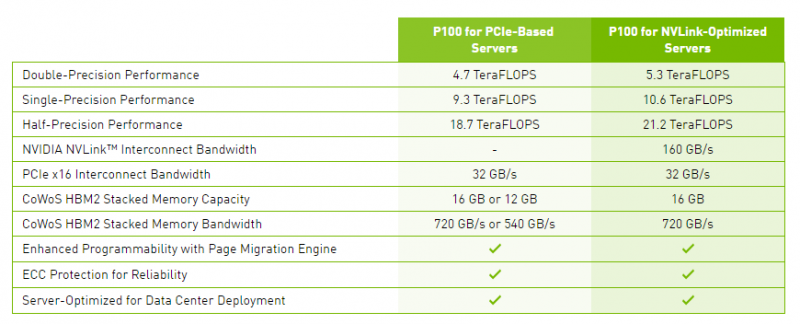

At ISC 16, NVIDIA announced new Pascal PCIe cards. Previously, the NVIDIA Tesla P100 GPU was available only in a mezzanine form factor with NVLink, which we covered in our GTC16 keynote piece. The NVIDIA P100 is a monster of a GPU, supporting single precision speeds of 10.6 TFLOPS. There is one caveat to the Tesla P100: so far it has only been released in the NVIDIA DGX-1 system. We have heard from partners like HPE, QCT, Supermicro and others that they have systems ready (QCT is the ODM for the DGX-1), but NVIDIA is reserving most of its supply for the DGX-1. This is simply to ensure higher margins for NVIDIA as it launches a new architecture.

The NVIDIA Tesla P100-based PCIe cards come in two versions: 16GB and 12GB. Each is a 250W GPU (versus 300W for the current mezzanine form factor) and is passively cooled. Both cards will have the full complement of 3584 stream processors active. The 12GB model is expected to have only 540GB/s of memory bandwidth; however, that is still almost double the 288GB/s of the current-generation Tesla M40.

With the Pascal generation, NVIDIA can perform double precision floating point operations at half the rate of single precision. This is especially important in the HPC market, where double precision performance is a key metric. The Maxwell-based NVIDIA Tesla M40 (not the GRID M40, which we have a tome of knowledge on) ran double precision at only 1/32 of its single precision rate.
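To put the two ratios in perspective, here is a small back-of-the-envelope sketch. It uses the 10.6 TFLOPS single precision figure quoted above for the Tesla P100 and simply applies each FP64:FP32 ratio to it. Applying the 1/32 Maxwell-style ratio to the P100's figure is a hypothetical comparison for illustration only, not a specification of any real card, and these are theoretical peaks rather than measured results.

```python
# Back-of-the-envelope sketch: peak FP64 throughput derived from peak FP32
# throughput and the FP64:FP32 ratio of the architecture.
# Assumption: 10.6 TFLOPS FP32 is the Tesla P100 figure quoted in the article;
# the 1/32 case is a hypothetical "what if", not a real product spec.

def fp64_tflops(fp32_tflops: float, ratio: float) -> float:
    """Return peak double precision TFLOPS given peak single precision TFLOPS and the FP64:FP32 ratio."""
    return fp32_tflops * ratio

P100_FP32_TFLOPS = 10.6
PASCAL_RATIO = 1 / 2     # Pascal P100: FP64 at half the FP32 rate
MAXWELL_RATIO = 1 / 32   # Maxwell Tesla M40: FP64 at 1/32 the FP32 rate

pascal = fp64_tflops(P100_FP32_TFLOPS, PASCAL_RATIO)
maxwell_style = fp64_tflops(P100_FP32_TFLOPS, MAXWELL_RATIO)

print(f"P100 FP64 at a 1/2 ratio:  {pascal:.2f} TFLOPS")
print(f"Same FP32 peak at 1/32:    {maxwell_style:.2f} TFLOPS")
print(f"Advantage of the 1/2 ratio: {pascal / maxwell_style:.0f}x")
```

At the same single precision peak, the half-rate design yields 16 times the double precision throughput of a 1/32-rate design, which is why the ratio matters so much for HPC buyers.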

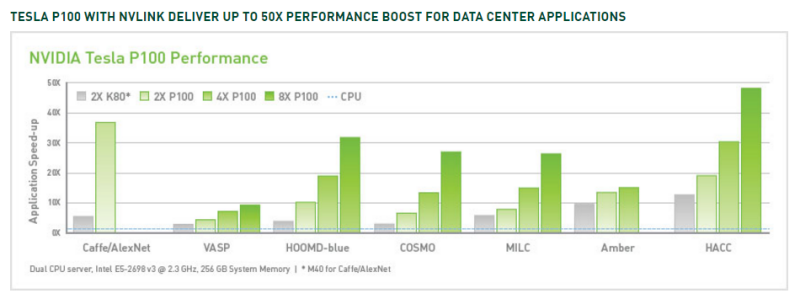

NVIDIA is positioning the cards both for the traditional HPC market and, alongside new versions of its software tools such as cuDNN 5, for machine learning and machine vision applications. NVIDIA has a major lead in these markets, where CUDA has been thoroughly adopted.

Competition from AMD in terms of new GPUs is strong; however, adoption of AMD's OpenCL route appears to be significantly lower than that of NVIDIA's CUDA. Intel has its new Xeon Phi Knights Landing (KNL) series, which is fascinating because it also offers dual 100Gb/s Omni-Path networking at lower TDP levels. The other big benefit KNL has over NVIDIA's current architecture is that the new Intel Xeon Phi x200 series is bootable and will have more memory bandwidth per multi-core accelerator than the NVIDIA GPUs.

Overall, it is an exciting time for the industry. With AI and machine learning exploding, there is now a popular class of applications where jobs typically take hours, days, or even weeks to run, which makes more speed necessary. The NVIDIA Tesla P100 PCIe cards are expected to be available in Q4, so they are still more than three months away. In the meantime, Google has announced its TensorFlow ASIC, Intel is shipping its new Xeon Phi x200 generation in quantity, and we expect more breakthroughs on the FPGA front for machine learning in the coming quarters.