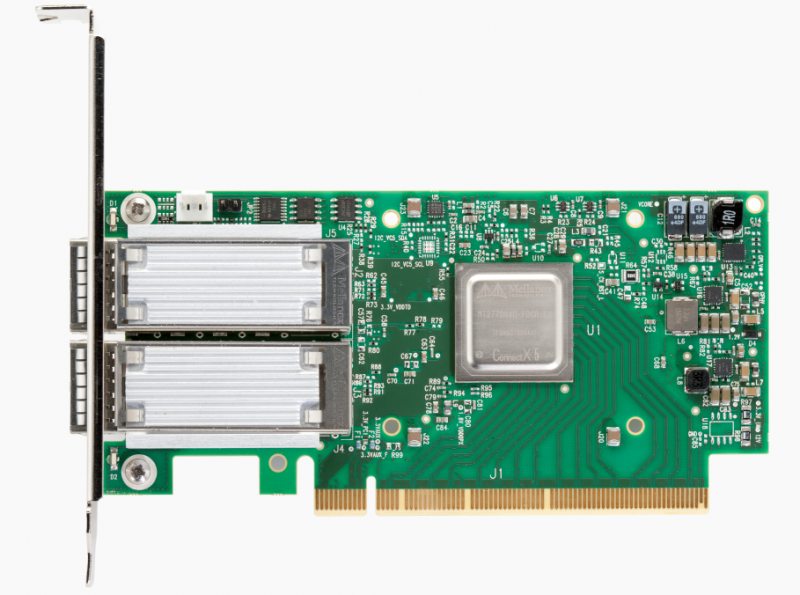

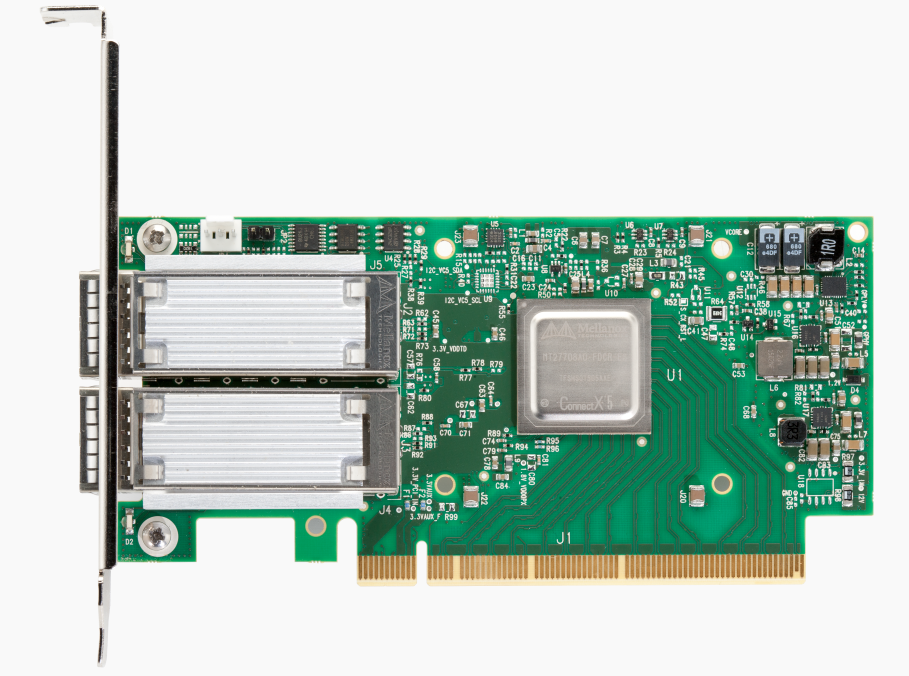

The new Mellanox ConnectX-5 cards are pushing the boundaries of network performance. Ahead of ISC 2016 next week, Mellanox has released new cards supporting both EDR (100Gb/s) InfiniBand and 100GbE. Mellanox has been on an absolute roll lately as a clear leader in both the InfiniBand space (which it completely dominates) and the 25GbE, 50GbE and 100GbE Ethernet adapter space. Innovations such as Mellanox’s multi-host adapters have found their way into designs for hyper-scale clients such as Facebook, and now into commodity servers from companies like ASRock Rack. We noted a not-so-subtle Mellanox marketing blitz on the Computex 2016 show floor and in private booths a few weeks ago, with ConnectX-4 Lx series parts seemingly at every booth.

Mellanox is known for innovating in the HPC market. Years ago, industry observers thought InfiniBand was nearly dead. Mellanox used that opportunity to take a dominant position, and today InfiniBand is by far the most widely adopted network infrastructure in the HPC market. One of the key innovations in the Mellanox ConnectX-5 series is MPI processing offload. For those unfamiliar, here is a primer on MPI. Mellanox is trying to move a significant portion of the HPC workload off primary compute devices and onto its adapters. Mellanox is also expanding support for forward-looking features such as Power8 CAPI and PCIe 4.0, which are not part of mainstream x86 servers.
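One MPI task that ConnectX-5 moves onto the adapter is tag matching: pairing each incoming message with a receive the application posted earlier, keyed on source rank and tag, with wildcards allowed. To make that concrete, here is a minimal software sketch of the generic matching rules in Python. It is not Mellanox's hardware implementation; the class names and the `ANY_SOURCE`/`ANY_TAG` constants are hypothetical stand-ins for MPI's wildcards, and communicators and the rendezvous protocol are ignored.

```python
from collections import deque
from dataclasses import dataclass
from typing import Callable, Deque, Optional, Tuple

# Hypothetical stand-ins for MPI_ANY_SOURCE / MPI_ANY_TAG (values are arbitrary here).
ANY_SOURCE = -1
ANY_TAG = -1


@dataclass
class Message:
    source: int
    tag: int
    payload: bytes


@dataclass
class PostedRecv:
    source: int
    tag: int
    buffer_id: str  # hypothetical label for the application's receive buffer


def matches(recv: PostedRecv, msg: Message) -> bool:
    """A receive matches if source and tag agree or are wildcards."""
    return recv.source in (ANY_SOURCE, msg.source) and recv.tag in (ANY_TAG, msg.tag)


def take_first(queue: Deque, predicate: Callable) -> Optional[object]:
    """Remove and return the oldest queue entry satisfying predicate, if any."""
    for i, item in enumerate(queue):
        if predicate(item):
            del queue[i]  # safe: we return immediately, so iteration does not resume
            return item
    return None


class TagMatcher:
    def __init__(self) -> None:
        self.posted: Deque[PostedRecv] = deque()   # receives still waiting for a message
        self.unexpected: Deque[Message] = deque()  # messages that arrived before a receive

    def on_message(self, msg: Message) -> Optional[Tuple[str, Message]]:
        # An arriving message goes to the earliest posted receive that matches it.
        recv = take_first(self.posted, lambda r: matches(r, msg))
        if recv is None:
            self.unexpected.append(msg)
            return None
        return (recv.buffer_id, msg)

    def post_recv(self, recv: PostedRecv) -> Optional[Tuple[str, Message]]:
        # A new receive first checks messages that already arrived, oldest first.
        msg = take_first(self.unexpected, lambda m: matches(recv, m))
        if msg is None:
            self.posted.append(recv)
            return None
        return (recv.buffer_id, msg)
```

Both queues are searched in arrival order, which mirrors MPI's rule that the earliest matching receive wins. That linear search on every message is the per-message CPU work an adapter-side offload aims to remove from the host.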

Mellanox also innovated in that its adapters and switches work not just with InfiniBand but also with Ethernet. Mellanox was an early adopter of SFP28 and QSFP28, which are the primary connector types for 25/50/100GbE today. Mellanox offers both Ethernet-only (EN) ConnectX-5 products and a ConnectX-5 VPI part that can be configured to run on either InfiniBand or Ethernet networks. Years ago we covered switching a Mellanox ConnectX-3 VPI card from InfiniBand to Ethernet mode to show how easy the process is. We hope to test 25GbE/50GbE/100GbE switches shortly, as we have seen a number of offerings hit the market from both Mellanox and others.

We expect to hear about Mellanox ConnectX-5 design wins in some of the largest supercomputing clusters at ISC next week.

Mellanox ConnectX-5 VPI Quick Specs

Here is a summary of the Mellanox ConnectX-5 VPI specs (more here):

- EDR 100Gb/s InfiniBand or 100Gb/s Ethernet per port and all lower speeds

- Up to 200M messages/second

- Tag Matching and Rendezvous Offloads

- Adaptive Routing on Reliable Transport

- Enhanced Dynamic Connected Transport (DCT)

- Burst Buffer Offloads for Background Checkpointing

- NVMe over Fabric (NVMf) Target Offloads

- Back-End Switch Elimination by Host Chaining

- Embedded PCIe Switch

- Enhanced vSwitch / vRouter Offloads

- Flexible Pipeline

- RoCE for Overlay Networks

- PCIe Gen 4 Support

- Erasure Coding offload

- T10-DIF Signature Handover

- Power8 CAPI support

- Mellanox PeerDirect™ communication acceleration

- Hardware offloads for NVGRE and VXLAN encapsulated traffic

- End-to-end QoS and congestion control

- Hardware-based I/O virtualization
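As a rough sanity check on what the two headline numbers imply together, the arithmetic below converts the 100Gb/s line rate to bytes per second and divides it by the 200M messages/second figure. This is a back-of-the-envelope sketch, not a performance model. It assumes, for illustration only, that the full message rate applies to a single 100Gb/s port, and it ignores encoding and protocol header overhead.

```python
# Back-of-the-envelope arithmetic for the ConnectX-5 headline figures.
# Assumptions (illustrative only): the full 200M messages/s applies to one
# 100Gb/s port, and encoding/header overhead is ignored.

LINE_RATE_BITS_PER_S = 100e9  # 100Gb/s per port
MESSAGE_RATE_PER_S = 200e6    # "up to 200M messages/second"


def bytes_per_second(bits_per_second: float) -> float:
    """Convert a bit rate to a byte rate (8 bits per byte)."""
    return bits_per_second / 8


def ns_per_message(messages_per_second: float) -> float:
    """Time budget per message, in nanoseconds, at a given message rate."""
    return 1e9 / messages_per_second


def bytes_per_message_at_line_rate(bits_per_second: float, messages_per_second: float) -> float:
    """Average message size at which the bandwidth and message-rate limits meet."""
    return bytes_per_second(bits_per_second) / messages_per_second


if __name__ == "__main__":
    print(f"{bytes_per_second(LINE_RATE_BITS_PER_S) / 1e9:.1f} GB/s")  # 12.5 GB/s
    print(f"{ns_per_message(MESSAGE_RATE_PER_S):.1f} ns per message")  # 5.0 ns per message
    print(f"{bytes_per_message_at_line_rate(LINE_RATE_BITS_PER_S, MESSAGE_RATE_PER_S):.1f} bytes")  # 62.5 bytes
```

Under these assumptions, messages smaller than about 62 bytes would hit the message-rate limit before they saturate the link. Larger messages would hit the 100Gb/s line rate first.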

Mellanox ConnectX-5 EN Quick Specs

Here is the summary of the Mellanox ConnectX-5 EN specs (more here):

- 100Gb/s Ethernet per port and all lower speeds

- Up to 200M messages/second

- Adaptive Routing on Reliable Transport

- NVMe over Fabric (NVMf) Target Offloads

- Back-End Switch Elimination by Host Chaining

- Embedded PCIe Switch

- Enhanced vSwitch / vRouter Offloads

- Flexible Pipeline

- RoCE for Overlay Networks

- PCIe Gen 4 Support

- Erasure Coding offload

- T10-DIF Signature Handover

- Power8 CAPI support

- Mellanox PeerDirect™ communication acceleration

- Hardware offloads for NVGRE and VXLAN encapsulated traffic

- End-to-end QoS and congestion control

- Hardware-based I/O virtualization

100Gb/s (12.5 GB/s) is a huge amount of data to transmit; in the future, this kind of bandwidth could give us access to the world’s fastest internet.