Due to a few projects I have been working on recently, it has become time to upgrade the STH colocation facility in Las Vegas. There have also been a few issues with backups/ migrations on the network and adding several seconds to page loads, so it was a good opportunity to get some more hardware and upgrade networking. We also want to have several virtualization labs outside of expensive silicon valley power and real estate so this was an inexpensive and simple way to accomplish those goals.

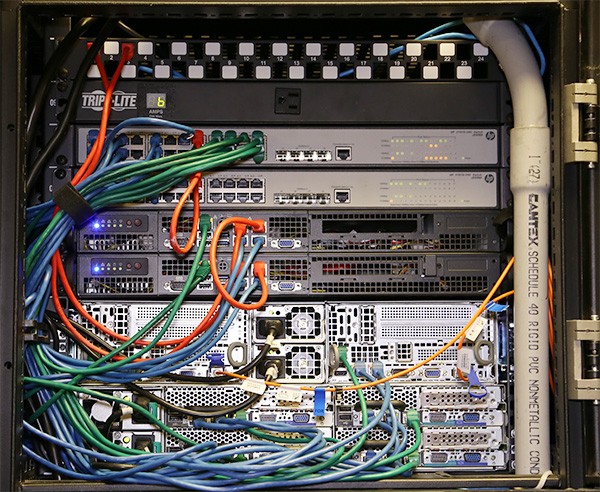

Starting Point the STH May 2014: 1/4 cabinet upgrade

In May of 2014, we upgraded the architecture substantially. We removed a troublesome Dell PowerEdge C6100 and replaced it with a Supermicro FatTwin (Xeon E5 based) that was relatively inexpensive to purchase and populate. The net was that we lost two server nodes but we gained newer/ faster technology. The Xeon E5’s had more cores and were faster, there was more memory (128GB/ node) and we moved to larger solid state disks (480GB on the primary drives.) We also had swapped out the Dell C6100 nodes that were running pfsense for the pair of Intel Rangeley/ Avoton machines for their lower power operation.

Just as we were setting up the two new nodes, we had the great STH failure of 2014 where the pictured Dell C6100 chassis had a power surge that simultaneously fried the Kingston E100 400GB drives in every node. The Intel 320 OS SSDs were fine as were the two spindle disks, but the main data drives in the chassis all died at once. We replaced the chassis but never again has STH been served off of it as a primary.

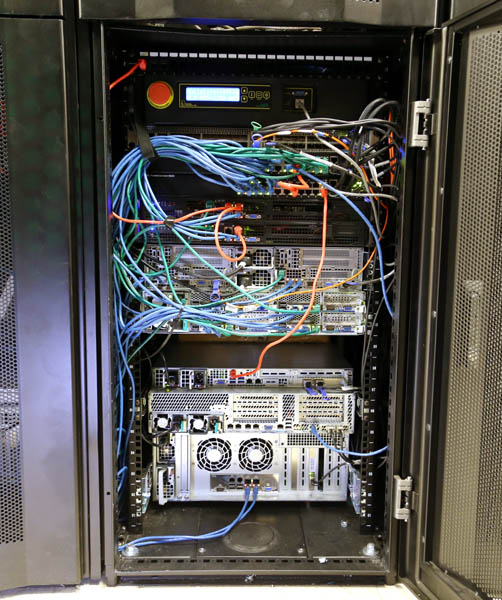

STH April 2015: 1/2 cabinet upgrade

The 2015 upgrade was an exercise in both scaling up and scaling out. We added two new Intel Xeon E5 V2 servers, one for storage and one for compute (Supermicro 4U and Intel 2U respectively.) There was also a dual Intel Xeon E5 V3 server with 128GB of RAM for primary web tasks. The goal was to move primary web serving tasks onto fast SAS SSDs as well as all Intel/ Samsung SATA SSDs. We added capacity and in-chassis hot spares to the compute nodes. The 4U (massive) storage server is not fully populated, but does have SanDisk/ Pliant SLC SAS SSDs and 3.5″ nearline SAS spindle disks, each with hot spares, to serve as the ZFS backup appliance on site. The goal is to relegate the Dell C6100 to lab duties while also moving to higher end hardware.

Looking at the rear of the 1/2 cabinet, one can spot another major upgrade in terms of networking. Note, this was not fully wired yet and cable ties were applied later. We now have 10G and 40G Ethernet supplied by a Gnodal switch with 8x 40GbE QSFP+ ports and 72 10GbE SFP+ ports. The former HP V1910-24G switches no longer fit in the 10Gb architecture, we we also moved to Dell PowerConnect 5524 switches with 24x 1GbE ports 2x 10GbE SFP+ ports and dual HDMI stacking ports each. The fact that we could have uplinks to the Gnodal switch and still stack the two switches using HDMI ports was a major plus. Our May 2014 colo option used the onboard Mellanox FDR port to replicate between the two Hyper-V nodes, however when backups/ snapshots would happen in the old architecture the network would grind and slow down the website. 10GbE fixes much of that issue for us.

Unfortunately we did not get to take final pictures but the main pieces are present in the above. Overall the additions to the colocation facility were two new compute nodes, a new backup and storage node then a complete switch overhaul. We are also re-aligning some of this hardware as we transition to a dual data center design so we will have servers in two locations rather than simply one location. The goal is to ever increase page loads. Every 0.1 second we can shave off of the site speed can save around 90 days of aggregate reader time.